Introduction

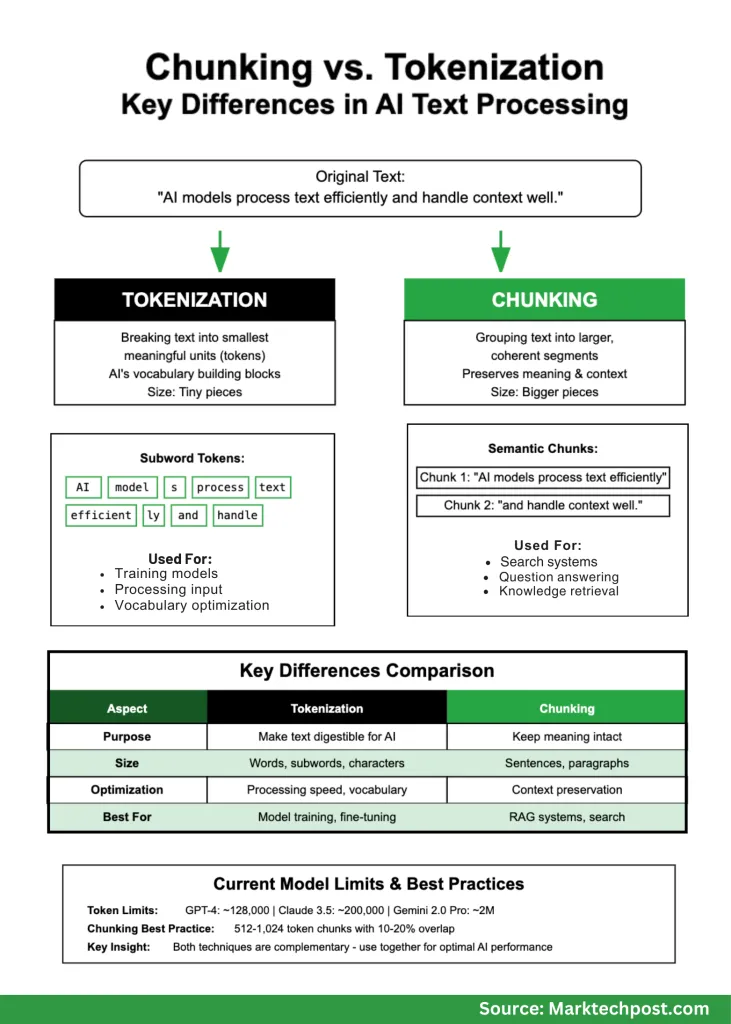

When you’re working with AI and natural language processing, you’ll quickly encounter two fundamental concepts that often get confused: tokenization and chunking. While both involve breaking down text into smaller pieces, they serve completely different purposes and work at different scales. If you’re building AI applications, understanding these differences isn’t just academic—it’s crucial for creating systems that actually work well.

Think of it this way: if you’re making a sandwich, tokenization is like cutting your ingredients into bite-sized pieces, while chunking is like organizing those pieces into logical groups that make sense to eat together. Both are necessary, but they solve different problems.

What is Tokenization?

Tokenization is the process of breaking text into tokens: units drawn from a model’s fixed vocabulary, each mapped to an integer ID that the model actually processes. Tokens are the basic building blocks that language models work with. You can think of them as the “words” in an AI’s vocabulary, though they’re often smaller than actual words.

There are several ways to create tokens:

Word-level tokenization splits text at spaces and punctuation. It’s straightforward but creates problems with rare words that the model has never seen before.

Subword tokenization is more sophisticated and is the standard approach today. Methods like Byte Pair Encoding (BPE), WordPiece, and SentencePiece break words into smaller pieces based on how frequently character combinations appear in training data. This approach handles new or rare words much better, because an unfamiliar word can still be built from known pieces.

Character-level tokenization treats each character as a token. It’s simple and almost never runs into an unknown token, but it creates very long sequences that are harder for models to process efficiently.
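The word-level and character-level approaches are simple enough to sketch with Python’s standard `re` module. This is a simplified illustration, not any production tokenizer: the regular expression, the function names, and the tiny vocabulary built from one sentence are all assumptions made for the example. It shows how a word-level vocabulary collapses an unseen word into a single unknown ID, while character-level tokenization avoids that at the cost of a much longer sequence.

```python
import re

def word_tokenize(text: str) -> list[str]:
    # Runs of word characters become tokens; each punctuation mark is its own token.
    return re.findall(r"\w+|[^\w\s]", text)

def char_tokenize(text: str) -> list[str]:
    # Every character, including spaces, becomes a token.
    return list(text)

def to_ids(tokens: list[str], vocab: dict[str, int], unk_id: int = 0) -> list[int]:
    # Any token missing from the vocabulary maps to the shared unknown ID.
    return [vocab.get(tok, unk_id) for tok in tokens]

text = "AI models process text efficiently."
words = word_tokenize(text)
chars = char_tokenize(text)

# Hypothetical toy vocabulary: ID 0 is reserved for unknown words.
vocab = {"<unk>": 0}
for tok in words:
    vocab.setdefault(tok, len(vocab))

print(words)                   # ['AI', 'models', 'process', 'text', 'efficiently', '.']
print(len(words), len(chars))  # 6 35
# "quickly" was never seen, so it becomes the unknown ID 0.
print(to_ids(word_tokenize("AI models process text quickly."), vocab))  # [1, 2, 3, 4, 0, 6]
```

The same sentence costs 6 word tokens but 35 character tokens, which is the sequence-length penalty described above.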

Here’s a practical example:

- Original text: “AI models process text efficiently.”

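- Word tokens: [“AI”, “models”, “process”, “text”, “efficiently”, “.”]

- Subword tokens (illustrative): [“AI”, “model”, “s”, “process”, “text”, “efficient”, “ly”, “.”]

In this illustrative split, the subword tokenizer breaks “models” into “model” and “s” because both pieces occur frequently in training data. The exact split depends on the vocabulary a given tokenizer has learned, and many production tokenizers keep common words like “models” whole. Reusing pieces this way helps the model handle related words like “modeling” or “modeled” even if it hasn’t seen them before.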
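To make the frequency idea concrete, here is a minimal sketch of how BPE learns its merges. It is a deliberately simplified toy, not the exact algorithm of any specific library: it works on characters rather than bytes, uses no end-of-word marker, and learns from a small hypothetical word-count table. Each step counts adjacent symbol pairs, weighted by word frequency, and merges the most frequent pair into a new symbol.

```python
from collections import Counter

def merge_pair(symbols: list[str], pair: tuple[str, str]) -> list[str]:
    # Replace each adjacent occurrence of `pair` with one merged symbol.
    merged, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return merged

def learn_bpe(word_counts: dict[str, int], num_merges: int) -> list[tuple[str, str]]:
    # Start with every word split into single characters.
    splits = {word: list(word) for word in word_counts}
    merges = []
    for _ in range(num_merges):
        # Count adjacent pairs, weighted by how often each word occurs.
        pairs = Counter()
        for word, count in word_counts.items():
            symbols = splits[word]
            for pair in zip(symbols, symbols[1:]):
                pairs[pair] += count
        if not pairs:
            break  # every word is already a single symbol
        best = max(pairs, key=pairs.get)  # ties go to the pair counted first
        merges.append(best)
        splits = {word: merge_pair(symbols, best) for word, symbols in splits.items()}
    return merges

def apply_bpe(word: str, merges: list[tuple[str, str]]) -> list[str]:
    # Replay the learned merges, in order, on any word.
    symbols = list(word)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return symbols

# Hypothetical training counts.
corpus = {"model": 5, "models": 4, "modeling": 2, "modeled": 2}
merges = learn_bpe(corpus, num_merges=4)
print(merges)                         # [('m', 'o'), ('mo', 'd'), ('mod', 'e'), ('mode', 'l')]
print(apply_bpe("models", merges))    # ['model', 's']
print(apply_bpe("remodels", merges))  # ['r', 'e', 'model', 's']
```

“remodels” never appears in the toy corpus, yet it still decomposes into a familiar piece plus leftovers. Real tokenizers learn tens of thousands of merges from large corpora, so which pieces end up as single tokens is a property of the training data, not a rule of the language.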

What is Chunking?

Chunking takes a completely different approach. Instead of breaking text into tiny pieces, it groups text into larger, coherent segments that preserve meaning and context. When you’re building applications like chatbots or search systems, you need these larger chunks to maintain the flow of ideas: a chunk is typically the unit that gets indexed, retrieved, and handed to the model as context.

Think about reading a research paper. You wouldn’t want each sentence scattered randomly—you’d want related sentences grouped together so the ideas make sense. That’s exactly what chunking does for AI systems.

Here’s how it works in practice:

- Original text: “AI models process text efficiently. They rely on tokens to capture meaning and context. Chunking allows better retrieval.”

- Chunk 1: “AI models process text efficiently.”

- Chunk 2: “They rely on tokens to capture meaning and context.”

- Chunk 3: “Chunking allows better retrieval.”
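The sentence-per-chunk split above can be reproduced with a single regular expression. This is a naive sketch, and `sentence_chunks` is a hypothetical name: it assumes a sentence ends at `.`, `!`, or `?` followed by whitespace, so it will mis-split abbreviations such as “e.g.”; real pipelines usually rely on a proper sentence segmenter.

```python
import re

def sentence_chunks(text: str) -> list[str]:
    # Split after ., ! or ? when followed by whitespace (naive: breaks on abbreviations).
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]

text = ("AI models process text efficiently. They rely on tokens to capture "
        "meaning and context. Chunking allows better retrieval.")
for i, chunk in enumerate(sentence_chunks(text), start=1):
    print(f"Chunk {i}: {chunk}")
```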

Modern chunking strategies have become quite sophisticated:

Fixed-length chunking creates chunks of a specific size (like 500 words or 1000 characters). It’s predictable but sometimes breaks up related ideas awkwardly.

Semantic chunking is smarter: it looks for natural breakpoints where topics change, typically by comparing embeddings of adjacent sentences and starting a new chunk where their similarity drops.

Recursive chunking works hierarchically, first trying to split at paragraph breaks, then sentences, then smaller units, until every piece fits within the size limit.

Sliding window chunking creates overlapping chunks to ensure important context isn’t lost at boundaries.
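Three of these strategies can be sketched without external libraries. The functions below are illustrative and their names and parameters are hypothetical: fixed-length and sliding-window chunking operate on a list of words (a stand-in for real model tokens), and the recursive splitter measures size in characters, trying paragraph breaks, then sentence ends, then spaces. Semantic chunking is left out because it needs an embedding model.

```python
def fixed_length_chunks(words: list[str], size: int) -> list[list[str]]:
    # Back-to-back windows of `size` words; the last one may be shorter.
    return [words[i:i + size] for i in range(0, len(words), size)]


def sliding_window_chunks(words: list[str], size: int, overlap: int) -> list[list[str]]:
    # Each window starts `size - overlap` words after the previous one,
    # so neighboring windows share `overlap` words.
    if not 0 <= overlap < size:
        raise ValueError("overlap must satisfy 0 <= overlap < size")
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(words[start:start + size])
        if start + size >= len(words):
            break  # this window already reaches the end of the text
    return chunks


def recursive_chunks(text: str, max_chars: int,
                     separators: tuple[str, ...] = ("\n\n", ". ", " ")) -> list[str]:
    # Try paragraph breaks first, then sentence ends, then spaces.
    if len(text) <= max_chars:
        return [text]
    if not separators:
        # Nothing left to split on: fall back to hard character cuts.
        return [text[i:i + max_chars] for i in range(0, len(text), max_chars)]
    sep, finer = separators[0], separators[1:]
    parts = text.split(sep)
    # Keep each separator attached to the piece before it, so "".join(chunks) == text.
    pieces = [p + sep for p in parts[:-1]] + [parts[-1]]
    chunks, current = [], ""
    for piece in pieces:
        if len(current) + len(piece) <= max_chars:
            current += piece  # greedily pack pieces that still fit
            continue
        if current:
            chunks.append(current)
        if len(piece) <= max_chars:
            current = piece
        else:
            # This piece alone is too big: split it with the next, finer separator.
            chunks.extend(recursive_chunks(piece, max_chars, finer))
            current = ""
    if current:
        chunks.append(current)
    return chunks


words = "a b c d e f g h i j".split()
print(fixed_length_chunks(words, 4))       # [['a', 'b', 'c', 'd'], ['e', 'f', 'g', 'h'], ['i', 'j']]
print(sliding_window_chunks(words, 4, 1))  # [['a', 'b', 'c', 'd'], ['d', 'e', 'f', 'g'], ['g', 'h', 'i', 'j']]

doc = "First paragraph here.\n\nSecond one is a bit longer. It has two sentences."
print(recursive_chunks(doc, max_chars=30))
```

For `doc`, the paragraph break is tried first; only the second paragraph, which is too long on its own, falls through to sentence splitting, giving three chunks that together reproduce the input exactly.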

The Key Differences That Matter

Understanding when to use each approach makes all the difference in your AI applications:

| Aspect | Tokenization | Chunking |
|---|---|---|
| Size | Tiny pieces (characters, subwords, words) | Bigger pieces (sentences, paragraphs) |
| Goal | Make text digestible for AI models | Keep meaning intact for humans and AI |
| When You Use It | Every time a model reads text, in training and inference | Search systems, question answering |
| What You Optimize For | Processing speed, vocabulary size | Context preservation, retrieval accuracy |

Why This Matters for Real Applications

For AI Model Performance

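When you’re working with language models, tokenization directly affects how much you pay and how fast your system runs. Hosted models such as GPT-4 are billed per token, and every model can only attend to a fixed number of tokens at once (its context window), so text that encodes into fewer tokens costs less and leaves more room. Context windows vary widely: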

- GPT-4 Turbo and GPT-4o: 128,000 tokens (the original GPT-4 offered 8K or 32K)

- Claude 3.5: Up to 200,000 tokens

- Gemini 2.0 Pro: Up to 2 million tokens
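A quick back-of-the-envelope check makes these limits practical. The sketch below rests on stated assumptions: it uses the rough rule of thumb of about four characters per token for English text (use the model’s own tokenizer for exact counts), reuses the context-window figures listed above, and plugs in made-up placeholder prices. The function names are hypothetical; check your provider’s current pricing and limits before relying on any of these numbers.

```python
import math

# Context-window sizes from the list above, in tokens.
CONTEXT_WINDOWS = {
    "GPT-4 Turbo / GPT-4o": 128_000,
    "Claude 3.5": 200_000,
    "Gemini 2.0 Pro": 2_000_000,
}

def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    # Rough heuristic only; real counts depend on the tokenizer and the language.
    return math.ceil(len(text) / chars_per_token)

def fits(prompt_tokens: int, max_output_tokens: int, window: int) -> bool:
    # The prompt and the generated reply share one context window.
    return prompt_tokens + max_output_tokens <= window

def cost_usd(input_tokens: int, output_tokens: int,
             input_price_per_m: float, output_price_per_m: float) -> float:
    # Providers usually quote separate prices per million input and output tokens.
    return (input_tokens * input_price_per_m
            + output_tokens * output_price_per_m) / 1_000_000

document = "x" * 600_000            # stand-in for a ~600,000-character document
prompt = estimate_tokens(document)  # 150000
for model, window in CONTEXT_WINDOWS.items():
    print(model, fits(prompt, 4_000, window))
# Hypothetical prices: $3 per million input tokens, $15 per million output tokens.
print(round(cost_usd(prompt, 4_000, 3.0, 15.0), 2))  # 0.51
```

At roughly 150,000 tokens plus a 4,000-token reply, the document overflows a 128,000-token window but fits the larger ones; a tokenizer that encoded the same text more compactly would shift both the fit and the bill.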

Vocabulary size matters too. One 2024 study of vocabulary scaling laws estimates that larger models benefit from larger vocabularies: LLaMA-2 70B uses a 32,000-token vocabulary, but the study’s analysis suggests something closer to 216,000 would perform better. The trade-off runs both ways: a bigger vocabulary encodes the same text in fewer tokens, but it also adds parameters to the model’s embedding and output layers.
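A rough calculation shows one side of that efficiency trade-off. Assuming LLaMA-2 70B’s hidden size of d = 8,192 and separate (untied) input-embedding and output-projection matrices, each of size V × d, the parameters that scale with vocabulary size V are:

$$
\begin{aligned}
P_{\text{vocab}} &= 2\,V\,d \\
V = 32{,}000: \quad P_{\text{vocab}} &= 2 \times 32{,}000 \times 8{,}192 = 524{,}288{,}000 \approx 0.52\text{B} \\
V = 216{,}000: \quad P_{\text{vocab}} &= 2 \times 216{,}000 \times 8{,}192 = 3{,}538{,}944{,}000 \approx 3.54\text{B}
\end{aligned}
$$

That is under 1% versus about 5% of a 70-billion-parameter model, paid for in exchange for shorter token sequences.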

For Search and Question-Answering Systems

Chunking strategy can make or break your RAG (Retrieval-Augmented Generation) system. If your chunks are too small, you lose context. Too big, and you overwhelm the model with irrelevant information. Get it right, and your system provides accurate, helpful answers. Get it wrong, and the model works from fragmented or diluted context, which makes hallucinations and poor results more likely.

Teams building enterprise AI systems commonly report that careful chunking noticeably reduces those frustrating cases where the AI makes up facts or gives nonsensical answers.
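The core retrieval step is easy to sketch, and it shows why chunk size matters: whichever chunk wins is passed to the model whole, so an oversized chunk drags irrelevant text along and an undersized one may omit the context the answer needs. Real RAG systems score chunks with an embedding model and a vector index; this dependency-free stand-in uses word-count vectors and cosine similarity instead, and all function names are hypothetical.

```python
import math
import re
from collections import Counter

def bag_of_words(text: str) -> Counter:
    # Lower-cased word counts; a crude stand-in for an embedding vector.
    return Counter(re.findall(r"\w+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse count vectors.
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    # Rank every chunk by similarity to the query and keep the top k.
    q = bag_of_words(query)
    return sorted(chunks, key=lambda c: cosine(q, bag_of_words(c)), reverse=True)[:k]

chunks = [
    "AI models process text efficiently.",
    "They rely on tokens to capture meaning and context.",
    "Chunking allows better retrieval.",
]
print(retrieve("How does chunking help retrieval?", chunks, k=1))
# ['Chunking allows better retrieval.']
```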

Where You’ll Use Each Approach

Tokenization is Essential For:

Training new models – You can’t train a language model without first tokenizing your training data. The tokenization strategy shapes vocabulary coverage and sequence length, and through them both training cost and how well the model learns.

Fine-tuning existing models – When you adapt a pre-trained model for your specific domain (like medical or legal text), you need to carefully consider whether the existing tokenization works for your specialized vocabulary.

Cross-language applications – Subword tokenization is particularly helpful for languages with rich word formation, such as Finnish or Turkish, and for multilingual systems that share one vocabulary across many languages.

Chunking is Critical For:

Building company knowledge bases – When you want employees to ask questions and get accurate answers from your internal documents, proper chunking ensures the AI retrieves relevant, complete information.

Document analysis at scale – Whether you’re processing legal contracts, research papers, or customer feedback, chunking helps maintain document structure and meaning.

Search systems – Modern search goes beyond keyword matching. Semantic chunking keeps each indexed passage focused on a single topic, which makes it easier to match a user’s query to the most relevant passage.

Current Best Practices (What Actually Works)

After watching many real-world implementations, here’s what tends to work:

For Chunking:

- Start with 512-1024 token chunks for most applications

- Add 10-20% overlap between chunks to preserve context

- Use semantic boundaries when possible (end of sentences, paragraphs)

- Test with your actual use cases and adjust based on results

- Monitor for hallucinations and tweak your approach accordingly
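The first three guidelines can be combined into one chunker: pack whole sentences until a token budget is reached, then start the next chunk with a few trailing sentences as overlap. This is a sketch under stated assumptions: `pack_sentences` is a hypothetical name, `count_tokens` is a stand-in that counts whitespace-separated words (replace it with your model’s tokenizer), and sentences are split with a naive punctuation rule that mis-handles abbreviations.

```python
import re

def count_tokens(text: str) -> int:
    # Hypothetical stand-in: whitespace word count, not a real model tokenizer.
    return len(text.split())

def pack_sentences(text: str, max_tokens: int = 512,
                   overlap_ratio: float = 0.15) -> list[str]:
    # Naive sentence split on ., ! or ? followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    chunks: list[str] = []
    current: list[str] = []
    for sentence in sentences:
        if current and count_tokens(" ".join(current + [sentence])) > max_tokens:
            chunks.append(" ".join(current))
            # Carry trailing whole sentences forward while they fit in the overlap budget.
            carried: list[str] = []
            for prev in reversed(current):
                if count_tokens(" ".join([prev] + carried)) > overlap_ratio * max_tokens:
                    break
                carried.insert(0, prev)
            # Drop carried sentences if they would push the new chunk over budget.
            while carried and count_tokens(" ".join(carried + [sentence])) > max_tokens:
                carried.pop(0)
            current = carried
        current.append(sentence)
    if current:
        chunks.append(" ".join(current))
    # A single sentence longer than max_tokens still ends up as one oversized chunk.
    return chunks

text = "A a a a. B b b b. C c c c. D d d d."
for chunk in pack_sentences(text, max_tokens=8, overlap_ratio=0.5):
    print(chunk)
# A a a a. B b b b.
# B b b b. C c c c.
# C c c c. D d d d.
```

Because overlap is measured in whole sentences, chunk boundaries always fall at sentence ends. With the defaults (512 tokens, 15% overlap), each chunk repeats at most 76 tokens from the previous one.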

For Tokenization:

- Use established methods (BPE, WordPiece, SentencePiece) rather than building your own

- Consider your domain—medical or legal text might need specialized approaches

- Monitor out-of-vocabulary rates in production

- Balance between compression (fewer tokens) and meaning preservation
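Both monitoring points can be tracked with a few ratios computed over a sample of real traffic. The sketch below takes any tokenizer as a plain function, so it is not tied to a specific library; the `<unk>` token name, the whitespace word count, the `tokenizer_report` name, and the toy vocabulary are hypothetical. A rising unknown-token rate or tokens-per-word figure on domain text suggests the vocabulary is missing or fragmenting specialized terms, while characters per token measures compression.

```python
from typing import Callable

def tokenizer_report(texts: list[str], tokenize: Callable[[str], list[str]],
                     unk_token: str = "<unk>") -> dict[str, float]:
    n_chars = n_words = n_tokens = n_unk = 0
    for text in texts:
        tokens = tokenize(text)
        n_chars += len(text)
        n_words += len(text.split())  # whitespace words as the baseline unit
        n_tokens += len(tokens)
        n_unk += sum(tok == unk_token for tok in tokens)
    return {
        # Share of tokens the tokenizer could not represent.
        "unk_rate": n_unk / n_tokens if n_tokens else 0.0,
        # "Fertility": higher means words are being split into more pieces.
        "tokens_per_word": n_tokens / n_words if n_words else 0.0,
        # Compression: higher means fewer tokens for the same text.
        "chars_per_token": n_chars / n_tokens if n_tokens else 0.0,
    }

# Hypothetical word-level tokenizer with a tiny general-purpose vocabulary.
VOCAB = {"the", "patient", "has", "a", "fever"}

def toy_tokenize(text: str) -> list[str]:
    return [w if w in VOCAB else "<unk>" for w in text.lower().split()]

report = tokenizer_report(["The patient has a fever", "The patient has tachycardia"],
                          toy_tokenize)
print(report)
```

Here one of nine tokens is `<unk>` because the general vocabulary lacks “tachycardia”, the kind of signal that suggests a domain needs a different or extended tokenizer.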

Summary

Tokenization and chunking aren’t competing techniques—they’re complementary tools that solve different problems. Tokenization makes text digestible for AI models, while chunking preserves meaning for practical applications.

As AI systems become more sophisticated, both techniques continue evolving. Context windows are getting larger, vocabularies are becoming more efficient, and chunking strategies are getting smarter about preserving semantic meaning.

The key is understanding what you’re trying to accomplish. Building a chatbot? Focus on chunking strategies that preserve conversational context. Training a model? Optimize your tokenization for efficiency and coverage. Building an enterprise search system? You’ll need both—smart tokenization for efficiency and intelligent chunking for accuracy.