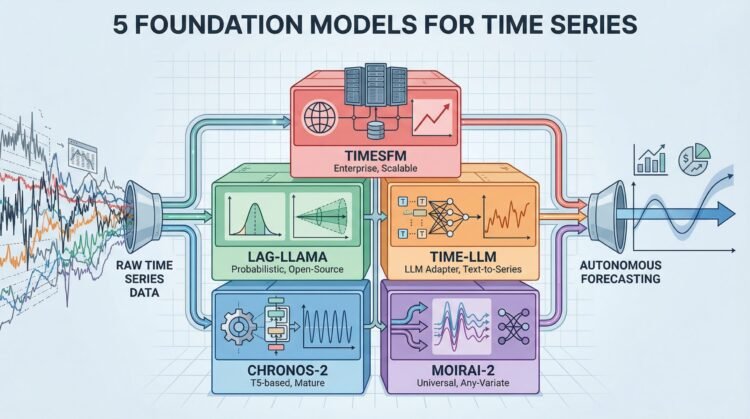

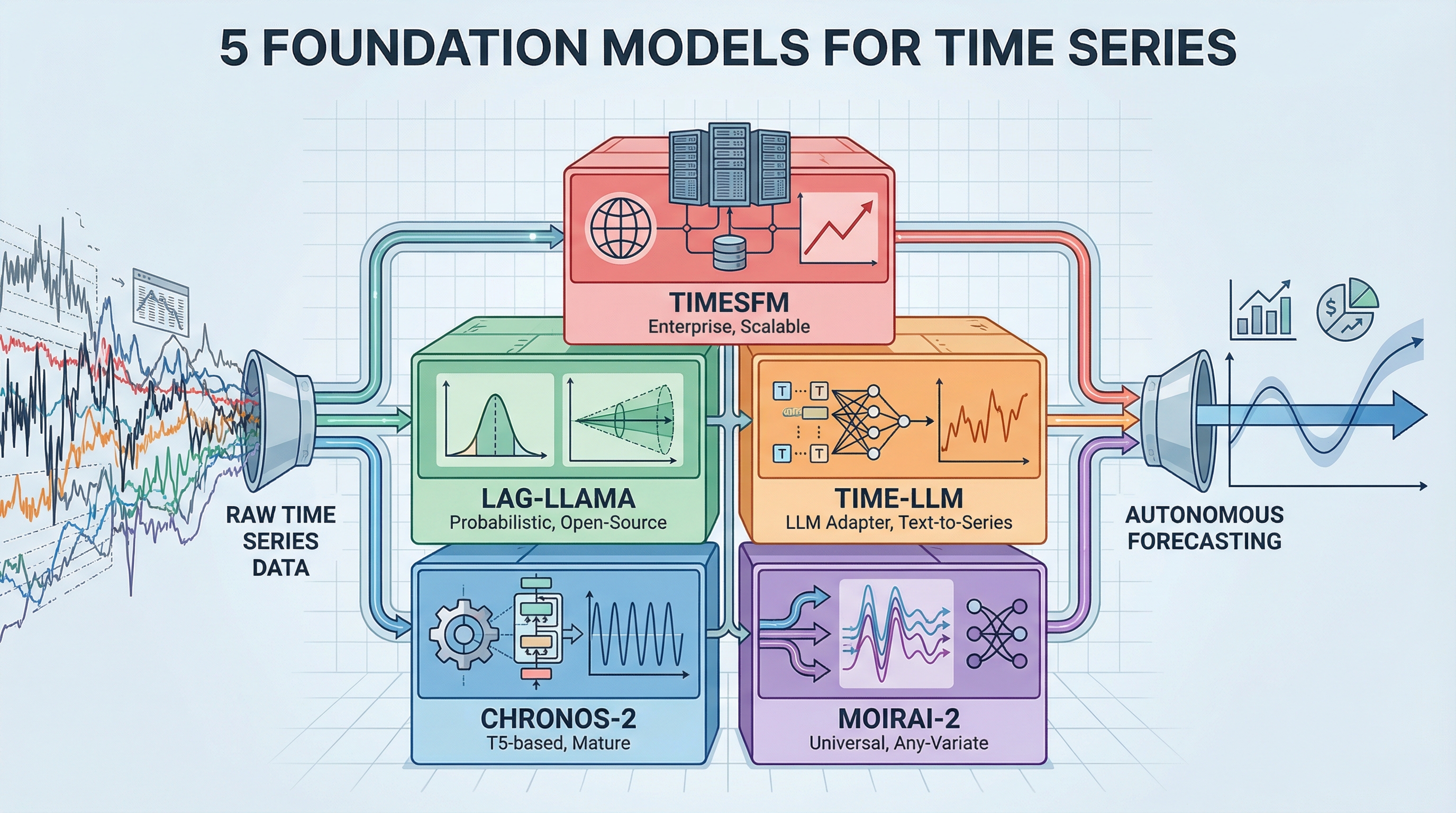

The 2026 Time Series Toolkit: 5 Foundation Models for Autonomous Forecasting

Image by Author

Introduction

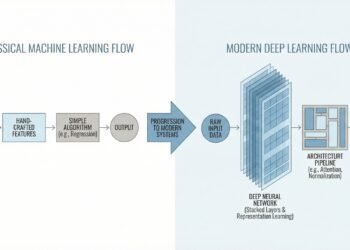

Most forecasting work involves building custom models for each dataset: fit an ARIMA here, tune an LSTM there, wrestle with Prophet’s hyperparameters. Foundation models flip this around. They’re pretrained on massive amounts of time series data and can forecast new patterns without additional training, similar to how GPT can write about topics it has never explicitly seen. This list covers five foundation models worth knowing for building production forecasting systems in 2026.

The shift from task-specific models to foundation model orchestration changes how teams approach forecasting. Instead of spending weeks tuning parameters and encoding domain expertise for each new dataset, teams start from pretrained models that have already learned common temporal patterns. The payoff is faster deployment, better generalization across domains, and lower computational cost, without extensive machine learning infrastructure.

1. Amazon Chronos-2 (The Production-Ready Foundation)

Amazon Chronos-2 is the most mature option for teams moving to foundation model forecasting. The original Chronos models are pretrained transformers based on the T5 architecture that tokenize time series values through scaling and quantization, treating forecasting as a language modeling task. The October 2025 Chronos-2 release expanded the family’s capabilities to support univariate, multivariate, and covariate-informed forecasting.
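The scaling-and-quantization idea can be illustrated with a minimal sketch. It is not the Chronos implementation: the function names, the uniform bins, the bin count of 10, and the clipping range of [-5, 5] are all assumptions chosen to keep the example short.

```python
from typing import List, Tuple


def mean_scale(context: List[float]) -> Tuple[List[float], float]:
    """Divide by the mean absolute value so series of any magnitude share one range."""
    scale = sum(abs(x) for x in context) / len(context)
    if scale == 0:
        scale = 1.0  # all-zero series: leave values unchanged
    return [x / scale for x in context], scale


def quantize(scaled: List[float], num_bins: int = 10,
             low: float = -5.0, high: float = 5.0) -> List[int]:
    """Map each scaled value to the index of a uniform bin on [low, high]."""
    width = (high - low) / num_bins
    tokens = []
    for x in scaled:
        clipped = min(max(x, low), high)
        idx = int((clipped - low) / width)
        tokens.append(min(idx, num_bins - 1))  # the value `high` goes in the last bin
    return tokens


def dequantize(tokens: List[int], scale: float, num_bins: int = 10,
               low: float = -5.0, high: float = 5.0) -> List[float]:
    """Map token ids back to bin centers and undo the scaling."""
    width = (high - low) / num_bins
    return [(low + (t + 0.5) * width) * scale for t in tokens]


scaled, scale = mean_scale([10.0, 20.0, 30.0])
tokens = quantize(scaled)
print(tokens, dequantize(tokens, scale))
```

Once values are integer tokens, a language-model-style network can be trained to predict the next token, and sampled tokens are mapped back to numeric forecasts with the inverse step. The round trip is lossy: values that fall in the same bin decode to the same bin center.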

The model delivers strong zero-shot forecasting that, in the authors’ benchmarks, beats tuned statistical models out of the box, with a reported throughput of 300+ forecasts per second on a single GPU. With millions of downloads on Hugging Face and native integration with AWS tools like SageMaker and AutoGluon, Chronos-2 has the strongest documentation and community support among foundation models. The Chronos family comes in multiple sizes, from 9 million to 710 million parameters, so teams can balance performance against computational constraints. Check out the implementation on GitHub, review the technical approach in the research paper, or grab pretrained models from Hugging Face.

2. Salesforce MOIRAI-2 (The Universal Forecaster)

Salesforce MOIRAI-2 tackles the practical challenge of handling messy, real-world time series data through its universal forecasting architecture. This decoder-only transformer foundation model adapts to any data frequency, any number of variables, and any prediction length within a single framework. The model’s “Any-Variate Attention” mechanism dynamically adjusts to multivariate time series without requiring fixed input dimensions, setting it apart from models designed for specific data structures.
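One way to accept any number of variables without fixed input dimensions is to flatten all variates into a single token sequence and tag each token with its variate and time index. The sketch below assumes that approach for illustration only; the function names are hypothetical and this is not MOIRAI-2's code.

```python
from typing import List, Sequence, Tuple

Token = Tuple[int, int, float]  # (variate_id, time_id, value)


def flatten_variates(series: Sequence[Sequence[float]]) -> List[Token]:
    """Flatten variates of possibly different lengths into one token sequence."""
    tokens = []
    for variate_id, values in enumerate(series):
        for time_id, value in enumerate(values):
            tokens.append((variate_id, time_id, value))
    return tokens


def same_variate_mask(tokens: Sequence[Token]) -> List[List[bool]]:
    """Pairwise flag: True where two tokens belong to the same variate.

    An attention layer could use such a flag as a learned bias, so it can tell
    within-variate pairs from cross-variate pairs without a fixed variate count.
    """
    return [[a[0] == b[0] for b in tokens] for a in tokens]


tokens = flatten_variates([[1.0, 2.0], [3.0]])
mask = same_variate_mask(tokens)
print(tokens)
print(mask[0])
```

Because the sequence length simply grows with the number of variates, the same model can take two variables for one dataset and twenty for the next.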

MOIRAI-2 ranks highly on the GIFT-Eval leaderboard among non-data-leaking models, with strong performance on both in-distribution and zero-shot tasks. Training on the LOTSA dataset — 27 billion observations across nine domains — gives the model robust generalization to new forecasting scenarios. Teams benefit from fully open-source development with active maintenance, making it valuable for complex, real-world applications involving multiple variables and irregular frequencies. The project’s GitHub repository includes implementation details, while the technical paper and Salesforce blog post explain the universal forecasting approach. Pretrained models are on Hugging Face.

3. Lag-Llama (The Open-Source Backbone)

Lag-Llama brings probabilistic forecasting capabilities to foundation models through a decoder-only transformer inspired by Meta’s LLaMA architecture. Unlike models that produce only point forecasts, Lag-Llama generates full probability distributions with uncertainty intervals for each prediction step — the quantified uncertainty that decision-making processes need. The model uses lagged features as covariates and shows strong few-shot learning when fine-tuned on small datasets.
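Two ideas from this paragraph can be sketched in a few lines: building lagged features from a series, and turning sampled forecasts into an uncertainty interval. This is a simplified illustration, not Lag-Llama's code. The function names and the lag set are assumptions, and the samples are made-up numbers standing in for draws from a probabilistic model.

```python
import statistics
from typing import List, Sequence, Tuple


def lag_features(series: Sequence[float],
                 lags: Sequence[int]) -> List[Tuple[List[float], float]]:
    """Pair each target value with the values observed `lag` steps earlier."""
    max_lag = max(lags)
    rows = []
    for t in range(max_lag, len(series)):
        features = [series[t - lag] for lag in lags]
        rows.append((features, series[t]))
    return rows


def prediction_interval(samples: Sequence[float]) -> Tuple[float, float]:
    """Central 80% interval: the 10th and 90th percentiles of sampled forecasts."""
    deciles = statistics.quantiles(samples, n=10)
    return deciles[0], deciles[-1]


rows = lag_features([1.0, 2.0, 3.0, 4.0, 5.0], lags=[1, 2])
print(rows[0])  # features for the third observation and its target

# Hypothetical samples for a single forecast step.
samples = [float(x) for x in range(1, 100)]
print(prediction_interval(samples))
```

In practice the samples would come from the model's predicted distribution at each step, and the interval width gives downstream decisions a measure of how much to trust the point forecast.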

Lag-Llama is fully open source under a permissive license, which makes it accessible to teams of any size, and it runs on either CPU or GPU, which removes infrastructure barriers. Its academic backing, with publications at major machine learning conferences, adds credibility. For teams prioritizing transparency, reproducibility, and probabilistic outputs over raw performance metrics, Lag-Llama offers a reliable foundation model backbone. The GitHub repository contains implementation code, and the research paper details the probabilistic forecasting methodology.

4. Time-LLM (The LLM Adapter)

Time-LLM takes a different approach by converting existing large language models into forecasting systems without modifying the original model weights. This reprogramming framework translates time series patches into text prototypes, letting frozen LLMs like GPT-2, LLaMA, or BERT process temporal patterns; only the lightweight reprogramming and output layers are trained. The “Prompt-as-Prefix” technique injects domain knowledge through natural language, so teams can use their existing language model infrastructure for forecasting tasks.
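The patching step that comes before reprogramming can be sketched as a sliding window over the series. The function name, patch length, and stride below are illustrative assumptions, not Time-LLM's defaults.

```python
from typing import List, Sequence


def make_patches(series: Sequence[float], patch_len: int,
                 stride: int) -> List[List[float]]:
    """Slice a series into fixed-length windows that overlap when stride < patch_len."""
    last_start = len(series) - patch_len
    return [list(series[i:i + patch_len]) for i in range(0, last_start + 1, stride)]


series = [float(x) for x in range(10)]
for patch in make_patches(series, patch_len=4, stride=2):
    print(patch)
```

Each patch then plays the role of one input token. Grouping several time steps into a token shortens the sequence the language model has to process and gives each token some local context.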

This adapter approach works well for organizations already running LLMs in production, since it eliminates the need to deploy and maintain separate forecasting models. The framework supports multiple backbone models, making it easy to switch between different LLMs as newer versions become available. Time-LLM represents the “agentic AI” approach to forecasting, where general-purpose language understanding capabilities transfer to temporal pattern recognition. Access the implementation through the GitHub repository, or review the methodology in the research paper.

5. Google TimesFM (The Big Tech Standard)

Google TimesFM provides enterprise-grade foundation model forecasting backed by one of the largest technology research organizations. This patch-based decoder-only model, pretrained on a corpus of roughly 100 billion real-world time points, delivers strong zero-shot performance across multiple domains with minimal configuration. The model design prioritizes production deployment at scale, reflecting its origins in Google’s internal forecasting workloads.

TimesFM is battle-tested through extensive use in Google’s production environments, which builds confidence for teams deploying foundation models in business scenarios. The model balances performance and efficiency, avoiding the computational overhead of larger alternatives while maintaining competitive accuracy. Ongoing support from Google Research means continued development and maintenance, making TimesFM a reliable choice for teams seeking enterprise-grade foundation model capabilities. Access the model through the GitHub repository, review the architecture in the technical paper, or read the implementation details in the Google Research blog post.

Conclusion

Foundation models transform time series forecasting from a model training problem into a model selection challenge. Chronos-2 offers production maturity, MOIRAI-2 handles complex multivariate data, Lag-Llama provides probabilistic outputs, Time-LLM leverages existing LLM infrastructure, and TimesFM delivers enterprise reliability. Evaluate models based on your specific needs around uncertainty quantification, multivariate support, infrastructure constraints, and deployment scale. Start with zero-shot evaluation on representative datasets to identify which foundation model fits your forecasting needs before investing in fine-tuning or custom development.
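A zero-shot comparison of this kind can be sketched as a small harness that holds out the end of a series and scores each candidate with MASE (mean absolute scaled error). Everything here is an assumption made for illustration: the `Forecaster` signature is hypothetical, and the two simple baselines stand in for wrappers around real foundation models.

```python
from typing import Callable, Dict, List, Sequence

# Hypothetical interface: (history, horizon) -> list of point forecasts.
Forecaster = Callable[[Sequence[float], int], List[float]]


def mase(history: Sequence[float], actual: Sequence[float],
         forecast: Sequence[float], season: int = 1) -> float:
    """Forecast MAE divided by the in-sample MAE of a seasonal-naive forecast.

    Assumes the history is not constant, otherwise the scale would be zero.
    """
    naive_errors = [abs(history[t] - history[t - season])
                    for t in range(season, len(history))]
    scale = sum(naive_errors) / len(naive_errors)
    mae = sum(abs(a - f) for a, f in zip(actual, forecast)) / len(actual)
    return mae / scale


def last_value(history: Sequence[float], horizon: int) -> List[float]:
    """Stand-in model: repeat the last observation."""
    return [history[-1]] * horizon


def drift(history: Sequence[float], horizon: int) -> List[float]:
    """Stand-in model: extend the average slope of the history."""
    slope = (history[-1] - history[0]) / (len(history) - 1)
    return [history[-1] + slope * (h + 1) for h in range(horizon)]


def evaluate(models: Dict[str, Forecaster], series: Sequence[float],
             horizon: int, season: int = 1) -> Dict[str, float]:
    """Hold out the last `horizon` points and score every model on them."""
    history, actual = series[:-horizon], series[-horizon:]
    return {name: mase(history, actual, model(history, horizon), season)
            for name, model in models.items()}


series = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
scores = evaluate({"last_value": last_value, "drift": drift}, series, horizon=2)
print(scores)
```

A MASE below 1 means the candidate beats the naive baseline on that series. Running the same harness over several representative datasets, with each foundation model wrapped to match the `Forecaster` signature, gives a like-for-like ranking before any fine-tuning budget is spent.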