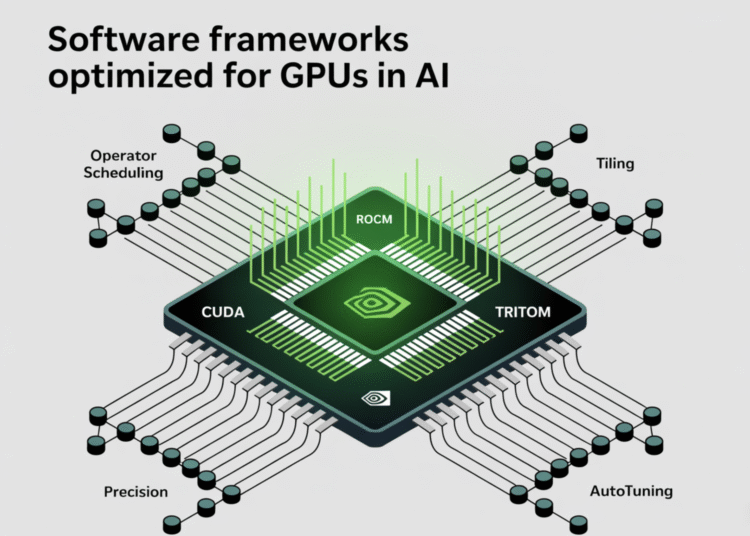

Deep-learning throughput hinges on how effectively a compiler stack maps tensor programs to GPU execution: thread/block schedules, memory movement, and instruction selection (e.g., Tensor Core MMA pipelines). This article examines four dominant stacks (CUDA, ROCm, Triton, and TensorRT) from the compiler’s perspective and explains which optimizations move the needle in practice.

What actually determines performance on modern GPUs

Across vendors, the same levers recur:

- Operator scheduling & fusion: reduce kernel launches and round-trips to HBM; expose longer producer→consumer chains for register/shared-memory reuse. TensorRT and cuDNN “runtime fusion engines” exemplify this for attention and conv blocks.

- Tiling & data layout: match tile shapes to Tensor Core/WGMMA/WMMA native fragment sizes; avoid shared-memory bank conflicts and partition camping. CUTLASS documents warp-level GEMM tiling for both Tensor Cores and CUDA cores.

- Precision & quantization: FP16/BF16/FP8 for training/inference; INT8/INT4 (calibrated or QAT) for inference. TensorRT automates calibration and kernel selection under these precisions.

- Graph capture & runtime specialization: graph execution to amortize launch overheads; dynamic fusion of common subgraphs (e.g., attention). cuDNN 9 added graph support for attention fusion engines.

- Autotuning: search tile sizes, unroll factors, and pipelining depths per arch/SKU. Triton and CUTLASS expose explicit autotune hooks; TensorRT performs builder-time tactic selection.
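To make the fusion lever above concrete, here is a back-of-the-envelope sketch in Python. It assumes a chain of elementwise ops in which every unfused kernel reads its input from HBM and writes its output back, while a fused kernel reads once and writes once. The function names and the example sizes are illustrative assumptions, not taken from any library.

```python
def unfused_bytes(num_elements: int, num_ops: int, bytes_per_element: int) -> int:
    """HBM traffic when every op is its own kernel: one read + one write per op."""
    return 2 * num_ops * num_elements * bytes_per_element


def fused_bytes(num_elements: int, bytes_per_element: int) -> int:
    """HBM traffic when the chain is fused: intermediates never leave the chip."""
    return 2 * num_elements * bytes_per_element


if __name__ == "__main__":
    n = 1 << 20   # illustrative tensor size (assumption)
    ops = 3       # e.g. bias add, activation, scale
    fp16 = 2      # bytes per FP16 element
    before = unfused_bytes(n, ops, fp16)
    after = fused_bytes(n, fp16)
    print(f"unfused: {before} B, fused: {after} B, reduction: {before / after:.1f}x")
```

Under this simple model the traffic reduction equals the number of fused ops; real kernels with reductions or multiple inputs will deviate from it.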

With that lens, here’s how each stack implements the above.

CUDA: nvcc/ptxas, cuDNN, CUTLASS, and CUDA Graphs

Compiler path. nvcc compiles CUDA code to PTX, and ptxas then lowers PTX to SASS (architecture-specific machine code). Optimization flags must be supplied to both the host and device phases; for kernels, the key mechanism is -Xptxas, which forwards options to ptxas. Developers often miss that -O3 alone affects only host code.
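As a small illustration of keeping host and device flags separate, the Python sketch below assembles an nvcc command line for a build script. The helper name `nvcc_command`, the file names, and the default `sm_90` target are assumptions for the example; only the flags themselves (-O3, -arch, -Xptxas, and the ptxas options -O and -v) come from the toolchain.

```python
import shlex


def nvcc_command(source: str, output: str, arch: str = "sm_90",
                 ptxas_opt: int = 3, verbose: bool = True) -> list[str]:
    """Build an nvcc argv that sets host and device optimization separately."""
    ptxas_flags = [f"-O{ptxas_opt}"]
    if verbose:
        ptxas_flags.append("-v")  # ptxas reports register/shared-memory usage
    return [
        "nvcc",
        "-O3",                             # host-side optimization level
        f"-arch={arch}",                   # target architecture (assumed value)
        "-Xptxas", ",".join(ptxas_flags),  # forwarded to ptxas for device code
        source,
        "-o", output,
    ]


if __name__ == "__main__":
    # Prints the command; pass the list to subprocess.run to actually build.
    print(shlex.join(nvcc_command("kernel.cu", "kernel")))
```

Calling `nvcc_command("kernel.cu", "kernel", ptxas_opt=0)` gives the diagnostic variant with device optimization turned off.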

Kernel generation & libraries.

- CUTLASS provides parametric templates for GEMM/conv, implementing warp-level tiling, Tensor Core MMA pipelines, and smem iterators designed for conflict-free access—canonical references for writing peak kernels, including Hopper’s WGMMA path.

- cuDNN 9 introduced runtime fusion engines (notably for attention blocks), native CUDA Graph integration for those engines, and updates for new compute capabilities—materially reducing dispatch overheads and improving memory locality in Transformer workloads.

Performance implications.

- Moving from unfused PyTorch ops to cuDNN attention fusion typically cuts kernel launches and global memory traffic; combined with CUDA Graphs, it reduces CPU bottlenecks in short-sequence inference.

- On Hopper/Blackwell, aligning tile shapes to WGMMA/Tensor Core native sizes is decisive; CUTLASS tutorials quantify how mis-sized tiles waste tensor-core throughput.

When CUDA is the right tool. You need maximum control over instruction selection, occupancy, and smem choreography; or you’re extending kernels beyond library coverage while staying on NVIDIA GPUs.

ROCm: HIP/Clang toolchain, rocBLAS/MIOpen, and the 6.x series

Compiler path. ROCm uses Clang/LLVM to compile HIP (CUDA-like) into GCN/CDNA/RDNA ISA. The 6.x series has focused on performance and framework coverage; release notes track component-level optimizations and hardware/OS support.

Libraries and kernels.

- rocBLAS and MIOpen implement GEMM/conv primitives with arch-aware tiling and algorithm selection similar in spirit to cuBLAS/cuDNN. The consolidated changelog highlights iterative perf work across these libraries.

- Recent ROCm work includes better Triton enablement on AMD GPUs, enabling Python-level kernel authoring while still lowering through LLVM to AMD backends.

Performance implications.

- On AMD GPUs, matching LDS (shared memory) bank widths and vectorized global loads to matrix tile shapes is as pivotal as smem bank alignment on NVIDIA. Compiler-assisted fusion in frameworks (e.g., attention) plus library autotuning in rocBLAS/MIOpen typically closes a large fraction of the gap to handwritten kernels, contingent on architecture/driver. Release documentation indicates continuous tuner improvements in 6.0–6.4.x.
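The bank-alignment point can be illustrated with a small model of strided shared-memory (or LDS) access. The sketch below assumes 32 banks of one word each and 32 threads per access; bank count, bank width, and wavefront size differ across architectures, so treat them as parameters rather than facts about any particular GPU. The function name is hypothetical.

```python
from collections import Counter


def conflict_degree(stride_words: int, num_threads: int = 32,
                    num_banks: int = 32) -> int:
    """Worst-case number of threads hitting the same bank when thread t
    accesses word index t * stride_words. 1 means conflict-free."""
    banks = Counter((t * stride_words) % num_banks for t in range(num_threads))
    return max(banks.values())


if __name__ == "__main__":
    # Column access into a 32-word-wide row-major tile, then the same tile
    # padded by one word per row.
    print("stride 32:", conflict_degree(32))
    print("stride 33:", conflict_degree(33))
```

In this model, padding each row by one word turns a fully serialized column access into a conflict-free one, which is the usual motivation for padded or swizzled tile layouts.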

When ROCm is the right tool. You need native support and optimization on AMD accelerators, with HIP portability from existing CUDA-style kernels and a clear LLVM toolchain.

Triton: a DSL and compiler for custom kernels

Compiler path. Triton is a Python-embedded DSL that lowers via LLVM; it handles vectorization, memory coalescing, and register allocation while giving explicit control over block sizes and program IDs. Build docs show the LLVM dependency and custom builds; NVIDIA’s developer materials discuss Triton’s tuning for newer architectures (e.g., Blackwell) with FP16/FP8 GEMM improvements.
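The block-size and program-ID model can be emulated in plain Python to show the indexing pattern. This is not Triton code: the loop over `pid` stands in for the launch grid, the inner loop stands in for work that a real kernel performs as one vectorized block, and `blocked_add` is a hypothetical name.

```python
def blocked_add(x: list[float], y: list[float], block_size: int) -> list[float]:
    """Emulate a 1D blocked kernel: each 'program' handles block_size elements
    and masks out offsets that fall past the end of the input."""
    n = len(x)
    out = [0.0] * n
    num_programs = (n + block_size - 1) // block_size  # ceil-div launch grid
    for pid in range(num_programs):                    # stands in for the program ID
        offsets = [pid * block_size + i for i in range(block_size)]
        mask = [off < n for off in offsets]            # boundary mask
        for off, keep in zip(offsets, mask):
            if keep:
                out[off] = x[off] + y[off]
    return out


if __name__ == "__main__":
    # 5 elements with a block size of 4: the second program is mostly masked.
    print(blocked_add([1, 2, 3, 4, 5], [10, 20, 30, 40, 50], block_size=4))
```

The mask is what lets the last block run the same code path as the others instead of falling back to a scalar tail loop.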

Optimizations.

- Autotuning over tile sizes, num_warps, and pipelining stages; static masking for boundary conditions without scalar fallbacks; shared-memory staging and software pipelining to overlap global loads with compute.

- Triton’s design aims to automate the error-prone parts of CUDA-level optimization while leaving block-level tiling choices to the author; the original announcement outlines that separation of concerns.
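Conceptually, autotuning is a search over a small configuration space scored by measured run time. The sketch below shows that loop in plain Python under stated assumptions: it is not Triton's autotuner, the `autotune` helper is hypothetical, and `toy_cost` is a made-up cost surface standing in for real kernel timings.

```python
from itertools import product
from typing import Callable, Dict, Iterable, Tuple

Config = Dict[str, int]


def autotune(cost: Callable[[Config], float],
             block_sizes: Iterable[int],
             num_warps: Iterable[int],
             num_stages: Iterable[int]) -> Tuple[Config, float]:
    """Exhaustively evaluate every config and return the cheapest one."""
    best_cfg, best_cost = None, float("inf")
    for bs, nw, ns in product(block_sizes, num_warps, num_stages):
        cfg = {"BLOCK_SIZE": bs, "num_warps": nw, "num_stages": ns}
        c = cost(cfg)  # in practice: measured kernel time for this config
        if c < best_cost:
            best_cfg, best_cost = cfg, c
    return best_cfg, best_cost


def toy_cost(cfg: Config) -> float:
    """Made-up cost surface with a minimum at BLOCK_SIZE=128, 4 warps, 3 stages."""
    return (abs(cfg["BLOCK_SIZE"] - 128) / 128
            + abs(cfg["num_warps"] - 4) / 4
            + abs(cfg["num_stages"] - 3) / 3)


if __name__ == "__main__":
    cfg, cost = autotune(toy_cost, [32, 64, 128, 256], [2, 4, 8], [2, 3, 4])
    print(cfg, cost)
```

A real tuner would replace `toy_cost` with repeated timed launches and cache the winner per input shape and GPU, since the best configuration varies across both.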

Performance implications.

- Triton shines when you need a fused, shape-specialized kernel outside library coverage (e.g., bespoke attention variants, normalization-activation-matmul chains). On modern NVIDIA parts, vendor collaborations report architecture-specific improvements in the Triton backend, reducing the penalty versus CUTLASS-style kernels for common GEMMs.

When Triton is the right tool. You want near-CUDA performance for custom fused ops without writing SASS/WMMA, and you value Python-first iteration with autotuning.

TensorRT (and TensorRT-LLM): builder-time graph optimization for inference

Compiler path. TensorRT ingests ONNX or framework graphs and emits a hardware-specific engine. During the build, it performs layer/tensor fusion, precision calibration (INT8, FP8/FP16), and kernel tactic selection; best-practice docs describe these builder phases. TensorRT-LLM extends this with LLM-specific runtime optimizations.

Optimizations.

- Graph-level: constant folding, concat-slice canonicalization, conv-bias-activation fusion, attention fusion.

- Precision: post-training calibration (entropy/percentile/MSE) and per-tensor quantization, plus SmoothQuant/QAT workflows in TensorRT-LLM.

- Runtime: paged-KV cache, in-flight batching, and scheduling for multi-stream/multi-GPU deployments (TensorRT-LLM docs).
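The post-training calibration step listed above reduces, in its simplest form, to choosing a scale from sample activations and rounding to integers. The sketch below shows symmetric per-tensor INT8 quantization with a max or percentile-style clip. It is a simplified illustration with hypothetical function names, not TensorRT's calibrator implementation, and the entropy-based method works differently.

```python
def calibrate_scale(values: list[float], percentile: float = 100.0) -> float:
    """Pick a symmetric per-tensor scale from calibration data.
    percentile=100 is max calibration; lower values clip outliers."""
    mags = sorted(abs(v) for v in values)
    idx = min(len(mags) - 1, int(round(percentile / 100.0 * (len(mags) - 1))))
    amax = mags[idx]
    return amax / 127.0 if amax > 0 else 1.0


def quantize(values: list[float], scale: float) -> list[int]:
    """Round to the nearest INT8 step and clamp to [-127, 127]."""
    return [max(-127, min(127, round(v / scale))) for v in values]


def dequantize(q: list[int], scale: float) -> list[float]:
    """Map INT8 codes back to approximate real values."""
    return [qi * scale for qi in q]


if __name__ == "__main__":
    data = [-1.0, -0.5, 0.0, 0.25, 1.0]
    scale = calibrate_scale(data)
    q = quantize(data, scale)
    print(q, dequantize(q, scale))
```

Lowering `percentile` trades clipping error on rare outliers for finer resolution on the bulk of the distribution, which is the trade-off the different calibration methods try to balance.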

Performance implications.

- The largest wins typically come from: end-to-end INT8 (or FP8 on Hopper/Blackwell where supported), removing framework overhead via a single engine, and aggressive attention fusion. TensorRT’s builder produces per-arch engine plans to avoid generic kernels at runtime.

When TensorRT is the right tool. Production inference on NVIDIA GPUs where you can pre-compile an optimized engine and benefit from quantization and large-graph fusion.

Practical guidance: choosing and tuning the stack

- Training vs. inference.

  - Training/experimental kernels → CUDA + CUTLASS (NVIDIA) or ROCm + rocBLAS/MIOpen (AMD); Triton for custom fused ops.

  - Production inference on NVIDIA → TensorRT/TensorRT-LLM for global graph-level gains.

- Exploit architecture-native instructions.

  - On NVIDIA Hopper/Blackwell, ensure tiles map to WGMMA/WMMA sizes; CUTLASS materials show how warp-level GEMM and smem iterators should be structured.

  - On AMD, align LDS usage and vector widths to CU datapaths; leverage ROCm 6.x autotuners and Triton-on-ROCm for shape-specialized ops.

- Fuse first, then quantize.

  - Kernel/graph fusion reduces memory traffic; quantization reduces bandwidth and increases math density. TensorRT’s builder-time fusions plus INT8/FP8 often deliver multiplicative gains.

- Use graph execution for short sequences.

  - CUDA Graphs integrated with cuDNN attention fusions amortize launch overheads in autoregressive inference.

- Treat compiler flags as first-class.

  - For CUDA, remember device-side flags, for example -Xptxas -O3,-v (and -Xptxas -O0 when diagnosing). Host-only -O3 isn’t sufficient.
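The graph-execution advice can be sanity-checked with a simple launch-bound model. The sketch below assumes a pessimistic regime in which CPU launch overhead is not hidden behind GPU execution; the function name and all timing values are placeholders to be replaced with profiler measurements, not benchmarks.

```python
from typing import Optional


def step_time_us(num_kernels: int, kernel_us: float, launch_us: float,
                 graph_launch_us: Optional[float] = None) -> float:
    """Time for one decode step in a launch-bound regime.
    Without a graph, every kernel pays launch_us of CPU-side overhead;
    with a graph, the whole step pays graph_launch_us once."""
    compute = num_kernels * kernel_us
    if graph_launch_us is None:
        return compute + num_kernels * launch_us
    return compute + graph_launch_us


if __name__ == "__main__":
    # Placeholder numbers: 100 short kernels per decode step.
    eager = step_time_us(100, kernel_us=2.0, launch_us=5.0)
    graphed = step_time_us(100, kernel_us=2.0, launch_us=5.0, graph_launch_us=10.0)
    print(f"eager: {eager} us, graphed: {graphed} us")
```

The benefit shrinks as kernels get longer, because launch overhead then overlaps with execution; that is why the advice targets short sequences.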

References: