In this tutorial, we build our own custom GPT-style chat system from scratch using a local Hugging Face model. We start by loading a lightweight instruction-tuned model that understands conversational prompts, then wrap it inside a structured chat framework that includes a system role, conversation memory, and assistant responses. We define how the agent interprets context, constructs messages, and optionally uses small built-in tools to fetch local data or simulated search results. By the end, we have a fully functional conversational model that behaves like a personalized GPT running locally. Check out the FULL CODES here.

```python
# Notebook cell: the leading "!" runs a shell command (Colab/Jupyter syntax).
!pip install transformers accelerate sentencepiece --quiet

import torch
from transformers import AutoTokenizer, AutoModelForCausalLM
from typing import List, Tuple, Optional
import textwrap, json, os
```

We begin by installing the essential libraries and importing the required modules. The pip command adds transformers, accelerate (needed for automatic device placement), and sentencepiece; torch is preinstalled on Google Colab, so we only import it. With these in place, we can work with Hugging Face models directly inside Colab.
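If you want to confirm the environment before downloading a multi-gigabyte model, the standard library's `importlib.metadata` can report installed package versions without importing anything heavy. This is a minimal sketch; the `installed_versions` helper and the `REQUIRED` list are our own illustrative names, not part of any library.

```python
# Minimal sketch: report which of the tutorial's packages are installed,
# without importing them (importing torch alone can take several seconds).
from importlib import metadata

REQUIRED = ["torch", "transformers", "accelerate", "sentencepiece"]

def installed_versions(packages):
    """Map each distribution name to its installed version, or None if missing."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions

if __name__ == "__main__":
    for name, ver in installed_versions(REQUIRED).items():
        print(f"{name:14s} {ver or 'NOT INSTALLED'}")
```

Any package reported as missing can be installed before moving on to the model-loading step.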

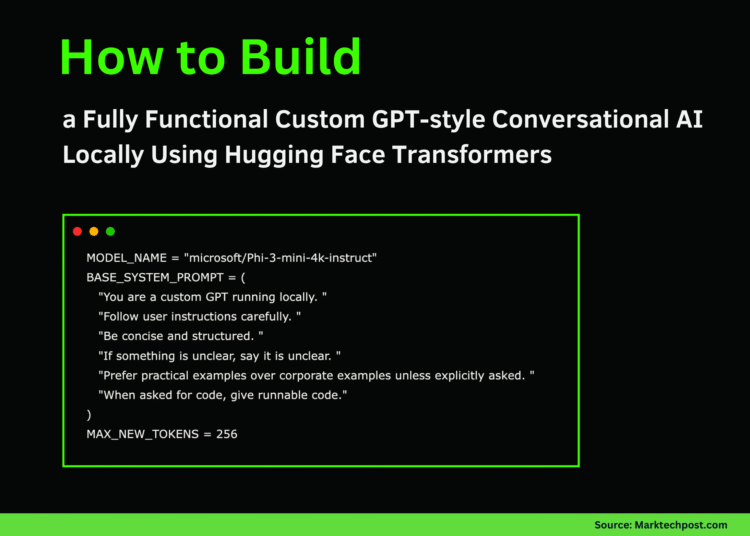

```python
MODEL_NAME = "microsoft/Phi-3-mini-4k-instruct"

# Adjacent string literals are joined into one string; the trailing spaces
# keep the sentences apart.
BASE_SYSTEM_PROMPT = (
    "You are a custom GPT running locally. "
    "Follow user instructions carefully. "
    "Be concise and structured. "
    "If something is unclear, say it is unclear. "
    "Prefer practical examples over corporate examples unless explicitly asked. "
    "When asked for code, give runnable code."
)

MAX_NEW_TOKENS = 256  # upper bound on the length of each generated reply
```

We configure the model name, define the system prompt that governs the assistant’s behavior, and cap each reply at 256 new tokens. The system prompt establishes how our custom GPT should respond: concise, structured, and practical. This section defines the foundation of our model’s identity and instruction style.
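Because the system prompt is built from adjacent string literals, Python concatenates the fragments with nothing in between, so the trailing space inside each fragment is what keeps sentences apart. The sketch below flags the two slips this style invites: a missing trailing space and a doubled one. The `check_prompt` helper and the `SAMPLE_PROMPT` name are hypothetical, chosen so the cell does not overwrite the tutorial's own variables.

```python
# Python joins adjacent string literals at compile time, so the trailing
# space at the end of each fragment is what separates the sentences.
SAMPLE_PROMPT = (
    "You are a custom GPT running locally. "
    "Follow user instructions carefully. "
    "Be concise and structured. "
    "If something is unclear, say it is unclear. "
    "Prefer practical examples over corporate examples unless explicitly asked. "
    "When asked for code, give runnable code."
)

def check_prompt(prompt: str) -> list:
    """Return a list of simple formatting problems found in a prompt string."""
    problems = []
    if "  " in prompt:
        problems.append("double space")
    if prompt != prompt.strip():
        problems.append("leading/trailing whitespace")
    # A fragment missing its trailing space produces e.g. "carefully.Be".
    for i in range(len(prompt) - 1):
        if prompt[i] == "." and prompt[i + 1].isalpha():
            problems.append(f"missing space after period at index {i}")
    return problems

print(check_prompt(SAMPLE_PROMPT))  # []
```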

```python
print("Loading model...")
tokenizer = AutoTokenizer.from_pretrained(MODEL_NAME)
# Some tokenizers define no padding token; reuse end-of-sequence for padding.
if tokenizer.pad_token_id is None:
    tokenizer.pad_token_id = tokenizer.eos_token_id

model = AutoModelForCausalLM.from_pretrained(
    MODEL_NAME,
    # Half precision on GPU roughly halves memory; CPU falls back to float32.
    torch_dtype=torch.float16 if torch.cuda.is_available() else torch.float32,
    device_map="auto",  # let accelerate place the weights on available devices
)
model.eval()  # inference mode (disables dropout)
print("Model loaded.")
```

We load the tokenizer and model from Hugging Face and prepare them for inference. The weights are loaded in float16 when a GPU is available and in float32 otherwise, and device_map="auto" lets accelerate place them on the available hardware, so the GPU is used whenever it is present. Once loaded, our model is ready to generate responses.
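The dtype choice matters mostly for memory. As a rough, weights-only estimate, assuming Phi-3-mini's roughly 3.8 billion parameters, each float16 parameter takes 2 bytes and each float32 parameter takes 4. The sketch below does that arithmetic; real usage is higher once activations, the KV cache, and framework overhead are added, so treat the numbers as a floor. `weight_memory_gb` is an illustrative helper, not a library function.

```python
# Back-of-the-envelope estimate of the memory needed just to hold the weights.
# Assumption: Phi-3-mini has roughly 3.8 billion parameters; activations,
# the KV cache and framework overhead come on top of this figure.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "bfloat16": 2}

def weight_memory_gb(n_params: float, dtype: str) -> float:
    """Weights-only memory in decimal gigabytes (1 GB = 1e9 bytes)."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e9

for dtype in ("float16", "float32"):
    print(f"{dtype}: ~{weight_memory_gb(3.8e9, dtype):.1f} GB")
# float16: ~7.6 GB
# float32: ~15.2 GB
```

At about 7.6 GB versus 15.2 GB, the float16 weights fit comfortably on a 16 GB GPU such as the T4 commonly available in Colab, while float32 weights alone would nearly fill it.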

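```python
ConversationHistory = List[Tuple[str, str]]
history: ConversationHistory = [("system", BASE_SYSTEM_PROMPT)]

def wrap_text(s: str, w: int = 100) -> str:
    # Wrap each line separately so bullet lists and paragraph breaks survive
    # (textwrap.wrap on the whole string would turn newlines into spaces).
    return "\n".join(textwrap.fill(line, width=w) for line in s.splitlines())

def build_chat_prompt(history: ConversationHistory, user_msg: str) -> str:
    # Phi-3 chat format: role tag, newline, content, then <|end|> closes the turn.
    prompt_parts = []
    for role, content in history:
        if role == "system":
            prompt_parts.append(f"<|system|>\n{content}<|end|>\n")
        elif role == "user":
            prompt_parts.append(f"<|user|>\n{content}<|end|>\n")
        elif role == "assistant":
            prompt_parts.append(f"<|assistant|>\n{content}<|end|>\n")
    prompt_parts.append(f"<|user|>\n{user_msg}<|end|>\n")
    # Leave the assistant turn open so the model writes the reply.
    prompt_parts.append("<|assistant|>\n")
    return "".join(prompt_parts)
```

We initialize the conversation history with the system role and create a prompt builder that formats every turn in Phi-3’s chat format: a role tag such as `<|user|>`, the message, and an `<|end|>` marker. After replaying the history, the builder appends the new user message and an open `<|assistant|>` tag for the model to complete. Keeping this structure consistent with the format the model was fine-tuned on helps it tell system, user, and assistant turns apart. We also define wrap_text, which wraps long lines for display while preserving the line breaks of lists and paragraphs.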
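The chat format described on Phi-3's model card renders each turn as a role tag, a newline, the content, and an `<|end|>` marker, with a bare `<|assistant|>` tag left open at the end to cue the reply. The standalone sketch below renders that layout so it can be inspected or unit-tested without loading the model; `build_phi3_prompt` and `ROLE_TAGS` are illustrative names.

```python
from typing import List, Tuple

ConversationHistory = List[Tuple[str, str]]

ROLE_TAGS = {"system": "<|system|>", "user": "<|user|>", "assistant": "<|assistant|>"}

def build_phi3_prompt(history: ConversationHistory, user_msg: str) -> str:
    """Render turns as: role tag, newline, content, <|end|>, newline."""
    parts = []
    for role, content in history + [("user", user_msg)]:
        if role not in ROLE_TAGS:
            raise ValueError(f"unknown role: {role!r}")
        parts.append(f"{ROLE_TAGS[role]}\n{content}<|end|>\n")
    parts.append("<|assistant|>\n")  # open the assistant turn for generation
    return "".join(parts)

print(build_phi3_prompt([("system", "Be concise.")], "Hi!"))
```

With the real tokenizer, `tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)`, given a list of `{"role": ..., "content": ...}` dicts, renders the conversation from the template shipped with the model, which makes a useful cross-check against a hand-written builder.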

```python
def local_tool_router(user_msg: str) -> Optional[str]:
    # Normalize only for prefix matching; the original casing is kept for the query.
    msg = user_msg.strip().lower()
    if msg.startswith("search:"):
        query = user_msg.split(":", 1)[-1].strip()
        # Placeholder output: no real search is performed.
        return f"Search results about '{query}':\n- Key point 1\n- Key point 2\n- Key point 3"
    if msg.startswith("docs:"):
        topic = user_msg.split(":", 1)[-1].strip()
        return f"Documentation extract on '{topic}':\n1. The agent orchestrates tools.\n2. The model consumes output.\n3. Responses become memory."
    return None  # no tool prefix: the message goes to the model unchanged
```

We add a lightweight tool router that simulates tasks such as search or documentation retrieval. It checks whether a user message starts with a prefix such as “search:” or “docs:” and, if so, returns canned placeholder text built around the query. Whatever the router returns is appended to the user message as extra context before the prompt is built, which is the basic pattern behind tool-augmented agents.
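The prefix checks above grow by one `if` per tool. A common alternative, sketched here as an assumption rather than as the tutorial's design, keeps a registry that maps a tool name to a handler and splits the message once with `str.partition`. The handlers are hypothetical stand-ins that return placeholder text, just like the original router.

```python
from typing import Callable, Dict, Optional

# Hypothetical handlers returning placeholder text, like the original router.
def fake_search(query: str) -> str:
    return f"Search results about '{query}':\n- Key point 1\n- Key point 2\n- Key point 3"

def fake_docs(topic: str) -> str:
    return f"Documentation extract on '{topic}':\n1. The agent orchestrates tools."

TOOLS: Dict[str, Callable[[str], str]] = {"search": fake_search, "docs": fake_docs}

def route(user_msg: str, tools: Dict[str, Callable[[str], str]] = TOOLS) -> Optional[str]:
    """Dispatch 'name: argument' messages to a registered tool; else return None."""
    name, sep, arg = user_msg.partition(":")
    if not sep:
        return None  # no colon at all, so no tool call
    handler = tools.get(name.strip().lower())
    return handler(arg.strip()) if handler else None

print(route("SEARCH: local LLMs"))
print(route("hello there"))  # None
```

Adding a tool then means adding one dictionary entry. One behavioral difference: this version also accepts a space before the colon (“search : ...”), which the startswith checks reject.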

```python
def generate_reply(history: ConversationHistory, user_msg: str) -> str:
    # If a tool prefix matches, append its output to the message as extra context.
    tool_context = local_tool_router(user_msg)
    if tool_context:
        user_msg = user_msg + "\n\nUseful context:\n" + tool_context

    prompt = build_chat_prompt(history, user_msg)
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)

    with torch.no_grad():
        output_ids = model.generate(
            **inputs,
            max_new_tokens=MAX_NEW_TOKENS,
            do_sample=True,
            top_p=0.9,
            temperature=0.6,
            pad_token_id=tokenizer.eos_token_id,
        )

    # generate() returns the prompt followed by the continuation, so keep only
    # the newly generated tokens. Splitting the decoded text on "<|assistant|>"
    # is unreliable: Phi-3 registers that tag as a special token, so
    # skip_special_tokens=True removes it from the decoded string.
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
    reply = tokenizer.decode(new_tokens, skip_special_tokens=True).strip()

    history.append(("user", user_msg))
    history.append(("assistant", reply))
    return reply

def save_history(history: ConversationHistory, path: str = "chat_history.json") -> None:
    data = [{"role": r, "content": c} for (r, c) in history]
    with open(path, "w", encoding="utf-8") as f:
        json.dump(data, f, indent=2, ensure_ascii=False)

def load_history(path: str = "chat_history.json") -> ConversationHistory:
    # Start a fresh conversation if nothing has been saved yet.
    if not os.path.exists(path):
        return [("system", BASE_SYSTEM_PROMPT)]
    with open(path, "r", encoding="utf-8") as f:
        data = json.load(f)
    return [(item["role"], item["content"]) for item in data]
```

We define the main reply function: it runs the tool router, builds the prompt from the history, samples up to MAX_NEW_TOKENS new tokens (top_p=0.9, temperature=0.6), decodes only the newly generated tokens, and appends both the user turn and the reply to the history. We also add functions to save the conversation to JSON and load it back, starting a fresh conversation when no saved file exists. Together, these form the operational core of our custom GPT.
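The history is a list of `(role, content)` tuples, but JSON has no tuple type, so saving stores each turn as a `{"role", "content"}` object and loading has to rebuild the tuples. The round-trip check below confirms that a saved conversation comes back identical, including non-ASCII text. It writes to a temporary directory and uses renamed helpers (`save_history_json` and `load_history_json` are hypothetical names chosen to avoid shadowing the tutorial's functions), opening files with an explicit `encoding="utf-8"` so the result does not depend on the platform's default encoding.

```python
import json
import os
import tempfile
from typing import List, Tuple

ConversationHistory = List[Tuple[str, str]]

def save_history_json(history: ConversationHistory, path: str) -> None:
    data = [{"role": r, "content": c} for (r, c) in history]
    with open(path, "w", encoding="utf-8") as f:
        json.dump(data, f, indent=2, ensure_ascii=False)

def load_history_json(path: str) -> ConversationHistory:
    with open(path, "r", encoding="utf-8") as f:
        data = json.load(f)
    # json.load returns dicts; rebuild the (role, content) tuples explicitly.
    return [(item["role"], item["content"]) for item in data]

sample_history = [("system", "Be concise."), ("user", "Grüße"), ("assistant", "日本語 OK")]
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "chat_history.json")
    save_history_json(sample_history, path)
    restored = load_history_json(path)

print(restored == sample_history)  # True
```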

```python
print("\n--- Demo turn 1 ---")
demo_reply_1 = generate_reply(history, "Explain what this custom GPT setup is doing in 5 bullet points.")
print(wrap_text(demo_reply_1))

print("\n--- Demo turn 2 ---")
# The "search:" prefix triggers the simulated search tool.
demo_reply_2 = generate_reply(history, "search: agentic ai with local models")
print(wrap_text(demo_reply_2))

def interactive_chat():
    print("\nChat ready. Type 'exit' to stop.")
    while True:
        try:
            user_msg = input("\nUser: ").strip()
        except EOFError:
            break
        if user_msg.lower() in ("exit", "quit", "q"):
            break
        reply = generate_reply(history, user_msg)
        print("\nAssistant:\n" + wrap_text(reply))

# Uncomment to chat with the model interactively.
# interactive_chat()

print("\nCustom GPT initialized successfully.")
```

We test the entire setup by running two demo prompts, the second of which exercises the simulated search tool, and print the wrapped responses. We also create an optional interactive chat loop for conversing with the assistant directly. Together, these confirm that our custom GPT runs locally from end to end.
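Because interactive_chat reads from `input()` and calls the model, it is awkward to test. One option, sketched here, is to pass the messages in as a list and inject the reply function, so the loop's control flow (whitespace trimming, exit words, history updates) can be checked with a stub in place of `generate_reply`. `run_chat` and `echo_reply` are hypothetical names; in the notebook you would pass `generate_reply` and the real `history` instead.

```python
from typing import Callable, Iterable, List, Tuple

ConversationHistory = List[Tuple[str, str]]
EXIT_WORDS = ("exit", "quit", "q")

def run_chat(inputs: Iterable[str],
             reply_fn: Callable[[ConversationHistory, str], str],
             history: ConversationHistory) -> List[str]:
    """Drive the chat loop from an iterable of messages instead of input()."""
    replies = []
    for raw in inputs:
        user_msg = raw.strip()
        if user_msg.lower() in EXIT_WORDS:
            break
        replies.append(reply_fn(history, user_msg))
    return replies

def echo_reply(history: ConversationHistory, user_msg: str) -> str:
    """Hypothetical stand-in for generate_reply: echoes and records the turn."""
    reply = f"You said: {user_msg}"
    history.append(("user", user_msg))
    history.append(("assistant", reply))
    return reply

hist: ConversationHistory = [("system", "Be concise.")]
print(run_chat(["hello", "  how are you? ", "exit", "never reached"], echo_reply, hist))
# ['You said: hello', 'You said: how are you?']
print(len(hist))  # 5
```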

In conclusion, we designed and ran a custom conversational agent that reproduces the structure of a GPT-style chat assistant (a system prompt, conversation memory, and simple tools) without calling any hosted API. We saw how a local model can be made interactive through prompt orchestration, lightweight tool routing, and conversational memory management. This approach helps us understand the logic behind commercial GPT systems, and it lets us experiment with our own rules, behaviors, and integrations transparently, fully offline once the model weights have been downloaded and cached.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.