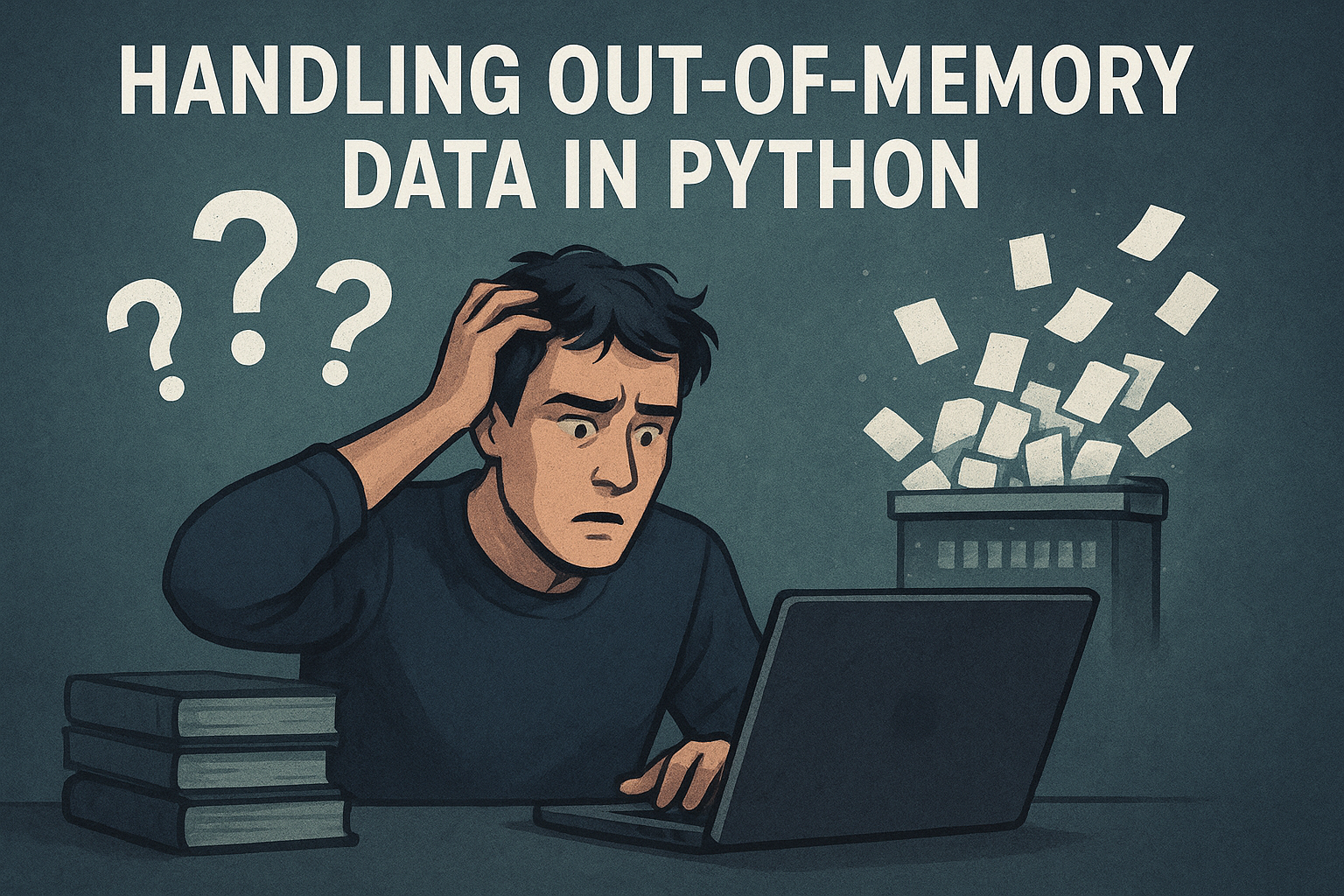

A Practical Guide to Handling Out-of-Memory Data in Python

Image by Editor

Introduction

These days, it is not uncommon to come across datasets that are too large to fit into random access memory (RAM), especially when working on advanced data analysis projects at scale, managing streaming data generated at high velocity, or building large machine learning models. Take, for instance, trying to load a 100 GB dataset from a CSV file into a Pandas DataFrame. In all these situations, memory limitations can interrupt entire data workflows, sometimes with costly consequences. This problem, known as Out-of-Memory — or OOM for short — directly impacts a system’s scalability, efficiency, and cost.

This article provides an overview of some practical techniques and strategies to navigate the OOM problem in Python-based projects, providing a “taste test” of various tools to help data scientists and developers work fluently with datasets that cannot fit into memory by processing data in chunks, replacing RAM with disk, or using distributed computing across several machines.

“Taste-Testing” of Strategies for Dealing with OOM Data

This tour of strategies and techniques for handling OOM issues will use the 100K customers dataset, a version of which is available here. While this is not a truly massive dataset, its size (100K instances) will be enough to clearly illustrate the techniques covered.

Data Chunking

The first strategy can be applied “on the fly” while the dataset is being read and loaded, and it consists of partitioning it into chunks. In Pandas, we can do this by using the chunksize argument in the read_csv() function, specifying the number of instances per chunk.

|

import pandas as pd

url = “https://raw.githubusercontent.com/gakudo-ai/open-datasets/refs/heads/main/customers-100000.csv” reader = pd.read_csv(url, chunksize=30000)

for i, chunk in enumerate(reader): print(f“Chunk {i}: {chunk.shape}”) |

Chunking can be an effective method to prevent OOM issues for datasets stored in CSV files with a simple structure, albeit not suitable when the format is more complex, e.g., instances have dependencies or nested JSON entities.

Using Dask for Parallel DataFrames and Lazy Computation

To almost seamlessly scale Pandas-like data workflows, Dask is a great choice: this library leverages parallel and lazy computation on large datasets, keeping its logic similar to standalone Pandas to a great extent.

This example applies the intermediate steps of using requests to locally download the CSV file before reading it, thus preventing possible server-side transfer problems related to aspects like encoding.

|

import dask.dataframe as dd import requests

url = “https://raw.githubusercontent.com/gakudo-ai/open-datasets/refs/heads/main/customers-100000.csv” local_filename = “customers-100000.csv”

# The CSV file is locally downloaded before reading it into a Dask DataFrame response = requests.get(url) response.raise_for_status() # Raise an exception for bad status codes with open(local_filename, ‘wb’) as f: f.write(response.content)

df = dd.read_csv(local_filename) df[df[“Country”] == “Spain”].head() |

When using Dask, it is important to directly read the data from the file using this library, rather than trying pd.read_csv(). Otherwise, all the data would be loaded into memory, which is precisely what we are trying to avoid.

Fast and Efficient Data Management with Polars

Polars is another library — with its core written in Rust — that can help efficiently manage limited memory when working with large datasets. It is more automated and agile than chunking, being a great choice for single-machine settings, but lacking Dask’s distributed computing capabilities.

This code snippet shows how to load a large dataset and lazily perform a query involving some filtering. Notice the use of the collect() function at the end of the query instruction to trigger its execution and obtain the final result before printing it on screen.

|

import polars as pl

url = “https://raw.githubusercontent.com/gakudo-ai/open-datasets/refs/heads/main/customers-100000.csv” df = pl.read_csv(url) lazy_result = df.lazy().filter(pl.col(“Country”) == “France”).select(“First Name”, “Email”).collect() print(lazy_result) |

SQL Querying via Pandas and sqlite3

If you need to repeatedly query subsets from a very large dataset file without constant data reloading, and you are familiar with the SQL language, this might be another attractive strategy to optimize memory usage. This method is great for exploratory filtering and selective data loading, although for performing the computations underlying data processing, Dask would be a better choice.

This example shows how to use sqlite3 and Pandas’ chunking capability to load data into an SQL database incrementally. After populating the database, we can perform a simple query that filters for Spanish customers, all without loading the entire dataset into a single DataFrame at once.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 |

import pandas as pd import sqlite3

url = “https://raw.githubusercontent.com/gakudo-ai/open-datasets/refs/heads/main/customers-100000.csv”

# Create an in-memory SQLite database connection conn = sqlite3.connect(“:memory:”)

# Read the CSV in chunks and append each chunk to the SQL table reader = pd.read_csv(url, chunksize=10000) for i, chunk in enumerate(reader): # For the first chunk, create the table. For subsequent chunks, append. if_exists_strategy = ‘replace’ if i == 0 else ‘append’ chunk.to_sql(“customers”, conn, if_exists=if_exists_strategy, index=False)

# Now, query the database without having loaded the entire file at once df = pd.read_sql_query(“SELECT * FROM customers WHERE Country = ‘Spain'”, conn) print(df.head())

conn.close() |

Bear in mind that for deeper analytics on very large datasets, this method may be comparatively slower than others.

Wrapping Up

In this article, we presented four different strategies and techniques to prevent the well-known out-of-memory (OOM) problem that may arise when handling very large datasets in constrained memory settings. Choosing one strategy or another largely depends on being familiar with their strengths and tradeoffs. To wrap up, we provide a succinct table that may help you choose the right one:

| Feature | Description |

|---|---|

| Pandas Chunking | Suitable for reading large CSV files in manageable parts. Full control over memory usage with minimal setup, but manual logic is needed for aggregation and merging. |

| Dask DataFrame | Dask scales DataFrame-based workflows to larger-than-memory data based on lazy and parallel processing. Great when high-level operations across full datasets are needed in pipelines. |

| Polars (Lazy Mode) | A memory-efficient, fast alternative to Dask with automatic query optimization. Ideal for single-machine workflows with large tabular data. |

| SQLite (via Pandas) | Optimal for querying large dataset files stored on disk without loading them into memory. Ideal for repeated filtering or structured access using SQL syntax, but may be slow. |