Feature Scaling in Practice: What Works and What Doesn’t

Introduction

In machine learning, the difference between a high-performing model and one that struggles often comes down to small details. One of the most commonly overlooked preprocessing steps is feature scaling. While it may seem minor, how you scale data can affect model accuracy, training speed, and stability. However, not all scaling methods are equally effective in every scenario. Some techniques improve performance and keep features balanced, while others may unintentionally distort the underlying relationships in the data.

This article explores what works in practice when it comes to feature scaling and what does not.

What is Feature Scaling?

Feature scaling is a data preprocessing technique used in machine learning to normalize or standardize the range of independent variables (features).

Since features in a dataset may have very different units and scales (e.g., age in years vs. income in dollars), models that rely on distance or gradient calculations can be biased toward features with larger numeric ranges. Feature scaling puts features on comparable scales so that no single feature dominates simply because of its units.
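To make the age-versus-income example concrete, here is a minimal sketch using only the Python standard library. The three people and the feature ranges are made up for illustration and do not come from any real dataset.

```python
import math

# Hypothetical people described as (age in years, income in dollars).
a = (25, 50_000)
b = (60, 50_500)  # very different age, similar income
c = (26, 52_000)  # nearly the same age, somewhat different income

# Raw Euclidean distances: the income axis swamps the age axis.
raw_ab = math.dist(a, b)
raw_ac = math.dist(a, c)

# Assumed feature ranges, chosen only for this illustration.
AGE_RANGE = (18, 80)
INCOME_RANGE = (20_000, 120_000)


def min_max(value, lo, hi):
    """Rescale a value from [lo, hi] to [0, 1]."""
    return (value - lo) / (hi - lo)


def scale(person):
    """Put both features of one person on a [0, 1] scale."""
    age, income = person
    return (min_max(age, *AGE_RANGE), min_max(income, *INCOME_RANGE))


scaled_ab = math.dist(scale(a), scale(b))
scaled_ac = math.dist(scale(a), scale(c))

print(f"raw:    d(a,b)={raw_ab:.1f}  d(a,c)={raw_ac:.1f}")
print(f"scaled: d(a,b)={scaled_ab:.3f}  d(a,c)={scaled_ac:.3f}")
```

On the raw values, `a` looks closer to `b` even though their ages differ by 35 years, because a few hundred dollars outweighs decades on the age axis. After scaling, `a` is closer to `c`, which is the neighbor most people would expect.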

Why Feature Scaling Matters

- Improves optimization: With features on comparable scales, the loss surface is less elongated, so gradient descent does not have to “zig-zag” toward the minimum

- Improves interpretability: Standardized features (mean 0, variance 1) make it easier to compare the relative importance of coefficients in linear models

- Better accuracy: Distance- and margin-based models such as k-nearest neighbors (KNN), k-means, and support vector machines (SVMs) perform more reliably with scaled features

- Faster convergence: Neural networks and other models trained with gradient-based optimizers typically need fewer iterations to reach a good solution when features are scaled

Common Feature Scaling Techniques

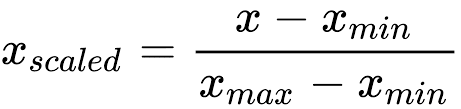

1. Normalization

Normalization, also called min-max scaling, is one of the simplest and most widely used feature scaling techniques. It rescales feature values to a fixed range, typically [0, 1], though it can be adjusted to any custom range [a, b].

Formula:

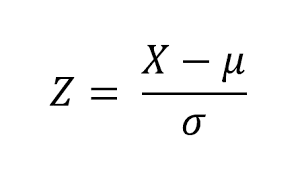
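As a rough sketch of the idea, assuming the usual min-max formula x' = (x - min) / (max - min), optionally stretched to a custom range, the function below rescales a single feature. The name `min_max_scale`, its parameters, and the sample ages are hypothetical.

```python
def min_max_scale(values, new_min=0.0, new_max=1.0):
    """Linearly rescale values so min(values) -> new_min and max(values) -> new_max."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant feature has no spread; mapping it to new_min is a
        # choice made for this sketch, not a standard rule.
        return [new_min for _ in values]
    span = new_max - new_min
    return [new_min + (v - lo) / (hi - lo) * span for v in values]


ages = [18, 25, 40, 60, 80]  # made-up sample
scaled = min_max_scale(ages)                # default range [0, 1]
symmetric = min_max_scale(ages, -1.0, 1.0)  # custom range [-1, 1]

print(scaled)
print(symmetric)
```

Because the minimum and maximum define the mapping, a single extreme value squeezes all other points into a narrow part of the range.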

2. Standardization

Standardization is a widely used scaling technique that transforms features so that they have a mean of 0 and a standard deviation of 1. Unlike min-max scaling, it does not bound values within a fixed range; instead, it centers features and scales them to unit variance.

Formula:

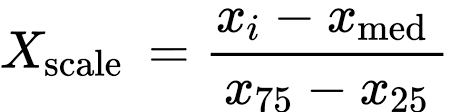
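A minimal sketch of standardization, assuming the usual z-score formula z = (x - mean) / standard deviation. The function name and the income values are made up. This version uses the population standard deviation (dividing by n), which is also what scikit-learn's `StandardScaler` does.

```python
import statistics


def standardize(values):
    """Center values at 0 and scale them to unit (population) standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)  # population std dev: divides by n, not n - 1
    if std == 0:
        # Constant feature: centering is all that can be done.
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]


incomes = [30_000, 45_000, 52_000, 61_000, 120_000]  # made-up sample
z = standardize(incomes)

print([round(v, 3) for v in z])
print("mean:", round(statistics.fmean(z), 6), "std:", round(statistics.pstdev(z), 6))
```

The output is not bounded: the largest income ends up well above 1, which is expected for standardized data.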

3. Robust Scaling

Robust Scaling is a feature scaling technique that uses the median and the interquartile range (IQR) instead of the mean and standard deviation. This makes it robust to outliers, as extreme values have less influence on the scaling process compared to min-max scaling or standardization.

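Formula:

$$x' = \frac{x - \operatorname{median}(x)}{\mathrm{IQR}(x)}, \qquad \mathrm{IQR}(x) = Q_3(x) - Q_1(x)$$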
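The sketch below applies robust scaling to one feature by subtracting the median and dividing by the IQR. The function name and the data are hypothetical, and the quartiles use the "inclusive" interpolation method from Python's `statistics` module; other quartile conventions give slightly different numbers.

```python
import statistics


def robust_scale(values):
    """Scale values as (x - median) / IQR, where IQR = Q3 - Q1."""
    # quantiles() needs at least two data points on most Python versions.
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    if iqr == 0:
        # No spread in the middle half of the data; only centering is possible.
        return [v - median for v in values]
    return [(v - median) / iqr for v in values]


# Made-up incomes with one extreme outlier at the end.
incomes = [30_000, 45_000, 52_000, 61_000, 1_000_000]
scaled = robust_scale(incomes)

print(scaled)
```

The four typical incomes stay within a couple of units of zero, while the outlier remains visibly extreme instead of compressing everything else, which is the behavior the description above attributes to this method.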

4. Max-Abs Scaling

Max-Abs scaling rescales each feature individually so that its maximum absolute value becomes 1.0, while preserving the sign of the data. This means all values are mapped into the range [-1, 1].

Formula:

$$x' = \frac{x}{\max_i |x_i|}$$
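A minimal sketch of max-abs scaling for a single feature, dividing every value by the largest absolute value. The function name and sample values are made up for illustration.

```python
def max_abs_scale(values):
    """Divide each value by the largest absolute value, keeping signs intact."""
    largest = max(abs(v) for v in values)
    if largest == 0:
        # All values are zero; nothing to rescale.
        return [0.0 for _ in values]
    return [v / largest for v in values]


changes = [-40.0, -10.0, 0.0, 5.0, 20.0]  # made-up signed feature
scaled = max_abs_scale(changes)

print(scaled)
```

Since the data is only divided and never shifted, zeros stay zero. That property is one reason this method is often chosen for sparse data.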

Limitations of Feature Scaling

- Not always necessary: Tree-based models are largely insensitive to feature scaling, so applying normalization or standardization in these cases adds computation without improving results

- Loss of interpretability: Scaling can make raw feature values harder to interpret, which can complicate communication with non-technical stakeholders

- Method-dependent: Different scaling techniques can yield different results depending on the algorithm and dataset, and an ill-suited choice can degrade performance
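The first point can be illustrated with a toy example. A tree split only asks whether a value falls below a threshold, so any scaling that preserves the ordering of values leaves the resulting partition unchanged. The sketch below uses a hypothetical one-level "stump" that counts misclassified rows; real tree learners use impurity measures such as Gini, so treat this as an illustration of the idea rather than an actual tree implementation.

```python
def best_threshold_partition(xs, labels):
    """Return the row indices sent left by the best single-threshold split.

    "Best" means fewest misclassified rows when each side predicts its
    majority label (binary 0/1 labels); ties keep the earliest cut.
    """
    order = sorted(range(len(xs)), key=lambda i: xs[i])
    best_errors, best_left = None, None
    for cut in range(1, len(order)):
        if xs[order[cut - 1]] == xs[order[cut]]:
            continue  # no threshold can separate equal values
        left, right = order[:cut], order[cut:]
        errors = 0
        for side in (left, right):
            ones = sum(labels[i] for i in side)
            errors += min(ones, len(side) - ones)
        if best_errors is None or errors < best_errors:
            best_errors, best_left = errors, frozenset(left)
    return best_left


# Made-up feature and binary labels.
incomes = [20_000, 35_000, 50_000, 80_000, 120_000]
labels = [0, 0, 0, 1, 1]

lo, hi = min(incomes), max(incomes)
incomes_scaled = [(v - lo) / (hi - lo) for v in incomes]  # min-max to [0, 1]

raw_partition = best_threshold_partition(incomes, labels)
scaled_partition = best_threshold_partition(incomes_scaled, labels)

print(sorted(raw_partition), sorted(scaled_partition))
```

Both calls send the same rows to the left branch; only the numeric value of the threshold would differ.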

Conclusion

Feature scaling is a critical preprocessing step that can improve the performance of machine learning models, but its effectiveness depends on the algorithm and the data. Models that rely on distances or gradient descent often require scaling, while tree-based methods usually do not benefit from it. Always fit your scaler on the training data only (or within each fold for cross-validation and time series) and apply it to validation and test sets to avoid data leakage. When applied carefully and tested across different approaches, feature scaling can lead to faster convergence, greater stability, and more reliable results.
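As a closing illustration of the leakage point, here is a minimal sketch of fitting a scaler on training data only and reusing its statistics on the test set. The `StandardScalerSketch` class and the data are hypothetical; in scikit-learn the same pattern is calling `fit` on the training split and `transform` on the others, or wrapping the scaler in a `Pipeline` so that each cross-validation fold is handled automatically.

```python
import statistics


class StandardScalerSketch:
    """Toy standardizer that remembers statistics learned from training data."""

    def fit(self, train_values):
        self.mean_ = statistics.fmean(train_values)
        # Fall back to 1.0 for a constant feature to avoid dividing by zero.
        self.std_ = statistics.pstdev(train_values) or 1.0
        return self

    def transform(self, values):
        # Always uses the stored training statistics, never those of `values`.
        return [(v - self.mean_) / self.std_ for v in values]


train = [10.0, 12.0, 14.0, 16.0, 18.0]  # made-up training feature
test = [20.0, 30.0]                     # made-up held-out feature

scaler = StandardScalerSketch().fit(train)  # statistics come from train only
train_scaled = scaler.transform(train)
test_scaled = scaler.transform(test)        # test data never influences the fit

print(train_scaled)
print(test_scaled)
```

The scaled test values fall outside the range seen in training, which is fine: it reflects what the model would encounter on genuinely new data. Fitting the scaler on the combined data would hide that shift and leak test-set information into training.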