Here’s a statistic that should raise the eyebrows of every SEO professional: 92-94% of Google AI Mode searches were zero-click searches. Naturally, traffic drops are being widely reported and predicted.

This makes visibility in AI search critical for brands. If your brand isn’t being mentioned or clearly cited, it could rapidly become invisible.

The thing is, AI search platforms and LLM providers operate their own crawlers, and these bots have different requirements from Google or other search engines. They won’t wait for your JavaScript to load, won’t render your single-page application, and certainly won’t tolerate your 4MB hero image.

Therefore, if your site isn’t in top shape and AI-ready, you stand to lose far more than just rankings. Here’s what you can do to prevent this.

Reasons That Sites Aren’t Crawled By AI Bots

Let’s start with an honest reality: parts of your site are likely skipped entirely by AI search crawlers. That’s just how they’re programmed.

When the crawlers behind ChatGPT, Claude, or Perplexity encounter your website, they’re not browsing like humans. They arrive at your URL, grab the raw HTML, and make instant decisions about whether your content will be processed.

Some Technical Truths About AI Crawlers

JavaScript gets skipped: If you rely on client-side rendering, you might as well not exist to AI crawlers. Most of them don’t execute JavaScript, wait for DOM manipulation, or scroll to trigger lazy loading. They see an empty shell and move on.
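One rough way to spot this problem is to check whether a page’s raw HTML is mostly an empty shell plus script tags. The Python sketch below is a heuristic, not a description of how any AI crawler actually decides; the function name `looks_like_empty_shell` and the 200-character threshold are illustrative assumptions.

```python
from html.parser import HTMLParser


class _ShellCounter(HTMLParser):
    """Counts visible text characters and <script> tags in raw HTML."""

    def __init__(self) -> None:
        super().__init__()
        self.script_tags = 0
        self.text_chars = 0
        self._skip_depth = 0  # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag == "script":
            self.script_tags += 1
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Only count text a non-rendering bot could read directly.
        if not self._skip_depth:
            self.text_chars += len(data.strip())


def looks_like_empty_shell(html: str, min_text_chars: int = 200) -> bool:
    """Hypothetical heuristic: little visible text plus scripts suggests client-side rendering."""
    counter = _ShellCounter()
    counter.feed(html)
    counter.close()
    return counter.text_chars < min_text_chars and counter.script_tags > 0
```

A typical single-page-app shell (a title, an empty `<div id="root">`, and a bundle script) is flagged, while a server-rendered page with real copy in the HTML is not.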

Bloat is penalized: Sites that need hundreds of requests just to display core content risk being systematically ignored. AI crawlers are built for efficiency, not patience.

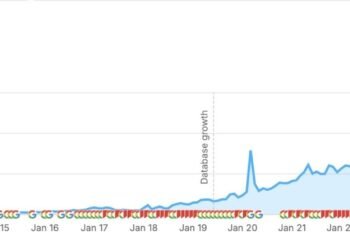

Caching catastrophes can happen: If your website’s caching isn’t set up properly, AI bots trying to read content can overload your servers. AI crawlers often work in bursts across many pages, so servers can get overwhelmed and slow down or even crash. The bots? They simply move on.
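Caching rendered pages means a burst of crawler requests is served from memory instead of re-rendering every page. In production this is usually handled by a CDN or reverse proxy; the Python sketch below only illustrates the idea, and `TTLPageCache` with its 300-second default TTL is a hypothetical choice.

```python
import time
from typing import Callable, Dict, Tuple


class TTLPageCache:
    """Hypothetical in-memory cache: serves a rendered page until it is older than ttl_seconds."""

    def __init__(
        self,
        render: Callable[[str], str],
        ttl_seconds: float = 300.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._render = render          # the expensive page-rendering function
        self._ttl = ttl_seconds
        self._clock = clock            # injectable so expiry can be tested
        self._store: Dict[str, Tuple[float, str]] = {}
        self.renders = 0               # how many times the expensive render actually ran

    def get(self, path: str) -> str:
        now = self._clock()
        hit = self._store.get(path)
        if hit is not None and now - hit[0] < self._ttl:
            return hit[1]              # fresh copy: no render needed
        html = self._render(path)
        self.renders += 1
        self._store[path] = (now, html)
        return html
```

With this in place, fifty back-to-back bot requests for the same path trigger a single render until the TTL expires.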

Quick tip: Load your top 20 pages with JavaScript disabled. What you see is close to what AI crawlers see.

Why Traditional SEO Health Checks Miss the Mark

Traditional site health audits focus on Google’s crawling behavior and user experience metrics. But LLMs operate under entirely different constraints and expectations. Here’s where traditional checks miss the mark:

Crawlability vs. Readability

Google’s crawlers are sophisticated. They can execute JavaScript, wait for content to load, and parse complex DOM structures. LLMs? They grab HTML and move on. If your content isn’t immediately extractable in clean, semantic markup, it’s invisible.

Performance vs. Parseability

While traditional SEO emphasizes Core Web Vitals for user experience, LLM optimization depends on how quickly and cleanly an AI system can extract meaningful information from your page source.
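A crude way to compare pages on parseability is the share of the HTML payload that is actual visible text. The sketch below computes that ratio with Python’s standard `html.parser`; `parseability_report` is a hypothetical helper, and the ratio is a rough proxy rather than a metric any AI platform is known to use.

```python
from html.parser import HTMLParser


class _VisibleText(HTMLParser):
    """Collects text outside <script> and <style> elements."""

    def __init__(self) -> None:
        super().__init__()
        self.parts = []
        self._skip = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip and data.strip():
            self.parts.append(data.strip())


def parseability_report(html: str) -> dict:
    """Hypothetical helper: payload size and the fraction of it that is readable text."""
    parser = _VisibleText()
    parser.feed(html)
    parser.close()
    text = " ".join(parser.parts)
    html_bytes = len(html.encode("utf-8"))
    text_bytes = len(text.encode("utf-8"))
    return {
        "html_bytes": html_bytes,
        "text_bytes": text_bytes,
        "text_ratio": round(text_bytes / html_bytes, 3) if html_bytes else 0.0,
    }
```

Comparing the ratio before and after a cleanup (fewer inline scripts, less wrapper markup) gives a quick sense of whether the page source got easier to extract.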

Structure vs. Story

Google understands context through links, user behavior, and content relationships. LLMs thrive on explicit structure through schema markup, clear hierarchies, and semantic HTML.

They use this not only to understand what you’re saying, but also to cross-reference it with wider sources and judge how authoritative you are in saying it.

Your AI Crawler Checklist: The FAST Framework

Our FAST framework helps teams run a quick sense check of their readiness:

F – Fetchable

Can an AI system retrieve and read your HTML without rendering?

- Implement server-side rendering (SSR) for all critical content

- Ensure core information is available in the initial HTML response

- Test pages with JavaScript off to see what bots actually receive
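To see what a bot receives without rendering, you can request the page over plain HTTP and check that your key facts appear in the initial response. This Python sketch uses only the standard library; the user-agent string and the helper names `fetch_raw_html` and `missing_phrases` are assumptions for illustration, and the check is a simple case-insensitive substring match.

```python
import urllib.request


def fetch_raw_html(url: str, user_agent: str = "hypothetical-ai-audit/0.1", timeout: float = 10.0) -> str:
    """Fetch only the initial HTML response; no JavaScript is executed."""
    req = urllib.request.Request(url, headers={"User-Agent": user_agent})
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")


def missing_phrases(html: str, phrases: list[str]) -> list[str]:
    """Return the key phrases that do NOT appear in the raw HTML (case-insensitive)."""
    lowered = html.lower()
    return [p for p in phrases if p.lower() not in lowered]
```

For example, `missing_phrases(fetch_raw_html("https://www.example.com/pricing"), ["Pro plan", "$49"])` returns whichever phrases are absent from the unrendered HTML; anything listed there is content a non-rendering bot never sees.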

A – Accessible

Is your key content understandable without executing scripts?

- Use semantic HTML5 elements (article, section, header, etc.)

- Structure content with proper heading hierarchies (H1-H6)

- Include alt text, captions, and descriptive markup
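Heading structure and alt text can be checked straight from the raw HTML. The sketch below flags skipped heading levels, a missing or duplicated `<h1>`, and `<img>` tags with no `alt` attribute. Treating "exactly one `<h1>`" as a rule is a common convention rather than an HTML requirement, and `audit_outline` is a hypothetical helper.

```python
from html.parser import HTMLParser


class _OutlineAudit(HTMLParser):
    def __init__(self) -> None:
        super().__init__()
        self.headings = []          # heading levels (1-6) in document order
        self.imgs_missing_alt = 0

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.headings.append(int(tag[1]))
        elif tag == "img" and dict(attrs).get("alt") is None:
            # alt="" is valid for decorative images, so only a missing attribute counts.
            self.imgs_missing_alt += 1


def audit_outline(html: str) -> list[str]:
    """Hypothetical helper: list heading-hierarchy and alt-text issues."""
    audit = _OutlineAudit()
    audit.feed(html)
    audit.close()
    issues = []
    h1_count = audit.headings.count(1)
    if h1_count != 1:
        issues.append(f"expected exactly one <h1>, found {h1_count}")
    for prev, cur in zip(audit.headings, audit.headings[1:]):
        if cur > prev + 1:
            issues.append(f"heading level skips from h{prev} to h{cur}")
    if audit.imgs_missing_alt:
        issues.append(f"{audit.imgs_missing_alt} <img> without an alt attribute")
    return issues
```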

S – Structured

Are you using schema, semantic tags, and clear hierarchies?

- Implement FAQ schema for common questions

- Use Product, Article, or Organization schema as suitable

- Create clear content layers with definition boxes and bulleted answers
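FAQ schema is usually embedded as JSON-LD. This sketch builds a schema.org `FAQPage` block from question and answer pairs; `faq_jsonld` is a hypothetical helper, and it’s worth running the output through a structured data validator before deploying it.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Hypothetical helper: wrap (question, answer) pairs in a schema.org FAQPage script tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    # Escape "</" so answer text can never close the <script> element early;
    # "\/" is a valid JSON escape for "/".
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">' + body + "</script>"
```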

T – Trim

Are you sending what’s needed, no bloat, no noise?

- Minimize JavaScript dependencies for content pages

- Compress assets and optimize images

- Clean up unnecessary tracking scripts and third-party integrations
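Third-party scripts are a common source of bloat. This sketch lists the external hosts serving scripts referenced in a page’s raw HTML and how many scripts each contributes. `third_party_scripts` is hypothetical, and it only sees scripts referenced directly in the HTML, not ones injected later by tag managers.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class _ScriptSources(HTMLParser):
    def __init__(self) -> None:
        super().__init__()
        self.srcs = []

    def handle_starttag(self, tag, attrs):
        if tag == "script":
            src = dict(attrs).get("src")
            if src:
                self.srcs.append(src)


def third_party_scripts(html: str, own_host: str) -> dict[str, int]:
    """Hypothetical helper: count <script src> references per external host."""
    parser = _ScriptSources()
    parser.feed(html)
    parser.close()
    counts: dict[str, int] = {}
    for src in parser.srcs:
        host = urlparse(src).hostname  # None for relative URLs like /app.js
        if host and host != own_host and not host.endswith("." + own_host):
            counts[host] = counts.get(host, 0) + 1
    return counts
```

Hosts with several scripts on a content page are good candidates for the cleanup described above.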

Why Being Ignored Is A Big Deal For Brands

While it’s a subtle technical shift, it could prove transformational for business outcomes. We touched on the visibility factor earlier, but the risks run deeper.

Market Share at Scale

When ChatGPT summarizes your competitor but skips your brand, that’s a decision lost before users even enter your funnel. With AI search growing rapidly, and predicted to eclipse traditional search engines by 2028, this is business-critical.

Potential Brand Mistrust

To use the same hypothetical as above: if your site isn’t accessible, AI will look to third-party sources to fill the information gap. When key details like pricing or product features are out of your control and represented inaccurately, you risk losing buyers’ trust.

Conversion Velocity Changes

Users increasingly act on AI-summarized information. Our recent study found that LLM traffic is 4.4x more valuable than traffic from traditional search engines. If your site is optimized and gives users the information they need to make a decision, they land on your site ready to convert. If not? They go elsewhere.

Structural Ways To Build Visibility

After checking that your key brand and product content is crawlable, it’s time to look at how it’s structured and whether it delivers trust signals.

Entity-First Content Architecture

Structure your content around topics as clear entities (people, places, product ranges, concepts) rather than just keywords. LLMs navigate via entity relationships, not just page-to-page links.

Check out Backlinko’s LLM visibility research for a deeper dive into content structuring.

AI-Optimized Information Layers

Create content that works at multiple levels:

- Surface level: Quick facts and definitions

- Contextual level: Detailed explanations and examples

- Authority level: Citations, data sources, and expert commentary

Trust Signal Engineering

Beyond traditional backlinks, focus on:

- Structured citations and references

- Expert author markup that links to bio pages

- Clear content attribution and sourcing

- Consistent entity representation across platforms
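Author and entity markup can also be expressed as JSON-LD. The sketch below builds a schema.org `Article` whose `author` links to a bio page and whose `publisher` lists the organization’s profiles elsewhere via `sameAs`, which is one way to keep entity representation consistent across platforms. The helper name and all example URLs are hypothetical.

```python
import json


def article_jsonld(
    headline: str,
    author_name: str,
    author_bio_url: str,
    org_name: str,
    org_url: str,
    org_profiles: list[str],
) -> str:
    """Hypothetical helper: Article JSON-LD with author and publisher entities."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        # The author entity points at a dedicated bio page.
        "author": {"@type": "Person", "name": author_name, "url": author_bio_url},
        # sameAs ties the organization to its profiles on other platforms.
        "publisher": {
            "@type": "Organization",
            "name": org_name,
            "url": org_url,
            "sameAs": org_profiles,
        },
    }
    return json.dumps(data, indent=2)
```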

Your 3-Step AI Search Readiness Plan

Completed the FAST framework check? Here are some initial steps to optimize your site for AI crawlers:

Step 1 – Assessment

- Audit your top 20 pages with HTML-only crawling

- Test content extraction with browser JavaScript disabled

- Benchmark your current AI citation and mention rates using AI search solutions like AI Optimization by Semrush Enterprise

Step 2 – Quick Wins

- Clean up robots.txt so it doesn’t block the AI crawlers you want to reach your content, and implement or update llms.txt

- Add FAQ schema to your most important pages

- Aim for page load times under 2 seconds (also very important for conversion rates)
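Before working on llms.txt, it’s worth confirming that robots.txt isn’t blocking the AI crawlers you want. Python’s standard `urllib.robotparser` can test this offline. The sketch assumes the user-agent tokens `GPTBot`, `ClaudeBot`, and `PerplexityBot`; check each vendor’s documentation for current names. Python’s parser uses simple prefix matching, so it may not interpret every rule (for example, wildcard patterns) exactly as a given crawler does.

```python
from urllib.robotparser import RobotFileParser

# Assumed crawler tokens; verify against each vendor's published documentation.
AI_CRAWLERS = ("GPTBot", "ClaudeBot", "PerplexityBot")


def ai_crawler_access(robots_txt: str, url: str) -> dict[str, bool]:
    """Report whether each assumed AI crawler may fetch `url` under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {bot: parser.can_fetch(bot, url) for bot in AI_CRAWLERS}
```

Feeding it your live robots.txt (for example, read from disk or fetched once) and a few key URLs shows at a glance which bots are shut out.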

Step 3 – Structural Improvements

- Implement server-side rendering for critical content paths

- Create clear content hierarchies with semantic HTML

- Add structured data for products, articles, or services
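Server-side rendering means the server sends complete HTML, so a bot that never runs JavaScript still sees the content. This minimal sketch uses Python’s built-in `http.server` purely for illustration (it is not a production server); the product data, the `/products/` URL pattern, and the names `render_product_page` and `ProductHandler` are hypothetical.

```python
import html
from http.server import BaseHTTPRequestHandler
from typing import Optional

# Hypothetical product data; in practice this would come from a database or CMS.
PRODUCTS = {
    "widget-pro": {
        "name": "Widget Pro",
        "price": "$49/month",
        "features": ["SSO", "Audit logs", "API access"],
    },
}


def render_product_page(slug: str) -> Optional[str]:
    """Build the full page on the server, using semantic elements, with all content in the HTML."""
    product = PRODUCTS.get(slug)
    if product is None:
        return None
    items = "".join(f"<li>{html.escape(feat)}</li>" for feat in product["features"])
    name = html.escape(product["name"])
    return (
        '<!doctype html><html lang="en"><head><meta charset="utf-8">'
        f"<title>{name}</title></head><body><main><article>"
        f"<h1>{name}</h1>"
        f"<p>Price: {html.escape(product['price'])}</p>"
        f"<section><h2>Features</h2><ul>{items}</ul></section>"
        "</article></main></body></html>"
    )


class ProductHandler(BaseHTTPRequestHandler):
    """Serves /products/<slug> as fully rendered HTML."""

    def do_GET(self):
        prefix = "/products/"
        path = self.path.split("?", 1)[0]  # ignore any query string
        page = render_product_page(path[len(prefix):]) if path.startswith(prefix) else None
        if page is None:
            self.send_error(404)
            return
        body = page.encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)
```

To try it locally, run `HTTPServer(("127.0.0.1", 8000), ProductHandler).serve_forever()` (importing `HTTPServer` from `http.server`) and request `/products/widget-pro` with JavaScript disabled; the name, price, and features are all present in the first response.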

Seize The Site Health Opportunity

The window for proactive site health optimization is closing. Start with the FAST framework audit and identify your biggest technical gaps. Remember: this isn’t about perfection; it’s about being better prepared than your competition.

Brands that master LLM-ready site health today will be the brands that define tomorrow’s digital landscape. This shift is happening right now. Will you be ready first, or playing catch-up?