In this article, you will learn five practical cross-validation patterns that make time series evaluation realistic, leak-resistant, and deployment-ready.

Topics we will cover include:

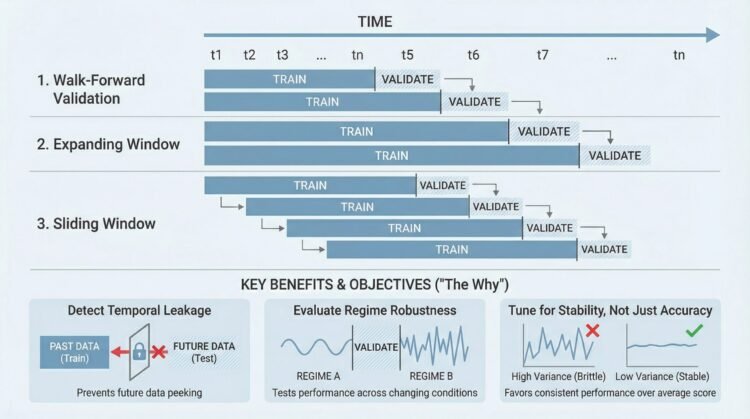

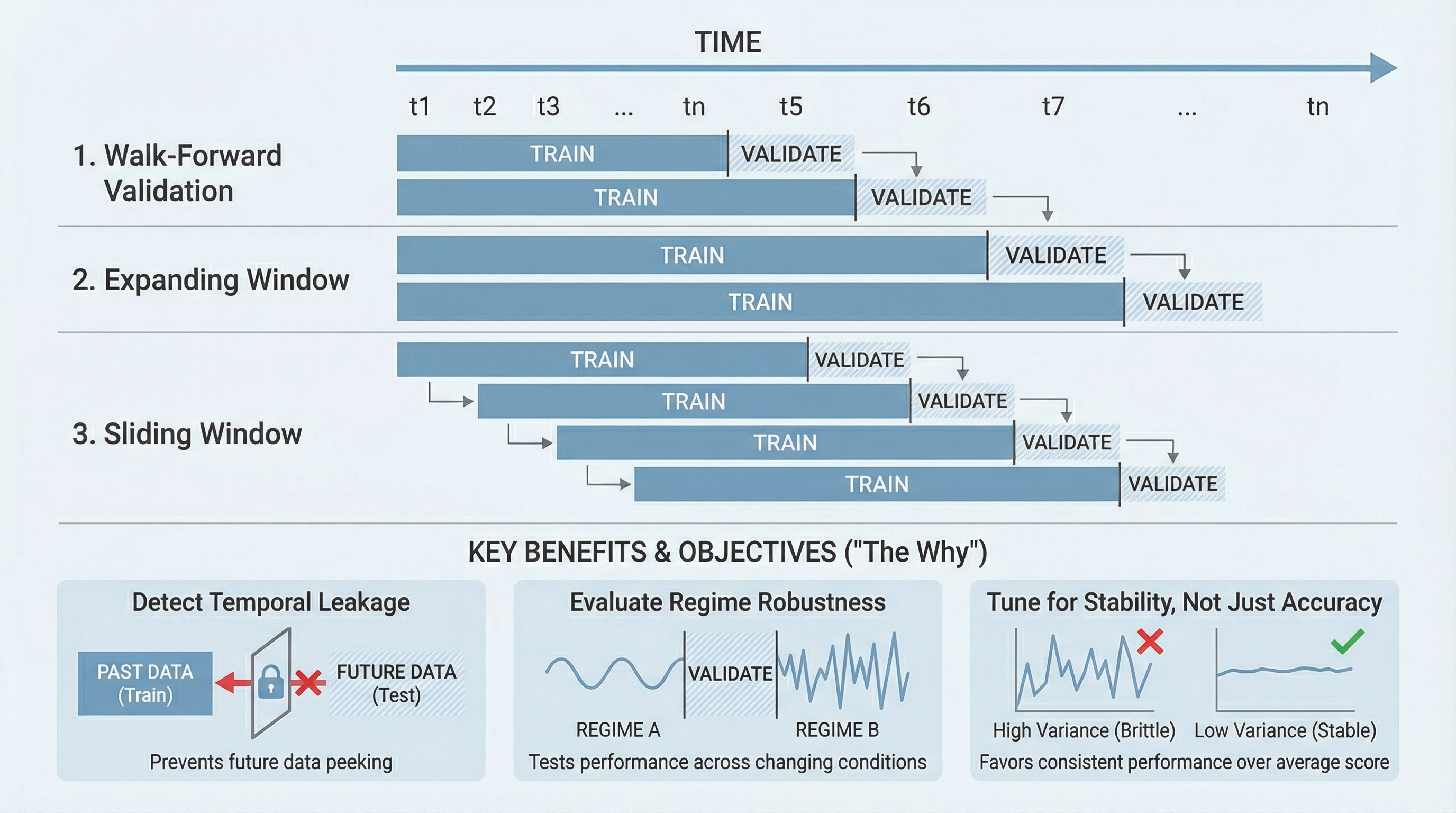

- Using walk-forward validation to mirror real production behavior.

- Comparing expanding versus sliding windows to choose the right memory depth.

- Detecting temporal leakage, testing robustness across regimes, and tuning for stability, not just peak accuracy.

Let’s explore these techniques.

5 Ways to Use Cross-Validation to Improve Time Series Models

Image by Editor

Applying Cross-Validation to Time Series

Time series modeling has a reputation for being fragile. A model that looks excellent in backtesting can fall apart the moment it meets new data. Much of that fragility comes from how validation is handled.

Random splits, default cross-validation, and one-off holdout sets quietly break the temporal structure that time series depend on. Cross-validation is not the enemy here, but it has to be used differently.

When applied with respect for time, it becomes one of the most powerful tools you have for diagnosing leakage, improving generalization, and understanding how your model behaves as conditions change. Used well, it does more than score accuracy — it forces your model to earn trust under realistic constraints.

Using Walk-Forward Validation to Simulate Real Deployment

Walk-forward validation is the closest thing to a dress rehearsal for a production time series model. Instead of training once and testing once, the model is retrained repeatedly as time advances. Each split respects causality, training only on past data and testing on the immediate future. This matters because time series models rarely fail due to lack of historical signal; they fail because the future does not behave like the past.

This approach exposes how sensitive your model is to small shifts in data. A model that performs well in early folds but degrades later is signaling regime dependence — an insight that is invisible in a single holdout split. Walk-forward validation also surfaces whether retraining frequency matters. Some models improve dramatically when updated often, while others barely change.

There is also a practical benefit: walk-forward validation forces you to codify your retraining logic early. Feature generation, scaling, and lag construction must all work incrementally. If something breaks when the window moves forward, it would have broken in production, too. Validation becomes a way to debug the entire pipeline, not just the estimator.
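To make the retrain-as-time-advances loop concrete, here is a minimal sketch in plain Python. The helper names (`walk_forward_splits`, `fit_mean_model`), the toy series, and the choice of a last-three-values mean as the "model" are all assumptions made for illustration; in practice you would plug in your own estimator, and a library splitter such as scikit-learn's `TimeSeriesSplit` can generate similar folds.

```python
from statistics import mean


def walk_forward_splits(n_samples, initial_train, horizon):
    """Yield (train_indices, test_indices) pairs that never look ahead."""
    start = initial_train
    while start + horizon <= n_samples:
        # Train on everything before `start`, test on the next `horizon` points.
        yield range(0, start), range(start, start + horizon)
        start += horizon


def fit_mean_model(history):
    """Hypothetical stand-in for a real estimator: predicts the mean of the last 3 values."""
    return mean(history[-3:])


# Toy series, made up for illustration: a flat level followed by a jump.
series = [10, 11, 10, 12, 11, 10, 20, 21, 20, 22, 21, 20]

fold_errors = []
for train_idx, test_idx in walk_forward_splits(len(series), initial_train=4, horizon=2):
    train = [series[i] for i in train_idx]
    test = [series[i] for i in test_idx]
    prediction = fit_mean_model(train)  # "retrain" on all data seen so far
    mae = mean(abs(y - prediction) for y in test)
    fold_errors.append(round(float(mae), 2))

print(fold_errors)
```

On this toy series, the error spikes in the fold that first meets the level shift and then shrinks again as retraining absorbs the new level, which is the kind of fold-by-fold story a single holdout split cannot tell.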

Comparing Expanding and Sliding Windows to Test Memory Depth

One of the quiet assumptions in time series modeling is how much history the model should remember. Expanding windows keep all past data and grow over time. Sliding windows discard older observations and keep the window length fixed. Cross-validation allows you to test this assumption explicitly instead of guessing.

Expanding windows tend to favor stability — they work well when long-term patterns dominate and structural breaks are rare. Sliding windows are more responsive, adapting quickly when recent behavior matters more than distant history. Neither is universally better, and the difference often shows up only when you evaluate across multiple folds.

Cross-validating both strategies reveals how your model trades bias against variance over time: longer windows give more data and lower variance, but risk bias when old observations no longer reflect current behavior. If performance improves with shorter windows, the system is telling you that older data may be stale and actively hurting the fit. If longer windows consistently win, the signal is likely persistent. This comparison also informs feature engineering choices, especially for lag depth and rolling statistics.
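The comparison can be sketched with a single split generator that switches between the two behaviors. Everything here is illustrative: `window_splits`, `mean_forecast_mae`, the toy series, and the training-mean "model" are assumptions, not a prescribed implementation. If you use scikit-learn, the `max_train_size` argument of `TimeSeriesSplit` plays a similar role to `max_train` below.

```python
from statistics import mean


def window_splits(n_samples, initial_train, horizon, max_train=None):
    """Expanding window when max_train is None, sliding window otherwise."""
    start = initial_train
    while start + horizon <= n_samples:
        train_start = 0 if max_train is None else max(0, start - max_train)
        yield range(train_start, start), range(start, start + horizon)
        start += horizon


def mean_forecast_mae(series, max_train):
    """Average MAE over folds for a toy model that predicts the training mean."""
    errors = []
    for train_idx, test_idx in window_splits(len(series), 4, 2, max_train):
        prediction = mean(series[i] for i in train_idx)
        errors.append(mean(abs(series[i] - prediction) for i in test_idx))
    return float(mean(errors))


# Toy series, made up for illustration: a level shift halfway through.
series = [10, 11, 10, 12, 11, 10, 20, 21, 20, 22, 21, 20]

expanding_mae = mean_forecast_mae(series, max_train=None)  # keeps all history
sliding_mae = mean_forecast_mae(series, max_train=4)       # keeps last 4 points

print(f"expanding: {expanding_mae:.2f}  sliding: {sliding_mae:.2f}")
```

Because this toy series contains a level shift, the sliding window ends up with the lower average error. That is a property of the example, not a general result; on a series with a persistent pattern the expanding window could just as easily win.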

Using Cross-Validation to Detect Temporal Data Leakage

Temporal leakage is one of the most common reasons time series models look better than they should. It rarely comes from obvious mistakes; more often it sneaks in through feature engineering, normalization, or target-derived signals that quietly peek into the future. Cross-validation, when designed properly, is one of the best ways to catch it.

If your validation scores are both unusually high and nearly identical across folds, treat that as a warning sign, because real time series performance usually fluctuates as conditions change. Near-perfect consistency can indicate that information from the test period is bleeding into training. Walk-forward splits with strict boundaries make leakage much harder to hide.

Cross-validation also helps isolate the source of the problem. When you see a sharp performance drop after fixing the split logic or a single feature, that is strong evidence the model was leaning on future information. That feedback loop is invaluable. It shifts validation from a passive scoring step into an active diagnostic tool for pipeline integrity.
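One way to turn strict fold boundaries into an automated check is a perturbation test: change every value after a fold's cutoff and confirm that no training-period feature moves. The sketch below is one possible diagnostic rather than a standard API; the function names, the two example features, and the toy series are assumptions chosen to show a clean feature next to a leaky one.

```python
def trailing_mean(series, t, window=3):
    """Feature for time t built only from values strictly before t."""
    past = series[max(0, t - window):t]
    return sum(past) / len(past) if past else 0.0


def centered_mean(series, t, window=3):
    """Leaky feature: the centered window includes values after t."""
    half = window // 2
    chunk = series[max(0, t - half):t + half + 1]
    return sum(chunk) / len(chunk)


def leaks_across(feature_fn, series, cutoff):
    """True if any training-period feature changes when the future changes."""
    # Shift everything from the cutoff onward by an obviously large amount.
    scrambled = series[:cutoff] + [v + 1000 for v in series[cutoff:]]
    return any(
        feature_fn(series, t) != feature_fn(scrambled, t)
        for t in range(cutoff)
    )


# Toy series and fold boundaries, made up for illustration.
series = [10, 11, 10, 12, 11, 10, 20, 21, 20, 22, 21, 20]
cutoffs = [4, 6, 8, 10]

trailing_leaks = [leaks_across(trailing_mean, series, c) for c in cutoffs]
centered_leaks = [leaks_across(centered_mean, series, c) for c in cutoffs]

print("trailing:", trailing_leaks)
print("centered:", centered_leaks)
```

The trailing feature is unaffected by changes after the cutoff, while the centered one is flagged at every boundary because its last training row reads one step into the test period. The same idea extends to scalers and target encodings: fit them on the training slice only and verify that nothing in the training features depends on later rows.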

Evaluating Model Robustness Across Regime Changes

Time series rarely live in a single regime. Markets shift, user behavior evolves, sensors drift, and external shocks rewrite the rules. A single train-test split can accidentally land entirely inside one regime and give a false sense of confidence. Cross-validation spreads your evaluation across time, increasing the chance of crossing regime boundaries.

By examining fold-level performance instead of just averages, you can see how the model reacts to change. Some folds may show strong accuracy, others clear degradation. That pattern matters more than the mean score. It tells you whether the model is robust or brittle.

This perspective also guides model selection. A slightly less accurate model that degrades gracefully is often preferable to a brittle high performer. Cross-validation makes those trade-offs visible. It turns evaluation into a stress test rather than a beauty contest.
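A small helper makes the fold-level view routine. The sketch below assumes you already have one error value per fold for each candidate; the `summarize_folds` helper and the error values are hypothetical and exist only to show the shape of the comparison.

```python
from statistics import mean, pstdev


def summarize_folds(fold_errors):
    """Report the spread of per-fold errors, not just their average."""
    worst = max(fold_errors)
    return {
        "mean": mean(fold_errors),
        "best": min(fold_errors),
        "worst": worst,
        "std": pstdev(fold_errors),
        "worst_fold": fold_errors.index(worst),  # where to look for a regime change
    }


# Hypothetical per-fold MAE values for two candidate models (illustrative only).
brittle = [1.0, 1.0, 9.0, 1.0]
graceful = [3.0, 3.0, 4.0, 3.0]

for name, errors in [("brittle", brittle), ("graceful", graceful)]:
    print(name, summarize_folds(errors))
```

In this made-up example the brittle model has the slightly better mean, but its worst fold is more than twice as bad as anything the graceful model produces. The `worst_fold` index also tells you which time period to inspect when you want to understand what changed.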

Tuning Hyperparameters Based on Stability, Not Just Accuracy

Hyperparameter tuning in time series is often treated the same way as in tabular data: optimize a metric, pick the best score, move on. Cross-validation enables a more nuanced approach. Instead of asking which configuration wins on average, you can ask which one behaves consistently over time.

Some hyperparameter configurations produce high peaks and deep valleys; others deliver steady, predictable performance. Cross-validation exposes that difference. When you inspect fold-by-fold results, you can favor configurations with lower variance across folds even if the mean score is slightly worse.

This mindset aligns better with real-world deployment. Stable models are easier to monitor, retrain, and explain. Cross-validation becomes a tool for risk management, not just optimization. It helps you choose models that perform reliably when the data inevitably drifts.
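One simple way to encode this preference is to rank configurations by mean error plus a penalty on fold-to-fold variability. The scoring rule, the `risk_aversion` weight, the configuration names, and the fold errors below are all assumptions for illustration; other stability criteria, such as worst-fold error, would work just as well.

```python
from statistics import mean, pstdev


def stability_score(fold_errors, risk_aversion=1.0):
    """Mean error plus a penalty on fold-to-fold variability (lower is better)."""
    return mean(fold_errors) + risk_aversion * pstdev(fold_errors)


def pick_config(errors_by_config, risk_aversion=1.0):
    """Return the configuration name with the lowest penalized score."""
    return min(
        errors_by_config,
        key=lambda name: stability_score(errors_by_config[name], risk_aversion),
    )


# Hypothetical fold-level MAE for two hyperparameter settings (illustrative only).
errors_by_config = {
    "deep_trees": [2.0, 2.0, 8.0, 2.0],     # lower mean (3.5), one very bad fold
    "shallow_trees": [4.0, 4.0, 4.5, 3.5],  # higher mean (4.0), steady across folds
}

by_mean = pick_config(errors_by_config, risk_aversion=0.0)       # plain average
by_stability = pick_config(errors_by_config, risk_aversion=1.0)  # penalize variance

print("best by mean:", by_mean)
print("best by stability:", by_stability)
```

With the penalty switched off, the selection reduces to the usual best-average rule; turning it on lets a steadier configuration win despite a slightly worse mean. How heavily to weight variability is a judgment call that depends on how costly a bad period is in your application.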

Conclusion

Cross-validation is often misunderstood in time series work, not because it is flawed, but because it is misapplied. When time is treated as just another feature, evaluation becomes misleading. When time is respected, cross-validation turns into a powerful lens for understanding model behavior.

Walk-forward splits, window comparisons, leakage detection, regime awareness, and stability-driven tuning all emerge from the same idea: test the model the way it will actually be used. Do that consistently, and cross-validation stops being a checkbox and becomes a competitive advantage.