A recent study from Oregon State University estimated that more than 3,500 animal species are at risk of extinction because of factors including habitat alteration, overexploitation of natural resources, and climate change.

To better understand these changes and protect vulnerable wildlife, conservationists like MIT PhD student and Computer Science and Artificial Intelligence Laboratory (CSAIL) researcher Justin Kay are developing computer vision algorithms that carefully monitor animal populations. A member of the lab of MIT Department of Electrical Engineering and Computer Science assistant professor and CSAIL principal investigator Sara Beery, Kay is currently working on tracking salmon in the Pacific Northwest, where they provide crucial nutrients to predators like birds and bears, while managing the population of prey, like bugs.

With all that wildlife data, though, researchers have lots of information to sort through and many AI models to choose from to analyze it all. Kay and his colleagues at CSAIL and the University of Massachusetts Amherst are developing AI methods that make this data-crunching process much more efficient, including a new approach called “consensus-driven active model selection” (or “CODA”) that helps conservationists choose which AI model to use. Their work was named a Highlight Paper at the International Conference on Computer Vision (ICCV) in October.

That research was supported, in part, by the National Science Foundation, Natural Sciences and Engineering Research Council of Canada, and Abdul Latif Jameel Water and Food Systems Lab (J-WAFS). Here, Kay discusses this project, among other conservation efforts.

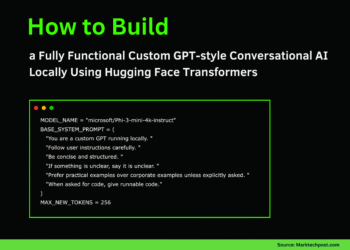

Q: In your paper, you pose the question of which AI models will perform the best on a particular dataset. With as many as 1.9 million pre-trained models available in the HuggingFace Models repository alone, how does CODA help us address that challenge?

A: Until recently, using AI for data analysis has typically meant training your own model. This requires significant effort to collect and annotate a representative training dataset, as well as iteratively train and validate models. You also need a certain technical skill set to run and modify AI training code. The way people interact with AI is changing, though — in particular, there are now millions of publicly available pre-trained models that can perform a variety of predictive tasks very well. This potentially enables people to use AI to analyze their data without developing their own model, simply by downloading an existing model with the capabilities they need. But this poses a new challenge: Which model, of the millions available, should they use to analyze their data?

Typically, answering this model selection question also requires you to spend a lot of time collecting and annotating a large dataset, albeit for testing models rather than training them. This is especially true for real applications where user needs are specific, data distributions are imbalanced and constantly changing, and model performance may be inconsistent across samples. Our goal with CODA was to substantially reduce this effort. We do this by making the data annotation process “active.” Instead of requiring users to bulk-annotate a large test dataset all at once, in active model selection we make the process interactive, guiding users to annotate the most informative data points in their raw data. This is remarkably effective, often requiring users to annotate as few as 25 examples to identify the best model from their set of candidates.
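To make the idea of an "active" annotation loop concrete, here is a minimal Python sketch. It is not CODA's actual selection rule, which the interview does not spell out. As a stand-in, it assumes a simple disagreement heuristic (vote entropy) and asks for a label on the unlabeled point where the candidate models disagree most. The names `vote_entropy`, `next_point_to_label`, and the toy predictions are hypothetical.

```python
import math
from collections import Counter


def vote_entropy(votes):
    """Shannon entropy of the class votes cast by the candidate models."""
    counts = Counter(votes)
    total = len(votes)
    return -sum((c / total) * math.log(c / total) for c in counts.values())


def next_point_to_label(predictions, labeled):
    """Return the index of the unlabeled point with the most model disagreement.

    predictions[m][i] is the class that model m predicts for point i.
    labeled is a set of indices the user has already annotated.
    """
    n_points = len(predictions[0])
    candidates = [i for i in range(n_points) if i not in labeled]
    return max(candidates, key=lambda i: vote_entropy([m[i] for m in predictions]))


# Toy data (assumed): three models, four images, classes 0..2.
preds = [
    [0, 1, 2, 1],
    [0, 1, 0, 1],
    [0, 2, 1, 1],
]
labeled = set()
first = next_point_to_label(preds, labeled)  # all three models disagree on image 2
labeled.add(first)
second = next_point_to_label(preds, labeled)
```

Points on which every model agrees carry little information about which model is better, so a loop like this spends the annotator's time where the candidates actually differ.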

We’re very excited about CODA offering a new perspective on how to best utilize human effort in the development and deployment of machine-learning (ML) systems. As AI models become more commonplace, our work emphasizes the value of focusing effort on robust evaluation pipelines, rather than solely on training.

Q: You applied the CODA method to classifying wildlife in images. Why did it perform so well, and what role can systems like this have in monitoring ecosystems in the future?

A: One key insight was that when considering a collection of candidate AI models, the consensus of all of their predictions is more informative than any individual model’s predictions. This can be seen as a sort of “wisdom of the crowd”: on average, pooling the votes of all models gives you a decent prior over what the labels of individual data points in your raw dataset should be. Our approach with CODA is based on estimating a “confusion matrix” for each AI model: given that the true label for some data point is class X, what is the probability that an individual model predicts class X, Y, or Z? This creates informative dependencies between all of the candidate models, the categories you want to label, and the unlabeled points in your dataset.
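The two ingredients described here, a consensus vote and a per-model confusion matrix, can be illustrated with a short Python sketch. It is a simplified illustration under stated assumptions, not the paper's implementation: the consensus is a plain majority vote, each confusion matrix is a smoothed count table measured against that consensus, and the function names and toy predictions are hypothetical.

```python
from collections import Counter


def consensus_labels(predictions):
    """Majority vote across models for each data point.

    predictions[m][i] is the class index that model m predicts for point i.
    Ties are broken in favor of the smallest class index.
    """
    n_points = len(predictions[0])
    labels = []
    for i in range(n_points):
        votes = Counter(model_preds[i] for model_preds in predictions)
        top = max(votes.values())
        labels.append(min(c for c, v in votes.items() if v == top))
    return labels


def confusion_matrix(reference, predicted, n_classes, smoothing=1.0):
    """Row-normalized estimate of P(predicted class | reference class).

    The smoothing term adds a pseudo-count to every cell so that rows with
    few observations do not collapse to zeros.
    """
    counts = [[smoothing] * n_classes for _ in range(n_classes)]
    for r, p in zip(reference, predicted):
        counts[r][p] += 1
    return [[c / sum(row) for c in row] for row in counts]


# Toy data (assumed): three models, five images, classes 0..2.
preds = [
    [0, 1, 2, 1, 0],
    [0, 1, 2, 2, 0],
    [0, 2, 2, 1, 1],
]
consensus = consensus_labels(preds)
matrices = [confusion_matrix(consensus, p, n_classes=3) for p in preds]
```

Here the consensus labels stand in for ground truth only as a starting point. In an active setting, each human-provided label would replace the consensus label for that point and the matrices would be re-estimated.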

Consider an example application where you are a wildlife ecologist who has just collected a dataset containing potentially hundreds of thousands of images from cameras deployed in the wild. You want to know what species are in these images, a time-consuming task that computer vision classifiers can help automate. You are trying to decide which species classification model to run on your data. If you have labeled 50 images of tigers so far, and some model has performed well on those 50 images, you can be pretty confident it will perform well on the remainder of the (currently unlabeled) images of tigers in your raw dataset as well. You also know that when that model predicts some image contains a tiger, it is likely to be correct, and therefore that any model that predicts a different label for that image is more likely to be wrong. You can use all these interdependencies to construct probabilistic estimates of each model’s confusion matrix, as well as a probability distribution over which model has the highest accuracy on the overall dataset. These design choices allow us to make more informed choices over which data points to label and ultimately are the reason why CODA performs model selection much more efficiently than past work.
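As a rough illustration of "a probability distribution over which model has the highest accuracy," the sketch below makes a deliberate simplification. Instead of a full confusion matrix per model, it assumes each model's overall accuracy has a Beta posterior given how many labeled images it got right, and it uses Monte Carlo sampling to estimate how often each model comes out on top. The function name `prob_best`, the uniform Beta(1, 1) prior, and the example counts are assumptions for illustration, not values from the paper.

```python
import random


def prob_best(correct_counts, n_labeled, n_samples=5000, seed=0):
    """Estimate P(model m has the highest accuracy) for each model m.

    correct_counts[m] is how many of the n_labeled annotated points model m
    classified correctly. Each accuracy gets a Beta(1 + correct, 1 + wrong)
    posterior, i.e. a uniform prior updated with the observed counts.
    """
    rng = random.Random(seed)
    wins = [0] * len(correct_counts)
    for _ in range(n_samples):
        # Draw one plausible accuracy per model, then record the winner.
        draws = [rng.betavariate(1 + c, 1 + n_labeled - c) for c in correct_counts]
        wins[draws.index(max(draws))] += 1
    return [w / n_samples for w in wins]


# Hypothetical results after labeling 50 images.
p = prob_best([45, 38, 30], n_labeled=50)
```

When one entry of `p` is close to 1, additional labels are unlikely to change the choice of model, which gives a natural point at which to stop annotating.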

There are also a lot of exciting possibilities for building on top of our work. We think there may be even better ways of constructing informative priors for model selection based on domain expertise — for instance, if it is already known that one model performs exceptionally well on some subset of classes or poorly on others. There are also opportunities to extend the framework to support more complex machine-learning tasks and more sophisticated probabilistic models of performance. We hope our work can provide inspiration and a starting point for other researchers to keep pushing the state of the art.

Q: You work in the Beerylab, led by Sara Beery, where researchers are combining the pattern-recognition capabilities of machine-learning algorithms with computer vision technology to monitor wildlife. What are some other ways your team is tracking and analyzing the natural world, beyond CODA?

A: The lab is a really exciting place to work, and new projects are emerging all the time. We have ongoing projects monitoring coral reefs with drones, re-identifying individual elephants over time, and fusing multi-modal Earth observation data from satellites and in-situ cameras, just to name a few. Broadly, we look at emerging technologies for biodiversity monitoring and try to understand where the data analysis bottlenecks are, and develop new computer vision and machine-learning approaches that address those problems in a widely applicable way. It’s an exciting way of approaching problems that sort of targets the “meta-questions” underlying particular data challenges we face.

The computer vision algorithms I’ve worked on that count migrating salmon in underwater sonar video are examples of that work. We often deal with shifting data distributions, even as we try to construct the most diverse training datasets we can. We always encounter something new when we deploy a new camera, and this tends to degrade the performance of computer vision algorithms. This is one instance of a general problem in machine learning called domain adaptation, but when we tried to apply existing domain adaptation algorithms to our fisheries data we realized there were serious limitations in how existing algorithms were trained and evaluated. We were able to develop a new domain adaptation framework, published earlier this year in Transactions on Machine Learning Research, that addressed these limitations and led to advancements in fish counting, and even self-driving and spacecraft analysis.

One line of work that I’m particularly excited about is understanding how to better develop and analyze the performance of predictive ML algorithms in the context of what they are actually used for. Usually, the outputs from some computer vision algorithm (say, bounding boxes around animals in images) are not actually the thing that people care about, but rather a means to answering a larger question: what species live here, and how is that changing over time? We have been working on methods to analyze predictive performance in this context and to reconsider the ways that we incorporate human expertise into ML systems with this in mind. CODA was one example of this, where we showed that we could actually consider the ML models themselves as fixed and build a statistical framework to understand their performance very efficiently. We have been working recently on similar integrated analyses combining ML predictions with multi-stage prediction pipelines, as well as ecological statistical models.

The natural world is changing at unprecedented rates and scales, and being able to quickly move from scientific hypotheses or management questions to data-driven answers is more important than ever for protecting ecosystems and the communities that depend on them. Advancements in AI can play an important role, but we need to think critically about the ways that we design, train, and evaluate algorithms in the context of these very real challenges.