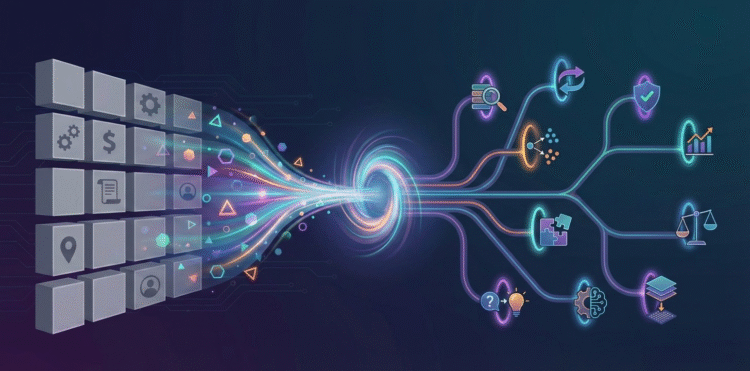

10 Ways to Use Embeddings for Tabular ML Tasks

Image by Editor

Introduction

Embeddings (vector-based numerical representations of typically unstructured data such as text) were popularized primarily in natural language processing (NLP). They are also a powerful tool for representing or supplementing tabular data in other machine learning workflows. This applies not only to text columns but also to categorical features with many distinct values that carry latent semantic properties.

This article presents 10 practical ways to use embeddings to get the most out of your data across a variety of machine learning tasks, models, and projects.

Initial Setup: Several of the 10 strategies described below come with brief illustrative code excerpts. They share the toy customer-review dataset defined first, together with the basic imports most of them need.

```python
import pandas as pd
import numpy as np

# Example toy dataset of customer reviews
df = pd.DataFrame({
    "user_id": [101, 102, 103, 101, 104],
    "product": ["Phone", "Laptop", "Tablet", "Laptop", "Phone"],
    "category": ["Electronics", "Electronics", "Electronics", "Electronics", "Electronics"],
    "review": ["great battery", "fast performance", "light weight", "solid build quality", "amazing camera"],
    "rating": [5, 4, 4, 5, 5]
})
```

1. Encoding Categorical Features With Embeddings

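This approach is particularly useful in applications like recommender systems. High-cardinality categorical features, such as user and product IDs, are best turned into learned vector representations rather than handled as plain numbers (which imposes a meaningless order) or one-hot encoded (which yields very wide, sparse inputs). Because the vectors are trained on the task, users or products that behave similarly tend to end up close together, so the embeddings capture relationships among users and products that raw IDs cannot express.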

The following example defines two embedding layers in a Keras neural network that takes integer-encoded user and product IDs and maps each one to an 8-dimensional embedding.

```python
from tensorflow.keras.layers import Input, Embedding, Flatten, Dense, Concatenate
from tensorflow.keras.models import Model

# Both inputs are categorical IDs, integer-encoded as 0..input_dim-1
user_input = Input(shape=(1,))
user_embed = Embedding(input_dim=500, output_dim=8)(user_input)  # up to 500 users -> 8-dim vectors
user_vec = Flatten()(user_embed)

prod_input = Input(shape=(1,))
prod_embed = Embedding(input_dim=50, output_dim=8)(prod_input)  # up to 50 products -> 8-dim vectors
prod_vec = Flatten()(prod_embed)

# Concatenate both embeddings and regress a single value (e.g., the rating)
concat = Concatenate()([user_vec, prod_vec])
output = Dense(1)(concat)

model = Model([user_input, prod_input], output)
model.compile(optimizer="adam", loss="mse")
```
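Both inputs expect contiguous integer indices, so string labels such as "Phone" must be encoded first. A minimal sketch, assuming pd.factorize as the encoder (one option among several), shows that preparation and what an embedding layer does at lookup time: it selects one row of a weight matrix per index. The random matrix is a hypothetical stand-in for the weights Keras would learn.

```python
import numpy as np
import pandas as pd

# The two ID columns of the toy dataset, rebuilt so the sketch is self-contained
ids = pd.DataFrame({
    "user_id": [101, 102, 103, 101, 104],
    "product": ["Phone", "Laptop", "Tablet", "Laptop", "Phone"],
})

# Map raw IDs/labels to contiguous integer indices 0..n-1 (order of first appearance)
ids["user_idx"], user_vocab = pd.factorize(ids["user_id"])
ids["product_idx"], product_vocab = pd.factorize(ids["product"])

# An embedding layer is a lookup table with one row per index;
# random values stand in for the weights training would learn
rng = np.random.default_rng(0)
product_table = rng.normal(size=(len(product_vocab), 8))
product_vecs = product_table[ids["product_idx"].to_numpy()]

print(ids["product_idx"].tolist())  # [0, 1, 2, 1, 0]
print(product_vecs.shape)           # (5, 8)
```

The user_idx and product_idx columns are what would be fed to user_input and prod_input. Indices must stay below input_dim, so len(product_vocab) would suffice here; some pipelines reserve one extra index for IDs unseen during training.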

2. Averaging Word Embeddings for Text Columns

This approach compresses texts of variable length into fixed-size embeddings by aggregating the word-level embeddings within each text. It builds on one of the most common uses of embeddings; the twist is averaging word-level vectors into a single sentence- or text-level vector.

The following example uses Gensim, which implements the popular Word2Vec algorithm for turning linguistic units (typically words) into embeddings. It trains Word2Vec on the review column, then averages the word embeddings of each review to obtain one embedding per review.

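```python
from gensim.models import Word2Vec

# Train embeddings on the review text (a toy corpus: with five short reviews,
# the resulting vectors are only illustrative)
sentences = df["review"].str.lower().str.split().tolist()
w2v = Word2Vec(sentences, vector_size=16, min_count=1)

# Average the word vectors of each review into one 16-dim review embedding
df["review_emb"] = df["review"].apply(
    lambda t: np.mean([w2v.wv[w] for w in t.lower().split()], axis=0)
)
```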
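The aggregation itself is just an element-wise mean, so texts of any length map to vectors of the same size. The sketch below uses a hypothetical hand-written dictionary of 4-dimensional word vectors in place of w2v.wv, so it runs without Gensim. It also skips unknown words; the one-liner above would raise a KeyError on new reviews containing words absent from the training vocabulary.

```python
import numpy as np

# Hypothetical 4-dim word vectors (a trained Word2Vec model would supply these)
word_vecs = {
    "great":   np.array([0.9, 0.1, 0.0, 0.2]),
    "battery": np.array([0.1, 0.8, 0.3, 0.0]),
    "solid":   np.array([0.7, 0.0, 0.1, 0.5]),
    "build":   np.array([0.2, 0.1, 0.9, 0.4]),
    "quality": np.array([0.6, 0.2, 0.3, 0.7]),
}

def mean_pool(text, vecs, dim=4):
    """Average the vectors of known words; return zeros if no word is known."""
    known = [vecs[w] for w in text.lower().split() if w in vecs]
    return np.mean(known, axis=0) if known else np.zeros(dim)

emb_short = mean_pool("great battery", word_vecs)        # 2 words
emb_long = mean_pool("solid build quality", word_vecs)   # 3 words
print(emb_short.shape, emb_long.shape)  # (4,) (4,)
```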

3. Clustering Embeddings Into Meta-Features

Stacking the individual review embeddings vertically into a 2D NumPy array (a matrix) is the key step for clustering them and identifying natural groupings that may correspond to topics in the review set. The resulting cluster IDs capture coarse semantic groups and can serve as new, informative categorical features.

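```python
from sklearn.cluster import KMeans

# Stack the per-review vectors into an (n_reviews, 16) matrix
emb_matrix = np.vstack(df["review_emb"].values)
km = KMeans(n_clusters=3, random_state=42).fit(emb_matrix)
df["review_topic"] = km.labels_  # cluster ID as a new categorical feature
```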
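Cluster IDs are a hard, one-column summary. A possible extension, sketched below on random stand-in vectors rather than the toy reviews, also keeps each row's distance to every centroid via KMeans.transform, which yields one numeric meta-feature per cluster. The sizes (30 rows, 16 dimensions, 3 clusters) are arbitrary assumptions.

```python
import numpy as np
from sklearn.cluster import KMeans

rng = np.random.default_rng(0)
# Stand-in for np.vstack(df["review_emb"].values): 30 reviews x 16 dims
emb_matrix = rng.normal(size=(30, 16))

km = KMeans(n_clusters=3, n_init=10, random_state=42).fit(emb_matrix)

# Hard assignment: one categorical meta-feature (cluster ID)
topic = km.labels_
# Soft alternative: Euclidean distance to each centroid, one column per cluster
dist_to_centroids = km.transform(emb_matrix)

print(topic.shape, dist_to_centroids.shape)  # (30,) (30, 3)
```

The distance columns preserve how strongly a review belongs to each topic, which a single cluster ID discards.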

4. Learning Self-Supervised Tabular Embeddings

Structured data can also be turned into numerical vector representations without any labels. For unlabeled datasets in particular, this reframes an unsupervised problem as a self-supervised one: the data itself generates the training signal, and the intermediate representations the model learns along the way serve as row embeddings.

These approaches go somewhat beyond the practical scope of this article, but they commonly rely on one of the following pretext tasks:

- Masked feature prediction: randomly hide some features' values, similar to the masked language modeling used to train BERT-style language models, and train the model to predict them from the remaining visible features.

- Perturbation detection: expose the model to a noisy variant of the data, with some feature values swapped or replaced, and set the training goal as identifying which values are “legitimate” and which ones have been altered.
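A minimal sketch of masked feature prediction, assuming scikit-learn's MLPRegressor as the model and a synthetic numeric table (both illustrative choices, not a standard recipe): random cells are zeroed, a binary mask is appended so the network knows which values are hidden, and the network is trained to reconstruct the original row. The hidden-layer activations then serve as row embeddings. For simplicity, the loss covers all cells rather than only the masked ones.

```python
import numpy as np
from sklearn.neural_network import MLPRegressor

rng = np.random.default_rng(0)

# Hypothetical unlabeled table: 200 rows, 6 standardized numeric features,
# with one correlated pair so there is structure to learn
X = rng.normal(size=(200, 6))
X[:, 3] = X[:, 0] + 0.1 * rng.normal(size=200)

def mask_features(X, mask_rate, rng):
    """Hide a random subset of cells (set to 0) and return the boolean mask."""
    mask = rng.random(X.shape) < mask_rate
    return np.where(mask, 0.0, X), mask

X_masked, mask = mask_features(X, mask_rate=0.3, rng=rng)

# Pretext task: from (masked values, mask indicator) predict the original values
encoder = MLPRegressor(hidden_layer_sizes=(4,), activation="relu",
                       max_iter=1000, random_state=0)
encoder.fit(np.hstack([X_masked, mask.astype(float)]), X)

def embed(X_new):
    """Hidden-layer activations for fully observed rows (mask indicator all 0)."""
    inputs = np.hstack([X_new, np.zeros_like(X_new)])
    hidden = inputs @ encoder.coefs_[0] + encoder.intercepts_[0]
    return np.maximum(hidden, 0.0)  # ReLU, matching activation="relu"

row_embeddings = embed(X)
print(row_embeddings.shape)  # (200, 4)
```

The perturbation-detection variant would instead replace the selected cells with values drawn from other rows of the same column and train a classifier to predict the mask itself.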

5. Building Multi-Labeled Categorical Embeddings

This approach describes each category through a set of tags. It avoids runtime errors when a category itself is missing from the vocabulary of an embedding model such as Word2Vec, while still producing a usable embedding.

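This example represents a single category like “Phone” using multiple tags such as “mobile” or “touch.” It builds a composite semantic embedding by averaging the embeddings of the category's tags, skipping tags that are out of vocabulary and falling back to a zero vector if none are known. Unlike one-hot encoding, which treats every pair of categories as equally dissimilar, this captures similarity between categories whose tags are related, and with pre-trained word vectors it can draw on knowledge that the training data alone does not contain.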

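```python
tags = {
    "Phone": ["mobile", "touch"],
    "Laptop": ["portable", "cpu"],
    "Tablet": []  # empty list so "Tablet" is handled (falls back to zeros)
}

def safe_mean_embedding(words, model, dim):
    """Average the vectors of in-vocabulary words; return zeros if none are known."""
    vecs = [model.wv[w] for w in words if w in model.wv]
    return np.mean(vecs, axis=0) if vecs else np.zeros(dim)

# Note: the toy w2v model only saw the five reviews, so none of these tags are in
# its vocabulary and every product gets zeros; use pre-trained vectors in practice.
df["tag_emb"] = df["product"].apply(
    lambda p: safe_mean_embedding(tags[p], w2v, 16)
)
```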
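To see why tag-based embeddings capture similarity that one-hot encoding cannot, the sketch below compares cosine similarities. It assumes hypothetical 2-dimensional tag vectors standing in for pre-trained word embeddings, and gives "Tablet" illustrative tags (the example above leaves it empty).

```python
import numpy as np

# Hypothetical 2-dim tag vectors standing in for pre-trained word embeddings
tag_vecs = {
    "mobile":   np.array([1.0, 0.0]),
    "touch":    np.array([0.8, 0.2]),
    "portable": np.array([0.5, 0.5]),
    "cpu":      np.array([0.0, 1.0]),
}
# Illustrative tag sets; Tablet shares one tag with Phone and one with Laptop
product_tags = {
    "Phone":  ["mobile", "touch"],
    "Tablet": ["touch", "portable"],
    "Laptop": ["portable", "cpu"],
}

def cosine(a, b):
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

# Composite embedding = mean of tag vectors; one-hot = identity rows
tag_emb = {p: np.mean([tag_vecs[t] for t in ts], axis=0) for p, ts in product_tags.items()}
one_hot = {p: np.eye(3)[i] for i, p in enumerate(product_tags)}

print(round(cosine(tag_emb["Phone"], tag_emb["Tablet"]), 2))  # 0.93
print(round(cosine(tag_emb["Phone"], tag_emb["Laptop"]), 2))  # 0.42
print(cosine(one_hot["Phone"], one_hot["Tablet"]))            # 0.0
```

With one-hot vectors every pair of distinct products has similarity 0.0, whereas the tag-based embeddings rank Tablet closer to Phone than Laptop is.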

6. Using Contextual Embeddings for Categorical Features

This slightly more sophisticated approach first maps categorical variables to standard (static) embeddings, then passes them through self-attention layers to produce context-enriched embeddings. These dynamic representations can change from one data instance (e.g., a product review) to another and capture dependencies among attributes as well as higher-order feature interactions. In other words, downstream models can interpret the same category differently depending on its context, i.e., the values of the other features.
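A minimal NumPy sketch of the core mechanism, in the spirit of TabTransformer-style models: one static vector per categorical column, followed by a single scaled dot-product self-attention step across the columns of a row. The weights are random stand-ins for learned parameters, and the "Accessories" category is hypothetical (the toy dataset only contains "Electronics"). The sketch shows that the same product receives different contextual vectors when another feature changes.

```python
import numpy as np

rng = np.random.default_rng(42)
d = 8  # embedding size (assumption)

# Hypothetical vocabularies for two categorical columns
vocab = {
    "product": ["Phone", "Laptop", "Tablet"],
    "category": ["Electronics", "Accessories"],
}
# Static (context-free) embedding table per column; random stand-ins for learned weights
tables = {col: rng.normal(size=(len(vals), d)) for col, vals in vocab.items()}
W_q, W_k, W_v = (rng.normal(size=(d, d)) / np.sqrt(d) for _ in range(3))

def static_embeddings(row):
    """One lookup per categorical column -> array of shape (n_columns, d)."""
    return np.stack([tables[col][vocab[col].index(row[col])] for col in vocab])

def self_attention(E):
    """Single-head scaled dot-product self-attention across a row's features."""
    Q, K, V = E @ W_q, E @ W_k, E @ W_v
    scores = Q @ K.T / np.sqrt(d)
    weights = np.exp(scores - scores.max(axis=1, keepdims=True))  # stable softmax
    weights /= weights.sum(axis=1, keepdims=True)
    return E + weights @ V  # residual connection, as in Transformer blocks

row_a = {"product": "Phone", "category": "Electronics"}
row_b = {"product": "Phone", "category": "Accessories"}

static_a, static_b = static_embeddings(row_a), static_embeddings(row_b)
ctx_a, ctx_b = self_attention(static_a), self_attention(static_b)

print(np.allclose(static_a[0], static_b[0]))  # True: same static "Phone" vector
print(np.allclose(ctx_a[0], ctx_b[0]))        # False: the context changed it
```

Real models stack several such layers with multiple heads and feed-forward sublayers, and learn all weights end to end.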

7. Learning Embeddings on Binned Numerical Features

It is common to convert fine-grained numerical features such as age into bins (e.g., age groups) during preprocessing. This strategy goes one step further and learns an embedding for each bin, which lets a model represent outlier ranges or nonlinear, even non-monotonic, effects of the original numeric feature.

In this example, the numerical rating feature is binned, and a neural embedding layer then learns a separate 3-dimensional vector for each rating range.

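```python
from tensorflow.keras.layers import Input, Embedding, Flatten

df["rating_bin"] = pd.cut(df["rating"], bins=4, labels=False)  # 4 equal-width bins -> IDs 0..3
bin_input = Input(shape=(1,))  # receives df["rating_bin"] values
bin_vec = Flatten()(Embedding(input_dim=4, output_dim=3)(bin_input))  # one 3-dim vector per bin
```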
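One practical detail is that new data must be mapped onto the same bins used during training. A minimal sketch, assuming a hypothetical age column and a random matrix standing in for the learned embedding weights: pd.cut(..., retbins=True) returns the training bin edges, and np.digitize reuses the inner edges so that values outside the training range fall into the first or last bin instead of becoming NaN.

```python
import numpy as np
import pandas as pd

ages = pd.Series([18, 22, 25, 31, 38, 45, 52, 60, 67, 75])  # hypothetical feature

# Learn 4 equal-width bins on training data and keep the edges
train_bins, edges = pd.cut(ages, bins=4, labels=False, retbins=True)

def to_bin(values, edges):
    """Map values to bin IDs 0..n_bins-1 using the training edges (right-closed).
    Values beyond the training range land in the first/last bin."""
    return np.digitize(values, edges[1:-1], right=True)

# One embedding row per bin (random stand-in for trained weights)
emb_table = np.random.default_rng(0).normal(size=(len(edges) - 1, 3))

new_ages = np.array([10, 33, 90])
new_vecs = emb_table[to_bin(new_ages, edges)]
print(to_bin(new_ages, edges))  # [0 1 3]
print(new_vecs.shape)           # (3, 3)
```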

8. Fusing Embeddings and Raw Features (Interaction Features)

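This approach combines previously trained semantic embeddings with raw numerical features in a single input vector. It also has to cope with labels missing from the embedding vocabulary: product names such as “phone” never appear in the toy Word2Vec training text, so they fall back to a zero vector.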

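This example first obtains a 16-dimensional embedding for each product name, then appends the raw rating. For downstream modeling, this gives the model information about both the product and how it was perceived (e.g., sentiment). Strictly speaking, concatenation does not build interaction terms itself; it places both signals in one vector so the downstream model can learn their interactions.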

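```python
# 16-dim embedding per product name; zeros if the name is out of vocabulary
df["product_emb"] = df["product"].str.lower().apply(
    lambda p: w2v.wv[p] if p in w2v.wv else np.zeros(16)
)

# Append the raw rating to each embedding -> 17-dim fused vector
df["user_product_emb"] = df.apply(
    lambda r: np.concatenate([r["product_emb"], [r["rating"]]]), axis=1
)
```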
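If explicit interaction features are wanted, one option (an illustrative choice, not part of the recipe above) is to scale every embedding dimension by the centered rating and add those columns next to the plain concatenation. The sketch uses random stand-in vectors in place of the stacked df["product_emb"] rows.

```python
import numpy as np

rng = np.random.default_rng(0)
product_emb = rng.normal(size=(5, 16))   # stand-in for stacked df["product_emb"] rows
rating = np.array([5, 4, 4, 5, 5], dtype=float)

# Plain fusion: embedding + raw rating -> 16 + 1 = 17 columns
fused = np.hstack([product_emb, rating[:, None]])

# Explicit interaction: each embedding dimension scaled by the centered rating
rating_centered = rating - rating.mean()
interaction = product_emb * rating_centered[:, None]  # 16 columns

features = np.hstack([fused, interaction])
print(features.shape)  # (5, 33)
```

Centering makes the interaction columns reflect above- or below-average ratings rather than being a near-copy of the embedding multiplied by a similar positive number in every row.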

9. Using Sentence Embeddings for Long Text

Sentence transformers encode a whole sequence, such as a text review, into a single embedding vector that captures sequence-level semantics. Wrapping the encoder's 2D output array in list() stores one fixed-width vector per row, turning unstructured text into attributes that models can use alongside classical tabular columns.

```python
from sentence_transformers import SentenceTransformer

model = SentenceTransformer("sentence-transformers/all-MiniLM-L6-v2")
# encode() returns a 2D array (one row per review); list() stores one vector per row
df["sent_emb"] = list(model.encode(df["review"].tolist()))
```
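Before modeling, the list column is usually expanded into one numeric column per dimension. A minimal pandas sketch, using a random array as a stand-in for the model.encode(...) output (all-MiniLM-L6-v2 produces 384-dimensional vectors) and the hypothetical column prefix sent_:

```python
import numpy as np
import pandas as pd

tab = pd.DataFrame({"rating": [5, 4, 4, 5, 5]})  # other tabular columns
# Stand-in for model.encode(...): one 384-dim vector per review
sent_matrix = np.random.default_rng(0).normal(size=(len(tab), 384))

emb_cols = pd.DataFrame(
    sent_matrix,
    columns=[f"sent_{i}" for i in range(sent_matrix.shape[1])],
    index=tab.index,  # keep row alignment with the original table
)
wide = pd.concat([tab, emb_cols], axis=1)
print(wide.shape)  # (5, 385)
```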

10. Feeding Embeddings Into Tree Models

The final strategy combines representation learning with tabular learning in a hybrid approach. Embeddings stored in a single column are expanded into one feature column per dimension, since tree ensembles such as XGBoost expect flat numeric inputs. The focus here is not on how embeddings are created but on how they are fed to a downstream model alongside other data.

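```python
import xgboost as xgb

# One numeric column per embedding dimension (emb_0 ... emb_15)
X = pd.DataFrame(df["review_emb"].tolist(), index=df.index).add_prefix("emb_")
# Other tabular data alongside the embeddings: one-hot encoded product
X = pd.concat([X, pd.get_dummies(df["product"], prefix="product", dtype=int)], axis=1)
y = df["rating"]  # target; keep it out of X to avoid label leakage

model = xgb.XGBRegressor()
model.fit(X, y)
```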

Closing Remarks

Embeddings are not just an NLP tool. This article showed a variety of ways to use them, many requiring little extra effort, that can strengthen machine learning workflows by exposing semantic similarity among examples, enabling richer interaction modeling, and producing compact, informative feature representations.