Knowledge Graphs and their limitations

With the rapid growth of AI applications, Knowledge Graphs (KGs) have emerged as a foundational structure for representing knowledge in a machine-readable form. They organize information as triples (a head entity, a relation, and a tail entity), forming a graph in which entities are nodes and relationships are edges. This representation lets machines reason over connected knowledge, supporting applications such as question answering, semantic analysis, and recommendation systems.
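To make the triple structure concrete, here is a minimal sketch of an in-memory triple store. The `Triple` and `KnowledgeGraph` names, the relation labels, and the sample facts are illustrative assumptions, not part of any particular KG library.

```python
from collections import defaultdict
from typing import NamedTuple


class Triple(NamedTuple):
    head: str      # subject entity (a node)
    relation: str  # edge label
    tail: str      # object entity (a node)


class KnowledgeGraph:
    """Tiny in-memory triple store indexed by head entity (illustrative only)."""

    def __init__(self) -> None:
        self._by_head: dict[str, list[Triple]] = defaultdict(list)

    def add(self, head: str, relation: str, tail: str) -> None:
        self._by_head[head].append(Triple(head, relation, tail))

    def tails(self, head: str, relation: str) -> list[str]:
        """Return every tail reachable from `head` via `relation`."""
        return [t.tail for t in self._by_head.get(head, []) if t.relation == relation]


kg = KnowledgeGraph()
kg.add("Marie Curie", "born_in", "Warsaw")
kg.add("Marie Curie", "field", "Physics")
kg.add("Warsaw", "capital_of", "Poland")

# Two-hop question: which country is Marie Curie's birthplace the capital of?
birthplace = kg.tails("Marie Curie", "born_in")[0]
country = kg.tails(birthplace, "capital_of")
```

Answering the two-hop question means following one edge and then another, which is the kind of connected reasoning the triple representation is meant to support.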

Despite their effectiveness, KGs have notable limitations. They often lose important contextual information, which makes it difficult to capture the complexity and richness of real-world knowledge. Many KGs also suffer from data sparsity, where entities and relationships are incomplete or poorly connected. This incomplete annotation limits the contextual signals available during inference and makes effective reasoning harder, even when a KG is combined with a large language model.

Context Graphs

Context Graphs (CGs) extend traditional Knowledge Graphs by adding extra information such as time, location, and source details. Instead of storing knowledge as isolated facts, they capture the situation in which a fact or decision occurred, leading to a clearer and more accurate understanding of real-world knowledge.
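As a rough sketch of what attaching time, location, and source to a fact might look like, the example below wraps a triple in a record with a validity window and provenance. The `ContextualFact` class, its field names, and the `ExampleCorp` data are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class ContextualFact:
    head: str
    relation: str
    tail: str
    valid_from: date                 # time context: when the fact starts to hold
    valid_to: Optional[date] = None  # None means the fact still holds
    location: Optional[str] = None   # where the fact applies
    source: Optional[str] = None     # provenance: where the fact came from

    def holds_on(self, day: date) -> bool:
        """True if `day` falls inside the fact's validity window."""
        return self.valid_from <= day and (self.valid_to is None or day <= self.valid_to)


# Hypothetical facts: the same head and relation, but different context.
facts = [
    ContextualFact("ExampleCorp", "headquartered_in", "Berlin",
                   valid_from=date(2010, 1, 1), valid_to=date(2018, 12, 31),
                   location="Germany", source="annual_report_2018"),
    ContextualFact("ExampleCorp", "headquartered_in", "Amsterdam",
                   valid_from=date(2019, 1, 1),
                   location="Netherlands", source="press_release_2019"),
]


def headquarters_on(day: date) -> list[str]:
    """Answer the question for a specific point in time."""
    return [f.tail for f in facts
            if f.relation == "headquartered_in" and f.holds_on(day)]
```

A plain triple store would hold both facts as equally true; with the validity window, `headquarters_on` can return the answer that applied on a given date.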

When used with agent-based systems, context graphs also store how decisions were made. Agents need more than rules—they need to know how rules were applied before, when exceptions were allowed, who approved decisions, and how conflicts were handled. Since agents operate directly where decisions happen, they can naturally record this full context.

Over time, these stored decision traces form a context graph that helps agents learn from past actions. This allows systems to understand not only what happened, but also why it happened, making agent behavior more consistent and reliable.
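One way an agent might record such decision traces is sketched below. The `DecisionTrace` and `TraceLog` classes, the refund rule, and the sample records are assumptions made for illustration; a real system would likely persist traces in a graph or event store rather than a Python list.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class DecisionTrace:
    decision: str                           # what was decided
    rule_checked: str                       # which policy or rule was consulted
    inputs: dict                            # data the agent looked at
    approved_by: Optional[str] = None       # who signed off, if anyone
    exception_reason: Optional[str] = None  # why the rule was bent, if it was
    recorded_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc))


class TraceLog:
    """Append-only log of decision traces; a stand-in for a graph store."""

    def __init__(self) -> None:
        self._traces: list[DecisionTrace] = []

    def record(self, trace: DecisionTrace) -> None:
        self._traces.append(trace)

    def exceptions_for(self, rule: str) -> list[DecisionTrace]:
        """Past decisions where `rule` was checked and an exception was granted."""
        return [t for t in self._traces
                if t.rule_checked == rule and t.exception_reason is not None]


log = TraceLog()
log.record(DecisionTrace(
    decision="refund_approved",
    rule_checked="refund_window_30_days",
    inputs={"order_id": "A-1001", "days_since_purchase": 41},
    approved_by="support_lead",
    exception_reason="shipment delayed by carrier",
))
log.record(DecisionTrace(
    decision="refund_denied",
    rule_checked="refund_window_30_days",
    inputs={"order_id": "A-1002", "days_since_purchase": 95},
))

past_exceptions = log.exceptions_for("refund_window_30_days")
```

Each trace keeps the rule, the inputs, the approver, and the reason together, so a later agent can see not only that a refund was approved outside the window but also why.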

What are the effects of Contextual Information?

Contextual information adds important layers to knowledge representation by going beyond simple entity-relation facts. It helps distinguish between facts that look similar but occur under different conditions, such as differences in time, location, scale, or surrounding circumstances. For example, two companies may be competitors in one market or time period but not in another. By capturing such context, systems can represent knowledge in more detail and avoid treating all similar-looking facts as identical.

In context graphs, contextual information also plays a key role in reasoning and decision-making. It includes signals such as historical decisions, policies applied, exceptions granted, approvals involved, and related events from other systems. When agents record how a decision was made—what data was used, which rule was checked, and why an exception was allowed—this information becomes reusable context for future decisions. Over time, these records help connect entities that are not directly linked and allow systems to reason based on past outcomes and precedents, rather than relying only on fixed rules or isolated triples.
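The sketch below illustrates, under simplified assumptions, how recorded decisions can link entities that share no direct edge and how precedents can be looked up. The record layout, policy names, and entity names are all hypothetical.

```python
from collections import defaultdict

# Hypothetical decision records: each links the entities involved in one decision.
decisions = [
    {"id": "d1", "policy": "discount_cap_15pct", "customer": "acme",
     "approver": "vp_sales", "exception": True, "outcome": "renewed"},
    {"id": "d2", "policy": "discount_cap_15pct", "customer": "globex",
     "approver": "vp_sales", "exception": True, "outcome": "churned"},
    {"id": "d3", "policy": "net_60_terms", "customer": "acme",
     "approver": "cfo", "exception": False, "outcome": "renewed"},
]

# Index decisions by every entity they mention, so decisions act as hub nodes.
by_entity = defaultdict(set)
for d in decisions:
    for key in ("policy", "customer", "approver"):
        by_entity[d[key]].add(d["id"])


def linked_through_decisions(a: str, b: str) -> set[str]:
    """Decision ids that mention both entities, i.e. an indirect link from a to b."""
    return by_entity.get(a, set()) & by_entity.get(b, set())


def precedents(policy: str, exception: bool) -> list[dict]:
    """Past decisions under the same policy with the same exception status."""
    return [d for d in decisions
            if d["policy"] == policy and d["exception"] == exception]


shared = linked_through_decisions("acme", "vp_sales")
outcomes = [d["outcome"] for d in precedents("discount_cap_15pct", exception=True)]
```

Here `acme` and `vp_sales` have no direct relation, yet the shared decision connects them, and the precedent lookup surfaces what happened the last time the same exception was granted.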

There has been a clear shift in AI systems—from static tools to decision-making agents, driven largely by major industry players. Real-world decisions are rarely based on rules alone; they involve exceptions, approvals, and lessons from past cases. Context graphs address this gap by capturing how decisions are made across systems—what policies were checked, which data was used, who approved the decision, and what outcome followed. By structuring this decision history as context, agents can reuse prior judgments instead of repeatedly relearning the same edge cases. Some examples of this shift include:

Google

- Gmail’s Gemini features and Gemini 3–based agent frameworks both show AI shifting from simple help to active decision-making, whether that’s managing inbox priorities or running complex workflows.

- Gmail relies on conversation history and user intent, while Gemini 3 agents use memory and state to handle longer tasks. In both cases, context matters more than single prompts.

- Gemini 3 acts as an orchestration layer for multi-agent systems (ADK, Agno, Letta, Eigent), similar to how Gemini orchestrates summarization, writing, and prioritization inside Gmail.

- Features like AI Inbox and Suggested Replies rely on persistent understanding of user behavior, just as agent frameworks like Letta and mem0 rely on stateful memory to prevent context loss and ensure consistent behavior.

- Gmail turns email into actionable summaries and to-dos, while Gemini-powered agents automate browsers, workflows, and enterprise tasks—both reflecting a broader shift toward AI systems that act, not just respond.

OpenAI

- ChatGPT Health brings health data from different sources—medical records, apps, wearables, and notes—into one place. This creates a clear, shared context that helps the system understand health patterns over time instead of answering isolated questions, similar to how context graphs link facts with their context.

- By using personal health history and past interactions, ChatGPT Health helps users make better-informed decisions, such as preparing for doctor visits or understanding test results.

- ChatGPT Health runs in a separate, secure space that keeps sensitive information private and contained. Keeping health context accurate and protected in this way is essential for safely using context-based systems such as context graphs.

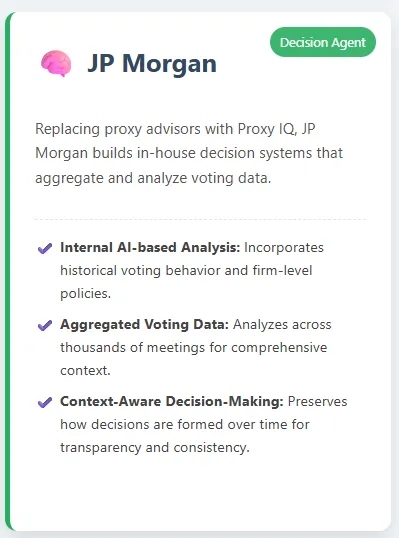

JP Morgan

- JP Morgan's replacement of proxy advisors with its in-house AI tool, Proxy IQ, shows a shift toward building internal decision systems that aggregate and analyze voting data across thousands of meetings, rather than relying on third-party recommendations.

- By analyzing proxy data internally, the firm can incorporate historical voting behavior, company-specific details, and firm-level policies—aligning with the idea of context graphs that preserve how decisions are formed over time.

- Internal AI-based analysis gives JP Morgan more transparency, speed, and consistency in proxy voting, reflecting a broader move toward context-aware, AI-driven decision-making in enterprise settings.

NVIDIA

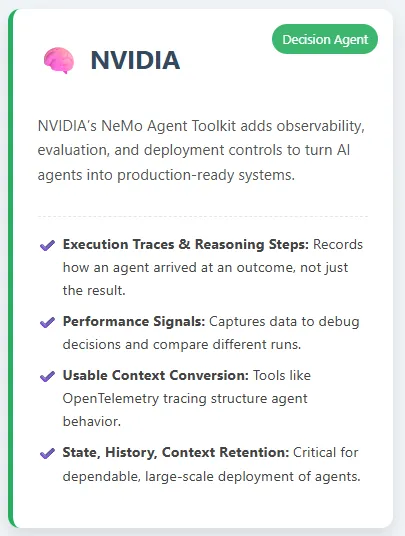

- NVIDIA’s NeMo Agent Toolkit helps turn AI agents into production-ready systems by adding observability, evaluation, and deployment controls. By capturing execution traces, reasoning steps, and performance signals, it records how an agent arrived at an outcome—not just the final result—aligning closely with the idea of context graphs.

- Tools like OpenTelemetry tracing and structured evaluations convert agent behavior into usable context. This makes it easier to debug decisions, compare different runs, and steadily improve reliability.

- Similar to how DLSS 4.5 integrates AI deeply into real-time graphics pipelines, the NeMo Agent Toolkit integrates AI agents into enterprise workflows. Both highlight a broader shift toward AI systems that retain state, history, and context, which is critical for dependable, large-scale deployment.

Microsoft

- Copilot Checkout and Brand Agents turn shopping conversations into direct purchases. Questions, comparisons, and decisions happen in one place, creating clear context around why a customer chose a product.

- These AI agents operate exactly where buying decisions happen—inside chats and brand websites—allowing them to guide users and complete checkout without extra steps.

- Merchants keep control of transactions and customer data. Over time, these interactions build useful context about customer intent and buying patterns, helping future decisions become faster and more accurate.