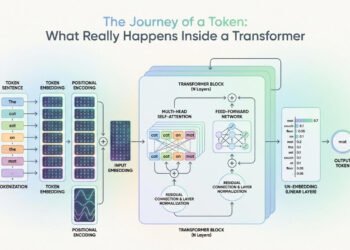

BERT is an early transformer-based model for NLP tasks that’s small and fast enough to train on a home computer. Like all deep learning models, it requires a tokenizer to convert text into integer tokens. This article shows how to train a WordPiece tokenizer following BERT’s original design.

Let’s get started.

Training a Tokenizer for BERT Models

Photo by JOHN TOWNER. Some rights reserved.

Overview

This article is divided into two parts; they are:

- Picking a Dataset

- Training a Tokenizer

Picking a Dataset

To keep things simple, we’ll use English text only. WikiText is a popular preprocessed dataset for experiments, available through the Hugging Face datasets library:

```python
import random

from datasets import load_dataset

# Path and configuration name of the dataset on the Hugging Face Hub
path, name = "wikitext", "wikitext-2-raw-v1"
dataset = load_dataset(path, name, split="train")
print(f"size: {len(dataset)}")

# Print a few samples
for idx in random.sample(range(len(dataset)), 5):
    text = dataset[idx]["text"].strip()
    print(f"{idx}: {text}")
```

On first run, the dataset downloads to ~/.cache/huggingface/datasets and is cached for future use. WikiText-2, used above, is a smaller dataset suitable for quick experiments, while WikiText-103 is larger and more representative of real-world text, which makes it a better choice for training a model.

The output of this code may look like this:

```
size: 36718
23905: Dudgeon Creek
4242: In 1825 the Congress of Mexico established the Port of Galveston and in 1830 …
7181: Crew : 5
24596: On March 19 , 2007 , Sports Illustrated posted on its website an article in its …
12920: The most recent building included in the list is in the Quantock Hills . The …
```

The dataset contains strings of varying lengths with spaces around punctuation marks. While you could split on whitespace, this wouldn’t capture sub-word components. That’s what the WordPiece tokenization algorithm is good at.
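To see why whitespace splitting alone falls short, here is a minimal sketch on a made-up two-sentence corpus (the sentences are illustrative assumptions, not taken from WikiText). It builds a word-level vocabulary and shows that any word not seen during vocabulary building has no id at all, even when it is a close variant of a known word:

```python
# Toy corpus in the WikiText style: spaces already surround punctuation.
corpus = [
    "The port was established in 1825 .",
    "The crew established a new port .",
]

# Word-level vocabulary: every distinct whitespace-separated string gets an id.
vocab = {}
for line in corpus:
    for word in line.split():
        vocab.setdefault(word, len(vocab))

def encode_words(text):
    """Map each whitespace-separated word to its id, or None if unseen."""
    return [vocab.get(word) for word in text.split()]

known = encode_words("The crew established a port .")
unseen = encode_words("The crew establishes ports .")
print(known)   # every word has an id
print(unseen)  # "establishes" and "ports" map to None
```

A sub-word tokenizer can instead fall back to smaller pieces it has seen before, so related word forms share tokens rather than each needing its own vocabulary entry.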

Training a Tokenizer

Several tokenization algorithms support sub-word components. BERT uses WordPiece, while modern LLMs often use Byte-Pair Encoding (BPE). We’ll train a WordPiece tokenizer following BERT’s original design.

The tokenizers library implements multiple tokenization algorithms that can be configured to your needs. It saves you the hassle of implementing the tokenization algorithm from scratch. You can install it with pip by running pip install tokenizers.

Let’s train a tokenizer:

```python
import tokenizers
from datasets import load_dataset

path, name = "wikitext", "wikitext-103-raw-v1"
vocab_size = 30522
dataset = load_dataset(path, name, split="train")
print(f"size: {len(dataset)}")

# Collect texts, skip empty lines and title lines starting with "="
texts = []
for line in dataset["text"]:
    line = line.strip()
    if line and not line.startswith("="):
        texts.append(line)

# Configure WordPiece tokenizer with NFKC normalization and special tokens
tokenizer = tokenizers.Tokenizer(tokenizers.models.WordPiece())
tokenizer.pre_tokenizer = tokenizers.pre_tokenizers.Whitespace()
tokenizer.decoder = tokenizers.decoders.WordPiece()
tokenizer.normalizer = tokenizers.normalizers.NFKC()
trainer = tokenizers.trainers.WordPieceTrainer(
    vocab_size=vocab_size,
    special_tokens=["[PAD]", "[CLS]", "[SEP]", "[MASK]", "[UNK]"],
)

# Train the tokenizer and save it
tokenizer.train_from_iterator(texts, trainer=trainer)
tokenizer.enable_padding(pad_id=tokenizer.token_to_id("[PAD]"), pad_token="[PAD]")
tokenizer_path = f"{name}_wordpiece.json"
tokenizer.save(tokenizer_path, pretty=True)

# Test the tokenizer
tokenizer = tokenizers.Tokenizer.from_file(tokenizer_path)
print(tokenizer.encode("Hello, world!").tokens)
print(tokenizer.decode(tokenizer.encode("Hello, world!").ids))
```

Running this code may print the following output:

```
wikitext-103-raw-v1/train-00000-of-00002(…): 100%|█████| 157M/157M [00:46<00:00, 3.40MB/s]
wikitext-103-raw-v1/train-00001-of-00002(…): 100%|█████| 157M/157M [00:04<00:00, 37.0MB/s]
Generating test split: 100%|███████████████| 4358/4358 [00:00<00:00, 174470.75 examples/s]
Generating train split: 100%|████████| 1801350/1801350 [00:09<00:00, 199210.10 examples/s]
Generating validation split: 100%|█████████| 3760/3760 [00:00<00:00, 201086.14 examples/s]
size: 1801350
[00:00:04] Pre-processing sequences ████████████████████████████ 0 / 0
[00:00:00] Tokenize words ████████████████████████████ 606445 / 606445
[00:00:00] Count pairs ████████████████████████████ 606445 / 606445
[00:00:04] Compute merges ████████████████████████████ 22020 / 22020
['Hell', '##o', ',', 'world', '!']
Hello, world!
```

This code uses the WikiText-103 dataset. The first run downloads the training split as two files of 157MB each, containing 1.8 million lines in total. The training itself takes only a few seconds. The example shows how “Hello, world!” becomes 5 tokens, with “Hello” split into “Hell” and “##o” (the “##” prefix indicates a sub-word component that continues the previous token).
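To make the “##” convention concrete, the sketch below shows how a trained WordPiece vocabulary is commonly applied to a single word: greedy longest-match-first, where every piece after the first carries the “##” prefix. This is a simplified illustration, not the tokenizers library's implementation; the function name wordpiece_encode and the tiny vocabulary are hypothetical, chosen only to reproduce the split seen in the output.

```python
def wordpiece_encode(word, vocab, unk_token="[UNK]", prefix="##"):
    """Split one word into sub-word pieces by greedy longest-match-first."""
    pieces = []
    start = 0
    while start < len(word):
        end = len(word)
        match = None
        # Try the longest remaining substring first, then shrink it.
        while end > start:
            candidate = word[start:end]
            if start > 0:
                candidate = prefix + candidate  # mark a continuation piece
            if candidate in vocab:
                match = candidate
                break
            end -= 1
        if match is None:
            return [unk_token]  # no piece fits, so the whole word is unknown
        pieces.append(match)
        start = end
    return pieces

# Hypothetical vocabulary (an assumption for illustration only).
toy_vocab = {"Hell", "##o", "world", "wor", "##ld", ",", "!"}

print(wordpiece_encode("Hello", toy_vocab))  # ['Hell', '##o']
print(wordpiece_encode("world", toy_vocab))  # ['world']
print(wordpiece_encode("xyz", toy_vocab))    # ['[UNK]']
```

Because the longest match wins, “world” stays whole even though “wor” and “##ld” are also in the toy vocabulary. A word with no matching pieces collapses to [UNK], which is why that special token must be in the vocabulary.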

The tokenizer trained on WikiText-103 above has the following properties:

- Vocabulary size: 30,522 tokens (matching the original BERT model)

- Special tokens: [PAD], [CLS], [SEP], [MASK], and [UNK] are added to the vocabulary even though they are not in the dataset.

- Pre-tokenizer: Whitespace splitting (since the dataset has spaces around punctuation)

- Normalizer: NFKC normalization for Unicode text. Note that you can also configure the tokenizer to convert everything to lowercase, as the common BERT-uncased model does.

- Algorithm: WordPiece is used. Hence the decoder should be set accordingly so that the “##” prefix for sub-word components is recognized.

- Padding: Enabled with the [PAD] token for batch processing. This is not demonstrated in the code above, but it will be useful when you are training a BERT model.
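To illustrate what the normalizer in the list above does, here is a small sketch using Python's standard unicodedata module rather than the tokenizers library. It applies NFKC normalization, optionally followed by lowercasing; the helper name normalize and the sample strings are assumptions made for illustration. In the tokenizers library, a similar effect would likely be achieved by chaining its NFKC and Lowercase normalizers in a Sequence.

```python
import unicodedata

def normalize(text, lowercase=False):
    """Apply NFKC normalization, optionally followed by lowercasing."""
    text = unicodedata.normalize("NFKC", text)
    return text.lower() if lowercase else text

samples = [
    "\ufb01ne",                        # starts with the single-character "fi" ligature
    "\uff28\uff45\uff4c\uff4c\uff4f",  # "Hello" written in full-width letters
    "x\u00b2",                         # "x" followed by superscript two
]

cased = [normalize(s) for s in samples]
uncased = [normalize(s, lowercase=True) for s in samples]
print(cased)    # ['fine', 'Hello', 'x2']
print(uncased)  # ['fine', 'hello', 'x2']
```

NFKC folds visually equivalent characters into one canonical form, so the vocabulary does not waste entries on variants of the same text.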

The tokenizer saves to a fairly large JSON file containing the full vocabulary, allowing you to reload the tokenizer later without retraining.

To convert a string into a list of tokens, you use the syntax tokenizer.encode(text).tokens, in which each token is just a string. For use in a model, you should use tokenizer.encode(text).ids instead, in which the result will be a list of integers. The decode method can be used to convert a list of integers back to a string. This is demonstrated in the code above.
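Once text is converted to integer ids, the sequences in a batch usually have different lengths, which is where the padding enabled earlier comes in. The sketch below shows the idea in plain Python: shorter sequences are right-padded with the [PAD] id, and an attention mask records which positions hold real tokens. The helper pad_batch, the sample ids, and the pad id of 0 are assumptions for illustration; with the trained tokenizer you would look up the id with tokenizer.token_to_id("[PAD]"), and the library's encode_batch method applies padding for you when it is enabled.

```python
def pad_batch(batch_ids, pad_id=0):
    """Right-pad every id list to the longest one and build attention masks."""
    max_len = max(len(ids) for ids in batch_ids)
    padded, masks = [], []
    for ids in batch_ids:
        n_pad = max_len - len(ids)
        padded.append(ids + [pad_id] * n_pad)
        masks.append([1] * len(ids) + [0] * n_pad)  # 1 = real token, 0 = padding
    return padded, masks

# Hypothetical token ids, as if returned by tokenizer.encode(text).ids
batch = [[7, 42, 9], [7, 5], [13, 8, 21, 4]]
padded, masks = pad_batch(batch)
print(padded)  # [[7, 42, 9, 0], [7, 5, 0, 0], [13, 8, 21, 4]]
print(masks)   # [[1, 1, 1, 0], [1, 1, 0, 0], [1, 1, 1, 1]]
```

After padding, all rows have equal length, so the batch can be stacked into a single tensor for the model.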


This article demonstrated how to train a WordPiece tokenizer for BERT using the WikiText dataset. You learned to configure the tokenizer with appropriate normalization and special tokens, and how to encode text to tokens and decode back to strings. This is just a starting point for tokenizer training. Consider leveraging existing libraries and tools to optimize tokenizer training speed so it doesn’t become a bottleneck in your training process.