Key takeaways:

- Mental health chatbots work when they know their limits. They’re most useful as a gentle first step, not as a stand-in for real care.

- Good chatbot design is more about judgment than AI. Clear boundaries, calm responses, and safety matter more than smart language models.

- Enterprises invest in chatbots to make support easier to reach, not to replace people. Availability and low pressure are the real drivers.

- Cost and complexity come from responsibility. Compliance, escalation paths, and long-term oversight shape budgets more than features do.

- The chatbots people trust feel steady, predictable, and restrained. They fit quietly into human-led support instead of trying to take over.

Mental health support rarely begins with a clear decision. More often, it starts quietly. Someone feels off but can’t quite name it. Someone wants to talk, but not yet to another person. In those moments, low-pressure digital support feels easier to reach. That’s why mental health chatbot development is getting serious attention, not as a passing trend, but as a practical layer of support people can turn to without friction.

At the same time, this is not an area where teams can move fast and fill gaps later. Mental health AI chatbot development carries responsibility from day one, whether it’s acknowledged or not. These tools are not here to replace therapists or act as standalone solutions. When they’re designed with care, they help users pause, reflect, build small coping habits, and recognise when it’s time to reach out to a human.

Teams that start building a chatbot for mental health often assume the hardest part is the AI itself. In reality, the tougher decisions sit elsewhere. Where should the chatbot stop? How should it respond when emotions run deep? How do you make sure personal feelings are handled with the same care as personal data? Those choices shape trust far more than any model upgrade ever will.

This guide looks at mental health chatbot app development through a lived-experience lens. It focuses on the decisions that matter after launch, not just during development. The goal is simple: help teams build systems that feel steady, respectful, and genuinely useful to people who turn to them in vulnerable moments.


Why Enterprises Are Investing in Mental Health Chatbots

In many organisations, mental health support has become a very practical concern. Leaders keep running into the same situation. People struggle quietly for weeks or months. They hesitate to ask for help. By the time they do, the impact is already showing up in missed days, dropped focus, or burnout. This is where a mental health chatbot starts to feel useful, not as a grand solution, but as a simple way to lower the first barrier.

Enterprises aren’t turning to chatbots to replace therapy. That’s not the goal. What they’re looking for is an entry point. Something someone can open in a moment of hesitation. A place to pause, check in, or get basic guidance without scheduling time or explaining themselves to another person. Mental health AI chatbot development fits here because it offers support when people actually reach out, often outside office hours or away from formal systems.

There’s also a longer view behind these decisions. According to Grand View Research, the global mental health apps market is expected to reach USD 17.5 billion by 2030, largely driven by employer-backed digital mental health initiatives and scalable support models. This isn’t about following a wellbeing trend. It’s about recognising that access matters as much as intent.

From an enterprise perspective, teams that start building a chatbot for mental health usually have a few clear reasons:

- Give people a private, low-effort way to seek support

- Offer something immediate instead of asking them to wait

- Reach large or distributed teams in a consistent way

- Encourage early support before issues grow harder to manage

For most organisations, this investment isn’t about innovation headlines. It’s about meeting people where they already are, with support that feels steady, accessible, and respectful.

Mental Health Chatbots in Practice: Role and Core Development Principles

Mental health chatbots work best when they stay in their lane. People don’t open them looking for diagnoses or answers. Most are just trying to get through a moment when something feels off, and they need help slowing their thoughts or naming how they feel. That is where mental health chatbot development actually makes sense, and the principles in this section reflect what tends to matter most once real users are involved.

In everyday use, these tools are not problem solvers. They play a light, supportive role. Mental health AI chatbot development is most effective when a chatbot helps someone reflect, try a simple exercise, or realise it might be time to speak with a real person. Once a chatbot starts sounding authoritative, trust usually drops. This is a common risk in AI mental health therapist chatbot development, where overconfidence can quietly undermine user safety and trust.

Teams that start building a chatbot for mental health often assume the hardest part is the AI. In reality, it is about judgment. Knowing when to respond, when to pause, and what should never be said. Those human decisions matter far more than the model’s level of advancement.

- Have a narrow, honest role: A chatbot that knows exactly what it is there for feels calmer and more reliable than one that tries to handle everything.

- Slow down when things get heavy: Not every message needs a clever answer. Sometimes, acknowledging and guiding someone toward help is enough.

- Make human support visible: Good mental health chatbot app development never hides the fact that real people are part of the journey.

- Handle emotions with restraint: Just because someone shares something personal does not mean the system needs to dig deeper.

- Stay consistent: A steady tone and predictable behaviour feel safer than personality or humour.

The mental health chatbots people keep using are rarely the most advanced. They are the ones that feel respectful, predictable, and aware of their limits. They support without taking over, and that is what makes them useful in the long run.


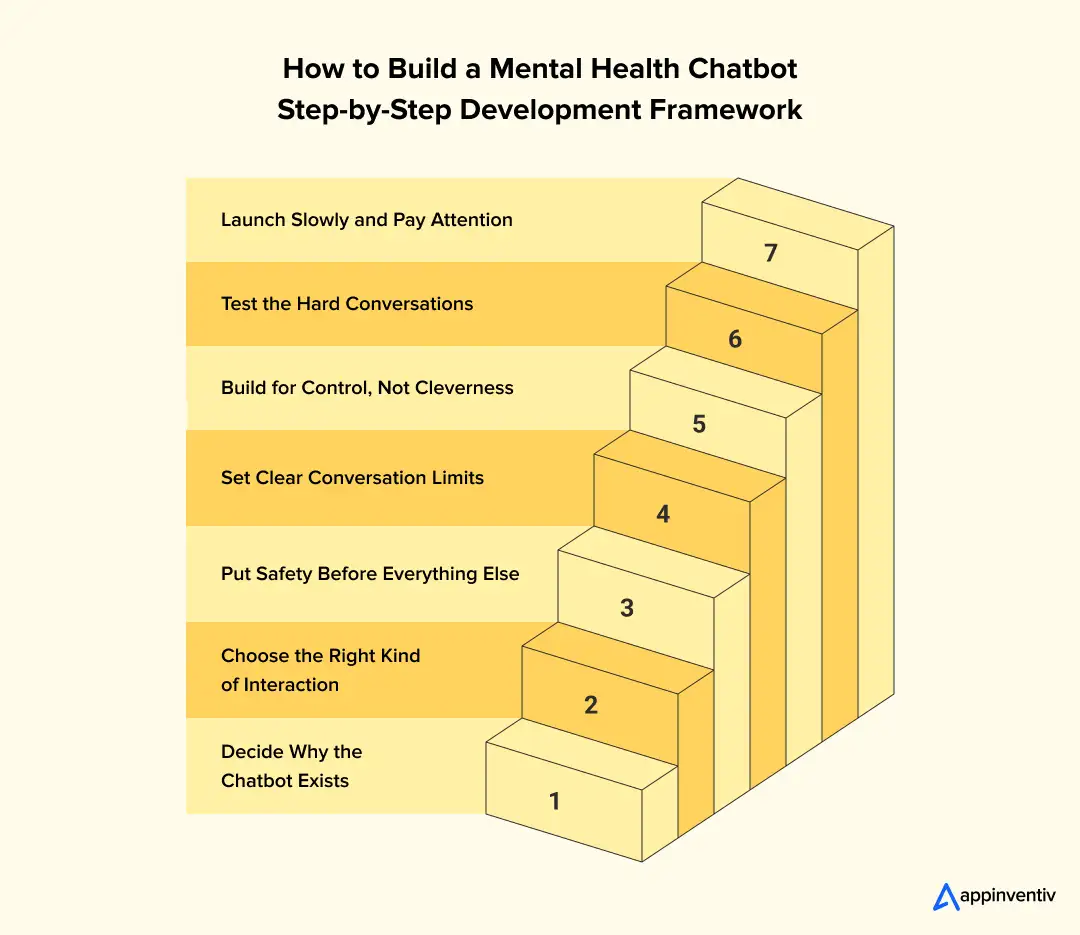

How to Build a Mental Health Chatbot: Step-by-Step Development Framework

Building a mental health chatbot isn’t about speed or feature count. It’s about getting a few important decisions right before anything goes live. People don’t interact with these systems when they’re curious or relaxed. They come in moments of doubt, stress, or quiet hesitation. That reality changes how mental health chatbot development should be approached.

What follows isn’t a textbook process. It’s closer to how teams actually build these systems when they’ve done it before.

Step 1: Decide Why the Chatbot Exists

This step sounds obvious, but it’s where many projects go wrong.

Before design or AI comes into the picture, teams need to be honest about scope.

- What should the chatbot help with?

- Who is it for?

- When should it stop and not continue the conversation?

The most stable mental health AI chatbot development efforts usually focus on a narrow role: daily check-ins, simple grounding exercises, and pointing users to human support. Trying to cover everything almost always creates risk.

Step 2: Choose the Right Kind of Interaction

This is less about intelligence and more about tone and flow. In real use, people don’t want to explain themselves. They want something easy to follow.

- Short prompts work better than open questions

- Simple exercises are easier than long explanations

- A predictable flow feels safer than a “chatty” one

When teams are building a chatbot for mental health, structure tends to outperform flexibility.

Step 3: Put Safety Before Everything Else

Safety decisions shape the entire system.

This usually means:

- Noticing when conversations turn heavy

- Avoiding guesses or interpretations

- Making it clear how to reach real help

Good mental health chatbot app development treats escalation as a foundation, not an edge case.

Step 4: Set Clear Conversation Limits

Once safety is defined, the rest becomes easier. Most teams rely on:

- Fixed responses for sensitive topics

- Clear limits on how deep conversations go

- Simple fallback messages when the chatbot isn’t sure

The goal isn’t to sound human. It’s to sound calm and consistent.
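The three limits above can be sketched as a small rule-first router. This is a minimal illustration, not a production design: the phrase list, the reply wording, and the names `SENSITIVE_PHRASES`, `SCRIPTED_REPLIES`, and `route` are all assumptions made for the example, and any real trigger list or crisis wording would need clinical review.

```python
from dataclasses import dataclass

# Hypothetical, illustrative phrases only; a real list needs clinical review.
SENSITIVE_PHRASES = ("hurt myself", "end my life", "can't go on")

# Placeholder wording; real crisis messaging should be written with clinicians.
FIXED_SENSITIVE_REPLY = (
    "Thank you for telling me. This is more than I can help with on my own. "
    "Please contact a local crisis line, emergency services, or someone you trust."
)
FALLBACK_REPLY = (
    "I'm not sure I understood that. Would you like a short breathing "
    "exercise, or ways to reach a person?"
)

# A small set of expected inputs mapped to scripted replies (assumed for illustration).
SCRIPTED_REPLIES = {
    "check in": "Thanks for checking in. How would you rate today from 1 to 5?",
    "breathing": "Let's try it: breathe in for four counts, out for six.",
}


@dataclass
class Reply:
    text: str
    kind: str  # "sensitive", "scripted", or "fallback"


def route(message: str) -> Reply:
    """Pick a reply using fixed rules, checked in order of priority."""
    lowered = message.lower()
    # 1. Sensitive topics always get the fixed, pre-approved response.
    if any(phrase in lowered for phrase in SENSITIVE_PHRASES):
        return Reply(FIXED_SENSITIVE_REPLY, "sensitive")
    # 2. Expected inputs follow a scripted path.
    for trigger, text in SCRIPTED_REPLIES.items():
        if trigger in lowered:
            return Reply(text, "scripted")
    # 3. Anything else gets a simple fallback instead of a guess.
    return Reply(FALLBACK_REPLY, "fallback")
```

Checking the sensitive rule first means a message that mentions both a routine request and a heavy topic never reaches the scripted path. Simple substring matching will miss many phrasings, so a sketch like this is a floor, not a detector.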

Step 5: Build for Control, Not Cleverness

This is where many projects overcomplicate things. For teams developing an AI chatbot for mental health support, reliability matters more than novelty, especially within enterprise or healthcare environments, where consistency and governance outweigh experimentation.

- AI combined with rules works better than AI alone

- Limited memory reduces risk

- Ongoing monitoring is essential

In mental health contexts, predictability builds trust faster than innovation.
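One way to make “limited memory” concrete is a bounded buffer that keeps only the last few turns. The sketch below assumes a hypothetical `ShortMemory` class and a limit of three turns; both are illustrative choices, not a recommendation for any specific product.

```python
from collections import deque


class ShortMemory:
    """Keeps only the most recent turns; older ones are dropped automatically."""

    def __init__(self, max_turns: int = 3) -> None:
        # deque with maxlen discards the oldest item when a new one is appended.
        self._turns = deque(maxlen=max_turns)

    def add(self, role: str, text: str) -> None:
        self._turns.append((role, text))

    def context(self) -> list:
        """Return the retained turns, oldest first."""
        return list(self._turns)

    def clear(self) -> None:
        """Forget everything, for example when a session ends."""
        self._turns.clear()
```

Because the buffer never grows past `max_turns`, anything a user shared earlier in a long conversation is simply no longer available to the system, which limits both drift and the amount of sensitive text held at any moment.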

Step 6: Test the Hard Conversations

Standard testing isn’t enough here. Teams need to test:

- Vague messages

- Emotional or unclear inputs

- Repeated use over time

- Moments where saying less is better than saying more

These situations reveal problems early.

Step 7: Launch Slowly and Pay Attention

Mental health chatbots shouldn’t be rolled out all at once. A realistic mental health chatbot development plan assumes iteration, review, and adjustment long after the first release. In practice, that usually means:

- Small pilot releases

- Regular review of real conversations

- Changes based on actual usage, not assumptions

Most of the real shaping happens after launch. When this process is followed, the result doesn’t feel impressive on a demo slide. It feels steady, predictable and safe. And in mental health, that quiet reliability matters far more than anything flashy.



Use Cases of Mental Health Chatbots

When these tools are useful, it’s usually because they keep things simple. They don’t try to solve everything. They give someone a quiet moment to slow down, sort out what they’re feeling, and decide what to do next.

- Quick emotional check-ins: A brief check-in gives people space to name what’s going on and get a small next step. In workplaces and universities, this matters because many people hesitate to ask for help directly.

- Short coping exercises: Simple breathing routines, grounding prompts, or short reflection exercises can ease tension in the moment. The structure feels steady and predictable, which makes it easier to return to when stress builds.

- Pointing people to the right support: In large organisations, people often don’t know where to turn. A chatbot can guide them toward counselling services, an employee assistance program, or a helpline. The value is removing confusion, not replacing care.

- Practical guidance about benefits and privacy: Sometimes the barrier isn’t emotional. It’s logistical. People want to know what’s confidential, what’s covered, or how to access support discreetly. A chatbot can answer these questions without making it feel formal.

- Support for high-pressure roles: Frontline teams and shift workers rarely have time for long conversations. Quick reset exercises or optional check-ins can help them pause and regroup between demanding tasks.

- Stabilising support after stressful events: After a difficult incident, a chatbot can offer calming prompts and clearly show the next step for professional help, making support visible when it’s needed most.

- Mood tracking and noticing patterns: Some tools allow users to log mood or stress over time. The chatbot helps surface patterns gently, so people can spot changes without feeling judged or analysed.

- A guide within broader wellbeing platforms: When embedded inside a wellness app or employee portal, the chatbot helps users find the right exercise, content, or human support instead of trying to act like therapy.

What makes these use cases work isn’t sophistication. It’s accessibility. They lower the first barrier and offer a quiet starting point when reaching out feels difficult.

Mental Health Chatbot Development Cost Breakdown

When teams ask about cost, they’re usually not hunting for a single number. They want to know where the money goes and what choices push budgets up or down. In chatbot development, cost is shaped far more by safety, scope, and governance than by how “smart” the AI sounds. Teams building AI therapy chatbots often underestimate how much of the budget is driven by compliance and escalation design.

Below is a realistic way to look at the spend.

What Drives the Cost Most

A few early decisions account for most budget differences:

- Scope and boundaries: A chatbot limited to check-ins and guided exercises costs far less than one that handles complex conversations or multiple use cases.

- Safety and escalation design: Crisis detection, escalation paths, and human handoff logic take time to design and test. They are essential, and they add cost.

- Compliance and data protection: Secure storage, consent flows, audit readiness, and access controls matter more in mental health than in most apps.

Typical Cost Ranges (High-Level)

Most projects fall somewhere between $40,000 and $400,000, depending on complexity and responsibility level.

| Project Type | Typical Cost Range | What This Usually Includes |
|---|---|---|
| Basic MVP | $40,000 – $80,000 | Simple chatbot development with basic check-ins, guided exercises, limited AI, and minimal integrations. Often used for pilots or internal programs. |
| Mid-Level Product | $80,000 – $180,000 | Structured conversations, safety logic, escalation paths, analytics, and a balanced approach to mental health AI chatbot development. Common for wellness and employer platforms. |
| Enterprise-Grade Solution | $180,000 – $400,000 | Advanced governance, compliance readiness, monitoring, reporting, integrations, and human-in-the-loop workflows. Built for healthcare providers and large organisations. |

The jump between these tiers is usually driven by safety, compliance, and long-term oversight, not by adding more AI features.

Key Benefits of Mental Health Chatbot Development

Mental health chatbots don’t help because they are smart. They help because they are there. Most people aren’t looking for therapy in the middle of a tough day. They just want a moment to slow down, sort their thoughts, or feel less alone. That’s where mental health chatbot development quietly adds value. Many of the long-term benefits of chatbot development come from presence and consistency rather than depth of conversation.

When done right, these tools don’t feel like a system. They feel like an option.

- Someone is there, even when no one else is: Mental health moments don’t wait for the right time. One quiet benefit of chatbot development is simple presence. The chatbot is there late at night, between meetings, or when reaching out to a person feels like too much.

- A place to start without pressure: Saying things out loud can feel heavy. Typing a few words feels easier. With mental health AI chatbot development, people get a low-pressure way to check in with themselves and decide what they need next.

- Catching stress before it turns into burnout: Most struggles build slowly. Small, regular check-ins help people notice patterns early. That’s why developing an AI chatbot for mental health support often helps long before a crisis appears.

- The same starting point for everyone: Support can feel uneven in large or remote teams. Mental health chatbot app development helps close that gap, giving everyone access to the same first step, wherever they are.

- Support that knows when to step aside: The best chatbots don’t try to do everything. A good mental health chatbot guides people gently and makes it clear when it’s time to speak to a real person.

- Help that doesn’t ask for commitment: Not every hard moment needs a call or an appointment. Sometimes people just need a pause. Mental health chatbots offer that space without asking for anything in return.

These benefits don’t come from clever technology. They come from restraint, presence, and knowing when less is more.


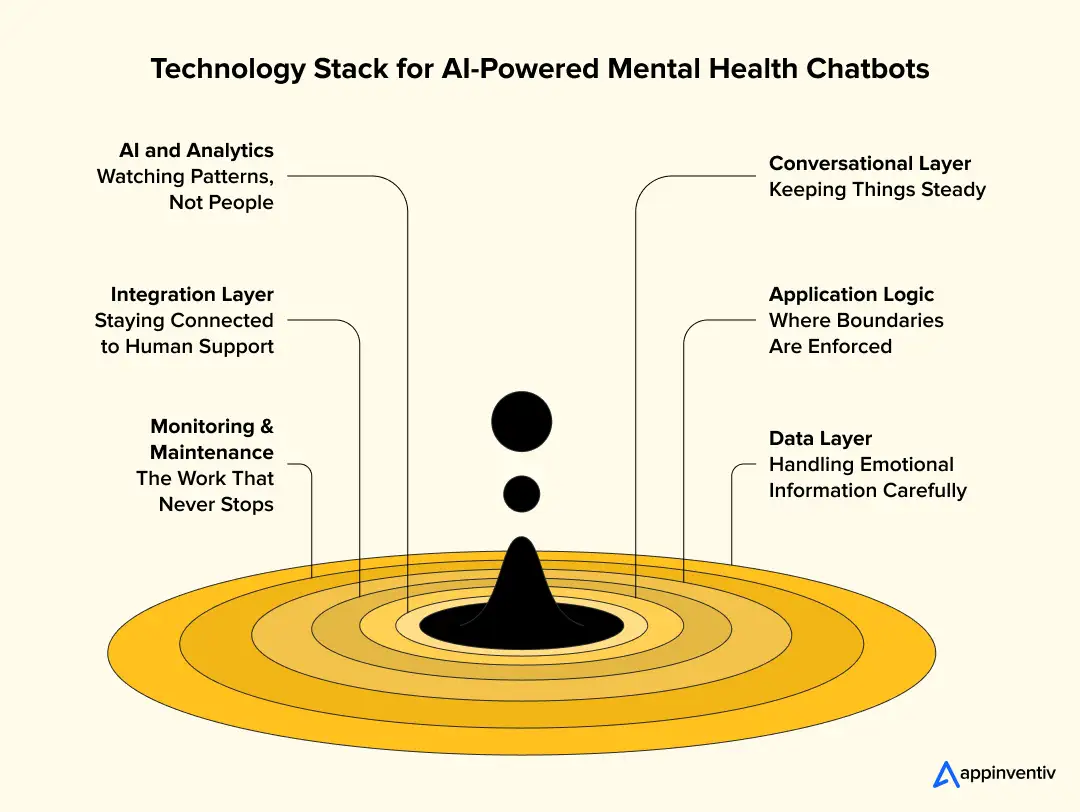

Technology Stack for AI-Powered Mental Health Chatbots

When a mental health chatbot works well, nobody thinks about the technology behind it. Users don’t notice models, layers, or systems. What they notice is that the experience feels calm, consistent, and safe. That’s why the tech stack for AI-powered mental health chatbot development is usually built with restraint. Flashy tools matter far less than reliability. At a practical level, understanding how mental health bots work means looking less at models and more at control, flow, and escalation.

Teams that have been through this before tend to make the same choice. They stop chasing what’s new and focus instead on what behaves predictably over time.

1. Conversational Layer: Keeping Things Steady

This is the part users interact with directly, and it needs to feel controlled rather than clever.

In most AI chatbot development setups, that means:

- Using a mix of NLP models and rule-based logic

- Focusing on intent detection for common, expected inputs

- Relying on predefined response paths for sensitive topics

The goal here isn’t to impress. It’s to avoid guessing and to keep conversations on safe ground.

2. Application Logic: Where Boundaries Are Enforced

Behind the interface sits the logic that decides how the chatbot behaves.

This layer typically manages:

- Conversation flow and pacing

- Safety checks and escalation triggers

- Limits on how long or how deeply a conversation continues

For teams building a chatbot for mental health, this layer often matters more than the AI model itself. It’s where judgment is encoded.
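As a rough sketch of how this layer might encode those limits, the example below tracks turn count and consecutive heavy messages and decides what happens next. The `Session` class, its thresholds, and the `is_heavy` flag (assumed to come from an upstream safety check) are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Session:
    max_turns: int = 6     # assumed cap on conversation length
    heavy_limit: int = 2   # assumed: consecutive heavy messages before handoff
    turns: int = 0
    heavy_streak: int = 0

    def next_action(self, is_heavy: bool) -> str:
        """Return "handoff", "wrap_up", or "continue" for the latest message."""
        self.turns += 1
        # A calm message resets the streak; a heavy one extends it.
        self.heavy_streak = self.heavy_streak + 1 if is_heavy else 0
        # Escalation is checked first so it always wins over the length limit.
        if self.heavy_streak >= self.heavy_limit:
            return "handoff"
        if self.turns >= self.max_turns:
            return "wrap_up"
        return "continue"
```

Keeping this decision in plain application code, outside any language model, is what makes the behaviour easy to review, test, and audit.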

3. Data Layer: Handling Emotional Information Carefully

Mental health conversations carry a different kind of weight. The data layer needs to reflect that sensitivity.

Most responsible builds include:

- Collecting only what’s absolutely needed

- Keeping identity data separate from conversation data

- Encrypting information both in transit and at rest

In real-world chatbot development, collecting less data often leads to more trust, not less insight.
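A minimal sketch of the first two points, assuming in-memory dictionaries in place of real databases: identity details and conversation records live in separate stores, linked only by a keyed pseudonym. The store names, the `PSEUDONYM_KEY` handling, and the helper functions are illustrative assumptions; encryption in transit and at rest is not shown here and would sit underneath whatever storage is actually used.

```python
import hashlib
import hmac
import secrets

# Assumption: in a real system this key lives in a secrets manager, not in code.
PSEUDONYM_KEY = secrets.token_bytes(32)

identity_store = {}      # pseudonym -> contact details (tightly restricted access)
conversation_store = {}  # pseudonym -> minimal check-in records, no identity fields


def pseudonym_for(user_email: str) -> str:
    """Derive a stable pseudonym with HMAC-SHA256 so it cannot be reversed without the key."""
    digest = hmac.new(PSEUDONYM_KEY, user_email.lower().encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()


def register(user_email: str) -> str:
    """Store identity details once and return the pseudonym used everywhere else."""
    pid = pseudonym_for(user_email)
    identity_store[pid] = {"email": user_email}
    return pid


def log_check_in(pid: str, mood_score: int) -> None:
    """Record only what is needed: a score, not the raw message text."""
    conversation_store.setdefault(pid, []).append({"mood": mood_score})
```

With this split, someone reviewing conversation records sees scores against an opaque identifier, and re-linking them to a person requires separate access to the identity store.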

4. AI and Analytics: Watching Patterns, Not People

AI plays a quieter role here than many expect. Instead of profiling users, teams usually focus on:

- High-level tone or sentiment signals

- Noticing repeated distress patterns over time

- Flagging conversations for review, not judgment

The aim is to improve safety and quality, not to label or diagnose.
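Noticing a repeated pattern can be as simple as counting low check-ins inside a recent window. The function below is a hedged sketch: the 1-to-5 mood scale, the seven-day window, and the thresholds are assumptions for illustration, not clinically validated values, and the output is only a flag for human review.

```python
def needs_review(mood_scores, window=7, low_threshold=2, min_low_days=4):
    """Flag for human review when enough recent check-ins are low.

    mood_scores: self-reported scores on an assumed 1 (low) to 5 (high) scale,
    ordered oldest to newest.
    """
    recent = mood_scores[-window:]
    low_days = sum(1 for score in recent if score <= low_threshold)
    return low_days >= min_low_days


# Example: five of the last seven check-ins are at or below the threshold.
flagged = needs_review([4, 2, 1, 2, 3, 2, 1])
```

The function returns a plain boolean and stores nothing about why, which keeps the signal at the level of “someone should look at this” instead of a label attached to a person.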

5. Integration Layer: Staying Connected to Human Support

A mental health chatbot should never feel like a closed system. Most chatbot app development projects include:

- Clear links to helplines or crisis resources

- Chatbot integration with internal support teams or clinicians

- Simple handoff or referral mechanisms

This keeps the chatbot grounded in real care, not isolated automation. In mature chatbot app development, this connection to human-led systems is treated as a requirement, not an enhancement.

6. Monitoring and Maintenance: The Work That Never Stops

Launch is not the endpoint. Ongoing responsibility usually includes:

- Reviewing conversations for risk patterns

- Updating responses as language and expectations change

- Auditing models and flows at regular intervals

For anyone developing an AI chatbot for mental health support, this ongoing attention is part of the product itself, not a side task.

In practice, the best chatbot technology stacks are rarely the most complex ones. They’re quiet, predictable, and easy to govern. In mental health settings, that kind of reliability is what allows technology to support people without getting in the way.

Real-World Examples of Mental Health Chatbots

The easiest way to understand what works in this space is to look at tools people already rely on. Not the ones with the boldest claims, but the ones that have stayed around because they know what they’re meant to do. These are often cited as examples of successful chatbot development because they prioritise restraint over ambition.

1. Woebot – Brief, Structured, and Clear About Its Role

Woebot doesn’t try to hold long conversations. Most interactions are short. You check in. You reflect on a thought. You try a small exercise. Then you move on.

What makes Woebot stand out in mental health AI chatbot development is restraint. Much of the discussion around Woebot focuses on how clearly it defines its role and avoids overreach. It never presents itself as therapy. It’s upfront about being a support tool. That clarity is also why it has been studied in peer-reviewed research rather than just promoted as another wellness app.

2. Wysa – AI Support with a Clear Human Backstop

Wysa is commonly used by employers and wellbeing programmes. The chatbot guides users through simple exercises to help with stress and low mood. The tone stays calm. Conversations stay guided.

What matters most here is the handoff. When the chatbot isn’t enough, users can move to human coaching. For teams building a chatbot for mental health, Wysa is a good example of how an AI chatbot in healthcare can help first without pretending to be the full answer.

3. Youper – Less Talking, More Awareness

Youper takes a quieter approach. It doesn’t try to keep people chatting. Instead, it encourages users to log their feelings and look back at patterns over time.

For anyone developing an AI chatbot for mental health support, Youper shows that usefulness doesn’t always come from conversation. Sometimes it comes from helping people notice things they usually overlook.

4. Tess (X2AI) – Built for Institutions, Not Individuals Alone

Tess is usually deployed by universities, healthcare providers, and large organisations. It’s part of broader mental health programmes, not a standalone app someone stumbles upon.

In mental health chatbot development, Tess is often cited for working within clear boundaries. There’s oversight. There are defined use cases. The focus is on consistency and safety rather than personality.

When you step back, the pattern is simple. The chatbots that last don’t try to be everything. They don’t overpromise. They stay within their role and fit into a wider support system. That’s what makes them believable, and that’s why people keep using them.


How Enterprises Measure Mental Health Chatbot ROI

Most organisations don’t look at these tools as a way to cut costs. They look at whether people are actually getting help sooner and whether support feels easier to reach.

- Are people using it more than once? The first sign of value is simple. Do employees come back? Repeat check-ins and completed sessions usually mean the tool feels safe and worth returning to.

- Is it making support easier to reach? Late-night usage, engagement from remote teams, and first-time help seekers suggest the chatbot is lowering the hesitation people feel before reaching out.

- Is it reducing routine workload? When the chatbot answers common questions or guides users to the right resource, HR and support teams spend less time redirecting requests and more time helping where it matters.

- Do people follow through to human support? It’s important to see whether users actually connect with counsellors, helplines, or internal programs after being guided there. A smooth handoff matters more than the length of the conversation.

- Are wellbeing programs seeing more participation? Higher counselling uptake, better engagement with wellbeing initiatives, and simple feedback like “this helped” are early signs that the system is doing its job.

- Is trust being protected? Clear consent, careful data handling, and safe responses reduce compliance risk and help people feel comfortable using the tool.

Over time, some organisations also notice improvements in engagement, retention, or burnout indicators. The real return comes from helping people reach support earlier and with less hesitation.
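Two of the signals above, repeat usage and follow-through to human support, reduce to simple ratios. The sketch below assumes hypothetical inputs (a list of pseudonymous user IDs, one per session, and two referral counts); the function names and data shapes are illustrative and not tied to any analytics product.

```python
from collections import Counter


def repeat_usage_rate(session_user_ids):
    """Share of distinct users who came back for more than one session."""
    counts = Counter(session_user_ids)
    if not counts:
        return 0.0
    returning = sum(1 for n in counts.values() if n > 1)
    return returning / len(counts)


def handoff_follow_through(referrals_offered, referrals_completed):
    """Share of offered referrals where the user actually reached human support."""
    if referrals_offered == 0:
        return 0.0
    return referrals_completed / referrals_offered
```

Both figures work on aggregate, pseudonymous counts, so they can be reported without exposing what any individual said.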

Integrating Mental Health Chatbots into Human-Led Care Models

Mental health chatbots are most effective when they are not asked to carry the weight alone. In real settings, mental health support works best when chatbots sit quietly alongside human care, not in front of it. People don’t want to be “handled” by software when things get serious. They want support that knows when to listen and when to step aside.

That’s why integration matters more than intelligence.

Here’s how that integration typically works in practice.

- Chatbots as the first point of contact: For many users, a chatbot is the easiest place to start. It lowers the barrier to entry and helps people articulate what they’re feeling before speaking to someone. This is often the starting point in building a chatbot for mental health programs within organisations.

- Clear handoff to real people: When a conversation signals distress, repetition, or risk, the chatbot should not push forward. Instead, it should make the next step obvious. That might mean suggesting a counsellor, connecting to a helpline, or guiding the user to internal support. Strong chatbot app development treats this handoff as a core feature.

- Supporting clinicians, not replacing them: In human-led care models, chatbots often help clinicians by handling routine check-ins or gathering context ahead of sessions. This allows human time to be spent where it matters most. In developing an AI chatbot for mental health support, this balance is what keeps systems trusted.

- Visibility without surveillance: Integration does not mean constant monitoring. Ethical systems share insights carefully, focusing on patterns rather than personal detail. This protects trust while still allowing care teams to intervene when needed.

- Fitting into existing care workflows: The best integrations don’t force people to change how they work. Chatbots should fit into existing mental health programs, employee assistance plans, or clinical pathways, rather than creating parallel systems.

In practice, mental health chatbots don’t replace human care. They make it easier to reach. When integration is done well, the chatbot feels like a doorway, not a destination. And that’s exactly the role it should play.

Regulatory, Ethical, and Compliance Considerations for Mental Health Chatbots

When a chatbot deals with mental health, small missteps matter. A message that feels too confident, a response that pushes too far, or unclear data use can quickly break trust. That’s why regulation and ethics are not side topics in health chatbot development. They shape how safe and believable the product feels. In practice, mental health chatbot compliance becomes a design constraint that shapes conversations, data handling, and escalation paths.

Teams working on AI chatbot development often realise this early. The technology may be solid, but the real challenge is making sure the chatbot behaves responsibly in sensitive situations.

1. Understand the Rules That Apply

Mental health chatbots may not always be considered medical tools, but they still operate in close proximity to regulated areas.

In most real projects, this means aligning with:

- GDPR for consent, data minimisation, and user control

- HIPAA in the US, when protected health information is involved

- ISO 27001 or similar security standards

- Local laws that treat mental health data as highly sensitive

For teams building a chatbot for mental health, assuming a higher compliance standard is usually the safer path.

2. Ethics Show Up in Everyday Behaviour

Ethics are not just policy statements. They are visible in how the chatbot talks.

In mental health chatbot app development, this often means:

- Being clear that users are interacting with a chatbot

- Avoiding language that sounds like a diagnosis or medical advice

- Not pushing users to share more than they’re comfortable with

- Knowing when to pause or redirect the conversation

These choices decide whether users feel supported or uneasy.

3. Plan for Serious Conversations

At some point, a chatbot will encounter distress. Responsible chatbot development includes:

- Recognising when a conversation becomes heavy

- Keeping responses calm and neutral

- Clearly pointing users to helplines or human support

- Reviewing how these situations are handled over time

This planning is about readiness, not perfection.

4. Handle Mental Health Data with Care

What users share in these chats is personal and sensitive. Good mental health AI chatbot development usually focuses on:

- Collecting only necessary information

- Explaining why data is collected

- Protecting it with strong security measures

- Giving users control over their data

Careful data handling builds long-term trust.

5. Treat Compliance as Ongoing Work

Regulations change, and so do user expectations. That’s why teams need:

- Regular reviews of chatbot conversations

- Updates as laws or guidance evolve

- Clear ownership for oversight and decisions

In the end, regulation and ethics are not about slowing development. They help keep mental health chatbots grounded, reliable, and safe for real people using them.

Common Mistakes in Mental Health Chatbot Development

Most mental health chatbots don’t fail in dramatic ways. They quietly lose relevance. People stop opening them. Conversations feel off, and trust fades. In almost every case, the issue isn’t the technology. It’s how the chatbot fits into real human moments.

Here are the mistakes that tend to show up once real users enter the picture.

- Not deciding what the chatbot is not supposed to do: Many teams define what the chatbot should help with, but skip the harder question. Where should it stop? When a chatbot tries to listen, guide, reassure, and advise all at once, users sense the confusion. A clear, limited role feels safer than a broad capability.

- Believing serious conversations are rare: Some teams design for calm check-ins and hope that’s all they’ll get. That hope doesn’t last long. People don’t warn a chatbot before sharing something heavy. If those moments aren’t planned for, responses feel clumsy or detached, which can do real harm.

- Letting responses wander too freely: Free-flowing AI replies look impressive in demos. In real conversations, they can drift. A sentence that sounds fine out of context can feel wrong when someone is vulnerable. In this space, structure and restraint usually outperform creativity.

- Asking questions before earning trust: Users notice when a chatbot starts probing too quickly. Personal questions without context feel intrusive, even when well-intentioned. Most people open up gradually. Chatbots that don’t respect that pace tend to push users away.

- Treating launch as a finish line: Once a chatbot goes live, the real work begins. Language shifts, and social context changes. Responses that felt fine six months ago may now feel outdated or tone-deaf. Teams that don’t review conversations regularly often miss problems until engagement drops.

None of these mistakes is due to negligence. They come from underestimating how personal this space is. The teams that get it right usually slow down early, set firm boundaries, and stay involved long after the chatbot is released. That ongoing attention is what keeps a mental health chatbot feeling safe and relevant to the people using it.
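To make the first three mistakes concrete, here is a minimal Python sketch of a chatbot that knows where it stops. Everything in it is an assumption made for illustration: the `Route` names, the phrase lists, and the reply texts are hypothetical, and simple phrase matching stands in for the clinically reviewed detection a real product would need. It shows only the shape of the idea: check the most serious case first, decline what is out of scope, and answer from a small set of pre-reviewed replies rather than free-form text.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    ESCALATE = "escalate"          # hand off to human support
    OUT_OF_SCOPE = "out_of_scope"  # decline and redirect
    SUPPORT = "support"            # stay within the limited, structured role


# Hypothetical phrase lists, for illustration only. Real detection needs
# clinical review and far more than substring matching.
ESCALATION_PHRASES = ("hurt myself", "end it all", "no reason to go on")
OUT_OF_SCOPE_PHRASES = ("diagnose", "medication", "dosage")

# Fixed, pre-reviewed replies instead of free-flowing generated text.
REPLIES = {
    Route.ESCALATE: "That sounds really heavy. I'd like to connect you with a person who can help right now.",
    Route.OUT_OF_SCOPE: "That's not something I can advise on. A clinician is the right person to ask.",
    Route.SUPPORT: "Thanks for sharing that. Would you like to try a short check-in exercise?",
}


@dataclass(frozen=True)
class Decision:
    route: Route
    reply: str


def decide(message: str) -> Decision:
    """Pick a route for one user message, most serious case first."""
    text = message.lower()
    if any(phrase in text for phrase in ESCALATION_PHRASES):
        route = Route.ESCALATE
    elif any(phrase in text for phrase in OUT_OF_SCOPE_PHRASES):
        route = Route.OUT_OF_SCOPE
    else:
        route = Route.SUPPORT
    return Decision(route, REPLIES[route])
```

The ordering is the design choice that matters here. Because escalation is checked before anything else, a message that mixes an out-of-scope question with a sign of distress is still routed to a human.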

Future Trends in Mental Health Chatbot Development

Mental health chatbots aren’t heading toward some futuristic breakthrough moment. They’re settling down. The next phase is quieter. Less ambitious and more careful. And honestly, that’s a good thing for the development of mental health chatbots.

What’s changing is not the tech. It’s the attitude.

- Clearer boundaries in conversations: Early chatbots tried to keep a conversation going no matter what. That’s starting to look like a mistake. Newer systems are more comfortable stopping, redirecting, or saying less. Users tend to trust that more.

- AI as an entry point, not a destination: The chatbot is becoming the first step, not the end goal. A place to pause, reflect, and notice patterns. When things get complicated, human support takes over. This shift is already visible across mental health AI chatbot development projects.

- More emphasis on early signals: Instead of reacting to distress, chatbots are being used to notice small changes over time. Missed sleep, repeated stress, subtle shifts. Nothing dramatic, just enough to prompt awareness.

- Less data, more restraint: The trend isn’t smarter profiling. It’s simpler data use. Clear consent and fewer assumptions. People are paying attention now, and chatbots that over-collect won’t last.

- Tighter integration with real-world support: Chatbots are increasingly built into existing systems rather than operating on their own. Workplace programs, care teams, and helplines provide the context that keeps expectations realistic.

The direction is fairly obvious if you strip away the hype. Mental health chatbots aren’t trying to become more impressive. They’re trying to become more acceptable. The teams that understand that are building things people actually keep using.
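The “early signals” idea above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a clinical method: the function name `sleep_drift`, the seven-day window, and the one-hour threshold are all hypothetical, and the input is assumed to be self-reported sleep hours that the user has explicitly consented to share.

```python
from statistics import mean


def sleep_drift(hours: list[float], window: int = 7, min_drop: float = 1.0) -> bool:
    """Return True when recent average sleep is at least `min_drop` hours
    below the average of the earlier entries."""
    if len(hours) < 2 * window:
        # Not enough history yet: stay quiet rather than guess.
        return False
    baseline = mean(hours[:-window])  # everything before the recent window
    recent = mean(hours[-window:])    # the most recent `window` entries
    return baseline - recent >= min_drop
```

A result of `True` would only prompt a gentle check-in, in keeping with the restraint described above. It is a nudge toward awareness, not a diagnosis.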

Prepare for the Next Phase of Mental Health AI

Build a chatbot aligned with emerging regulations, human-in-the-loop care models, and long-term trust, not short-term trends.

Building Responsible Mental Health Chatbots with Appinventiv

Building a mental health chatbot is less about clever AI and more about responsibility. In mental health, people notice tone, timing, and intent very quickly. That’s why Appinventiv’s mental health app development services focus on building solutions that respect boundaries and handle sensitive interactions with care.

Appinventiv’s experience in healthcare solutions brings a useful perspective here. Solutions like Soniphi, a vitality and health monitoring app, and Health-e-People, a health assessment platform, were built around ongoing engagement, structured data, and trust. That same thinking applies to mental health chatbots. The goal is not constant conversation, but steady, predictable support that users feel comfortable returning to.

For organisations considering a mental health chatbot, the real challenge is building something people trust over time. Appinventiv approaches this by combining healthcare-grade engineering with restraint, clear escalation paths, and long-term oversight. Backed by deep expertise in healthcare application development services, the team focuses on practical, compliant, and sustainable solutions for real-world care environments.

If you’re exploring a responsible mental health chatbot, speak with Appinventiv’s healthcare team to understand what’s practical, compliant, and sustainable for your product.

FAQs

Q. How long does it take to develop a mental health chatbot?

A. There’s no one-size-fits-all answer. A simple mental health chatbot can come together in a couple of months. Once you add safety checks, escalation paths, and compliance review, the timeline usually stretches to three to six months. In mental health chatbot development, taking a bit longer often leads to a much safer product.

Q. What are the best platforms for mental health chatbot development?

A. Most mental health chatbots live on mobile apps or the web, mainly because people want privacy and easy access. Some organisations also embed them into internal tools or wellbeing platforms. The “best” platform is usually the one users already trust and feel comfortable opening.

Q. What tools can we use to build a mental health chatbot?

A. There’s no single tool that does it all. Teams usually combine conversational AI frameworks, secure cloud services, and monitoring tools. When building a chatbot for mental health, the safety layers and oversight matter just as much as the AI itself.

Q. What is a mental health chatbot?

A. A mental health chatbot is a digital support tool that helps people pause, reflect, or work through simple wellbeing exercises. It’s not a therapist, and it’s not meant to replace human care. That boundary is a core part of responsible mental health AI chatbot development.

Q. How do you create a mental health chatbot?

A. The work starts before any code is written. Teams need to agree on what the chatbot should never do, not just what it can do. From there, conversation flows, safety rules, and data handling are designed first, with technology following later.

Q. How do you use a mental health chatbot effectively?

A. Mental health chatbots work best as a starting point. People use them to check in, organise their thoughts, or slow things down. When the situation feels overwhelming, the chatbot should guide them to human support rather than trying to handle everything.