Liquid AI has introduced LFM2-2.6B-Exp, an experimental checkpoint of its LFM2-2.6B language model that is trained with pure reinforcement learning on top of the existing LFM2 stack. The goal is simple: improve instruction following, knowledge tasks, and math for a small 3B class model that still targets on device and edge deployment.

Where LFM2-2.6B-Exp Fits in the LFM2 Family

LFM2 is the second generation of Liquid Foundation Models. It is designed for efficient deployment on phones, laptops, and other edge devices. Liquid AI describes LFM2 as a hybrid model that combines short range LIV convolution blocks with grouped query attention blocks, controlled by multiplicative gates.

The family includes 4 dense sizes, LFM2-350M, LFM2-700M, LFM2-1.2B, and LFM2-2.6B. All share a context length of 32,768 tokens, a vocabulary size of 65,536, and bfloat16 precision. The 2.6B model uses 30 layers, with 22 convolution layers and 8 attention layers. Each size is trained on a 10 trillion token budget.

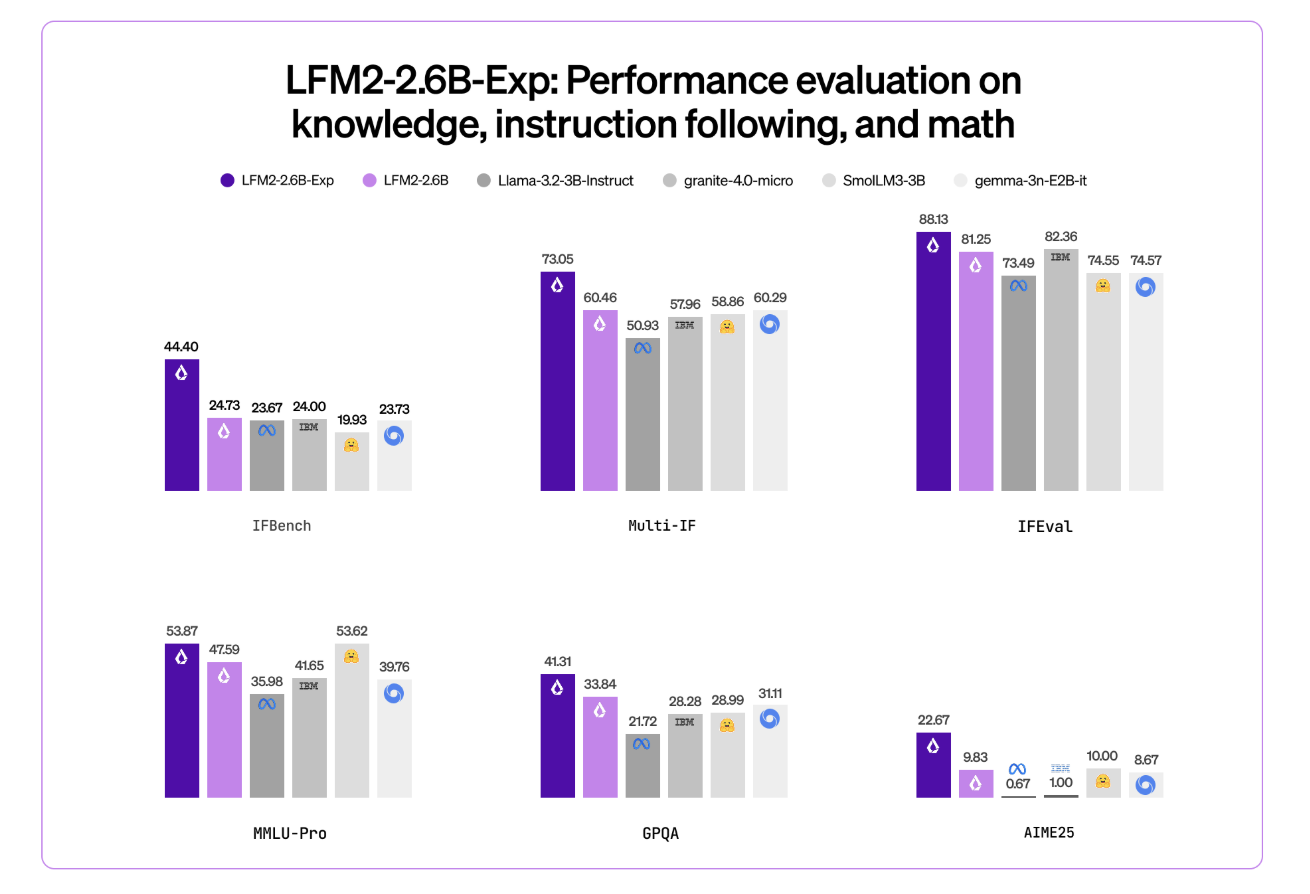

LFM2-2.6B is already positioned as a high efficiency model. It reaches 82.41 percent on GSM8K and 79.56 percent on IFEval. This places it ahead of several 3B class models such as Llama 3.2 3B Instruct, Gemma 3 4B it, and SmolLM3 3B on these benchmarks.

LFM2-2.6B-Exp keeps this architecture. It reuses the same tokenization, context window, and hardware profile. The checkpoint focuses only on changing behavior through a reinforcement learning stage.

Pure RL on Top of a Pretrained, Aligned Base

This checkpoint is built on LFM2-2.6B using pure reinforcement learning. It is specifically trained on instruction following, knowledge, and math.

The underlying LFM2 training stack combines several stages. It includes very large scale supervised fine tuning on a mix of downstream tasks and general domains, custom Direct Preference Optimization with length normalization, iterative model merging, and reinforcement learning with verifiable rewards.

But what exactly does ‘pure reinforcement learning’ mean? LFM2-2.6B-Exp starts from the existing LFM2-2.6B checkpoint and then goes through a sequential RL training schedule. It begins with instruction following, then extends RL training to knowledge oriented prompts, math, and a small amount of tool use, without an additional SFT warm up or distillation step in that final phase.

The important point is that LFM2-2.6B-Exp does not change the base architecture or pre training. It changes the policy through an RL stage that uses verifiable rewards, on a targeted set of domains, on top of a model that is already supervised and preference aligned.
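To make "verifiable rewards" concrete, here is a minimal Python sketch of the kind of programmatic checker such an RL stage could score completions with. The function names, the constraint types, and the binary 0/1 scoring are illustrative assumptions, not Liquid AI's actual reward code.

```python
import re


def math_reward(completion: str, reference: str) -> float:
    """Return 1.0 if the last number in the completion equals the reference answer."""
    # Strip thousands separators so "1,234" is read as a single number.
    numbers = re.findall(r"-?\d+(?:\.\d+)?", completion.replace(",", ""))
    if not numbers:
        return 0.0
    return 1.0 if float(numbers[-1]) == float(reference) else 0.0


def instruction_reward(completion: str, max_words: int, must_include: str) -> float:
    """Return 1.0 only if every (hypothetical) constraint in the prompt is met."""
    within_limit = len(completion.split()) <= max_words
    has_keyword = must_include.lower() in completion.lower()
    return 1.0 if within_limit and has_keyword else 0.0
```

The point of such checkers is that the reward comes from a deterministic test rather than a learned preference model, which is what makes it "verifiable".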

Benchmark Signal, Especially On IFBench

The Liquid AI team highlights IFBench as the headline metric. IFBench is an instruction following benchmark that checks how reliably a model follows complex, constrained instructions. On this benchmark, LFM2-2.6B-Exp surpasses DeepSeek R1-0528, which is reported as 263 times larger in parameter count.

LFM2 models provide strong performance across a standard set of benchmarks such as MMLU, GPQA, IFEval, GSM8K, and related suites. The 2.6B base model already competes well in the 3B segment. The RL checkpoint then pushes instruction following and math further, while staying in the same 3B parameter budget.

Architecture and Capabilities That Matter

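The architecture arranges double gated short range LIV convolution blocks and grouped query attention blocks in a hybrid stack. For the 2.6B model, that is 22 convolution layers and 8 attention layers out of 30. Because only the attention layers hold a KV cache that grows with sequence length, this design reduces KV cache cost and keeps inference fast on consumer GPUs and NPUs.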
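A rough back-of-the-envelope calculation shows why fewer attention layers shrink the KV cache. The sketch below uses the 2.6B layer counts quoted earlier (30 layers, 8 of them attention) and the 32,768 token context. The `kv_heads` and `head_dim` values are placeholder assumptions, not published LFM2 figures, and the small fixed-size state kept by convolution layers is ignored.

```python
def kv_cache_bytes(attn_layers, seq_len, kv_heads=8, head_dim=64, bytes_per_value=2):
    """Bytes needed to cache keys and values for one sequence.

    kv_heads and head_dim are hypothetical; bytes_per_value=2 matches bfloat16.
    """
    # Factor of 2 covers the separate key and value tensors.
    return 2 * attn_layers * kv_heads * head_dim * seq_len * bytes_per_value


context = 32_768
hybrid = kv_cache_bytes(attn_layers=8, seq_len=context)           # hybrid stack
all_attention = kv_cache_bytes(attn_layers=30, seq_len=context)   # hypothetical baseline

print(f"hybrid:        {hybrid / 2**20:.0f} MiB")
print(f"all attention: {all_attention / 2**20:.0f} MiB")
print(f"ratio:         {hybrid / all_attention:.3f}")
```

Whatever the true head configuration is, the ratio depends only on the layer counts: the hybrid stack caches 8/30 of what an all-attention stack of the same depth would.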

The pre training mixture uses roughly 75 percent English, 20 percent multilingual data, and 5 percent code. The supported languages include English, Arabic, Chinese, French, German, Japanese, Korean, and Spanish.

LFM2 models expose a ChatML like template and native tool use tokens. Tools are described as JSON between dedicated tool list markers. The model then emits Python like calls between tool call markers and reads tool responses between tool response markers. This structure makes the model suitable as the agent core for tool calling stacks without custom prompt engineering.
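The tool calling flow described above can be sketched in a few lines of Python. The marker strings and the system prompt wording here are assumptions for illustration and should be checked against the chat template that ships with the tokenizer. `build_prompt` and `parse_tool_calls` are hypothetical helpers, not part of any Liquid AI library.

```python
import ast
import json
import re

# Assumed marker strings; verify against the model's own chat template.
TOOL_LIST = ("<|tool_list_start|>", "<|tool_list_end|>")
TOOL_CALL = ("<|tool_call_start|>", "<|tool_call_end|>")


def build_prompt(tools, user_message):
    """Render a ChatML like prompt with the JSON tool list in the system turn."""
    system = "List of tools: " + TOOL_LIST[0] + json.dumps(tools) + TOOL_LIST[1]
    return (
        f"<|im_start|>system\n{system}<|im_end|>\n"
        f"<|im_start|>user\n{user_message}<|im_end|>\n"
        "<|im_start|>assistant\n"
    )


def parse_tool_calls(model_output):
    """Extract Python like calls, e.g. [get_weather(city="Paris")], as (name, kwargs)."""
    pattern = re.escape(TOOL_CALL[0]) + r"(.*?)" + re.escape(TOOL_CALL[1])
    calls = []
    for block in re.findall(pattern, model_output, flags=re.DOTALL):
        tree = ast.parse(block.strip(), mode="eval")
        nodes = tree.body.elts if isinstance(tree.body, ast.List) else [tree.body]
        for node in nodes:
            if isinstance(node, ast.Call) and isinstance(node.func, ast.Name):
                # literal_eval only accepts literals, so no model output is executed.
                kwargs = {
                    kw.arg: ast.literal_eval(kw.value)
                    for kw in node.keywords
                    if kw.arg is not None
                }
                calls.append((node.func.id, kwargs))
    return calls
```

Parsing with `ast` rather than `eval` keeps the agent loop from executing whatever the model emits. The caller decides which function names it is willing to dispatch.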

LFM2-2.6B, and by extension LFM2-2.6B-Exp, is also the only model in the family that enables dynamic hybrid reasoning through special think tokens for complex or multilingual inputs. That capability remains available because the RL checkpoint does not change tokenization or architecture.

Key Takeaways

- LFM2-2.6B-Exp is an experimental checkpoint of LFM2-2.6B that adds a pure reinforcement learning stage on top of a pretrained, supervised and preference aligned base, targeted at instruction following, knowledge tasks, and math.

- The LFM2-2.6B backbone uses a hybrid architecture that combines double gated short range LIV convolution blocks and grouped query attention blocks, with 30 layers, 22 convolution layers and 8 attention layers, 32,768 token context length, and a 10 trillion token training budget at 2.6B parameters.

- LFM2-2.6B already achieves strong benchmark scores in the 3B class, 82.41 percent on GSM8K and 79.56 percent on IFEval, and the LFM2-2.6B-Exp RL checkpoint further improves instruction following and math performance without changing the architecture or memory profile.

- Liquid AI reports that on IFBench, an instruction following benchmark, LFM2-2.6B-Exp surpasses DeepSeek R1-0528 even though the latter is reported as 263 times larger in parameter count, which points to strong performance per parameter for constrained deployment settings.

- LFM2-2.6B-Exp is released on Hugging Face with open weights under the LFM Open License v1.0 and is supported through Transformers, vLLM, llama.cpp GGUF quantizations, and ONNXRuntime, making it suitable for agentic systems, structured data extraction, retrieval augmented generation, and on device assistants where a compact 3B model is required.
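For the Transformers route mentioned in the last takeaway, a minimal loading sketch might look like the following. The repository name `LiquidAI/LFM2-2.6B-Exp` is an assumption based on the model name and should be verified on Hugging Face. The code also assumes a recent `transformers` release with LFM2 support, plus `torch` and `accelerate` for `device_map="auto"`.

```python
MODEL_ID = "LiquidAI/LFM2-2.6B-Exp"  # assumed repository name; verify before use


def build_messages(question):
    """Wrap a single user question in the chat message format."""
    return [{"role": "user", "content": question}]


def generate(question, max_new_tokens=256):
    """Load the checkpoint and return the model's reply to one question."""
    # Imported lazily so the helper above works without the heavy dependencies.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID, torch_dtype="bfloat16", device_map="auto"
    )
    inputs = tokenizer.apply_chat_template(
        build_messages(question),
        add_generation_prompt=True,
        tokenize=True,
        return_dict=True,
        return_tensors="pt",
    ).to(model.device)
    output = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Drop the prompt tokens so only the newly generated reply is decoded.
    prompt_length = inputs["input_ids"].shape[-1]
    return tokenizer.decode(output[0][prompt_length:], skip_special_tokens=True)
```

Calling `generate("Summarize the LFM2 architecture in two sentences.")` downloads the weights on first use. Sampling settings are left at library defaults here, so check the model card for recommended values.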
