In this article, you will learn how to move beyond Andrew Ng’s machine learning course by rebuilding your mental model for neural networks, shifting from algorithms to architectures, and practicing with real, messy data and language models.

Topics we will cover include:

- Reframing representation learning and mastering backpropagation as information flow.

- Understanding architectures and pipelines as composable systems.

- Working at data scale, instrumenting experiments, and selecting projects that stretch you.

Let’s break it down.

Leveling Up Your Machine Learning: What To Do After Andrew Ng’s Course

Getting to “Start”

Finishing Andrew Ng’s machine learning course can feel like a strange moment. You understand linear regression, logistic regression, bias–variance trade-offs, and why gradient descent works, yet modern machine learning conversations can seem like they’re happening in another universe.

Transformers, embeddings, fine-tuning, diffusion, large language model (LLM) agents: none of that was on the syllabus. The gap isn’t a failure of the course; it’s a mismatch between foundational education and where the field jumped next.

What you need now is not another grab bag of algorithms, but a deliberate progression that turns classical intuition into neural fluency. This is where machine learning stops being a set of formulas and starts behaving like a system you can reason about, debug, and extend.

Rebuilding Your Mental Model for Neural Networks

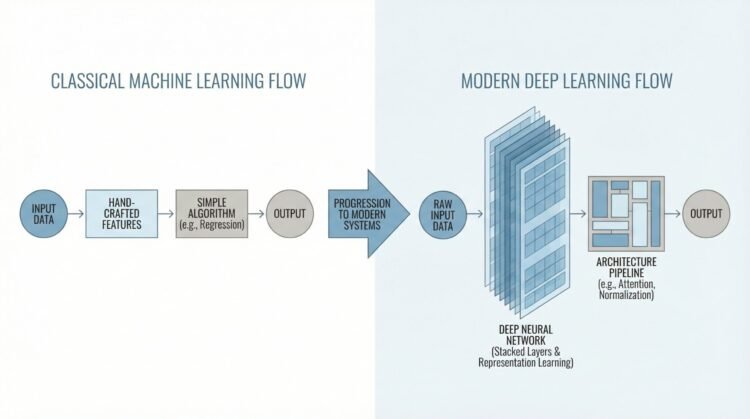

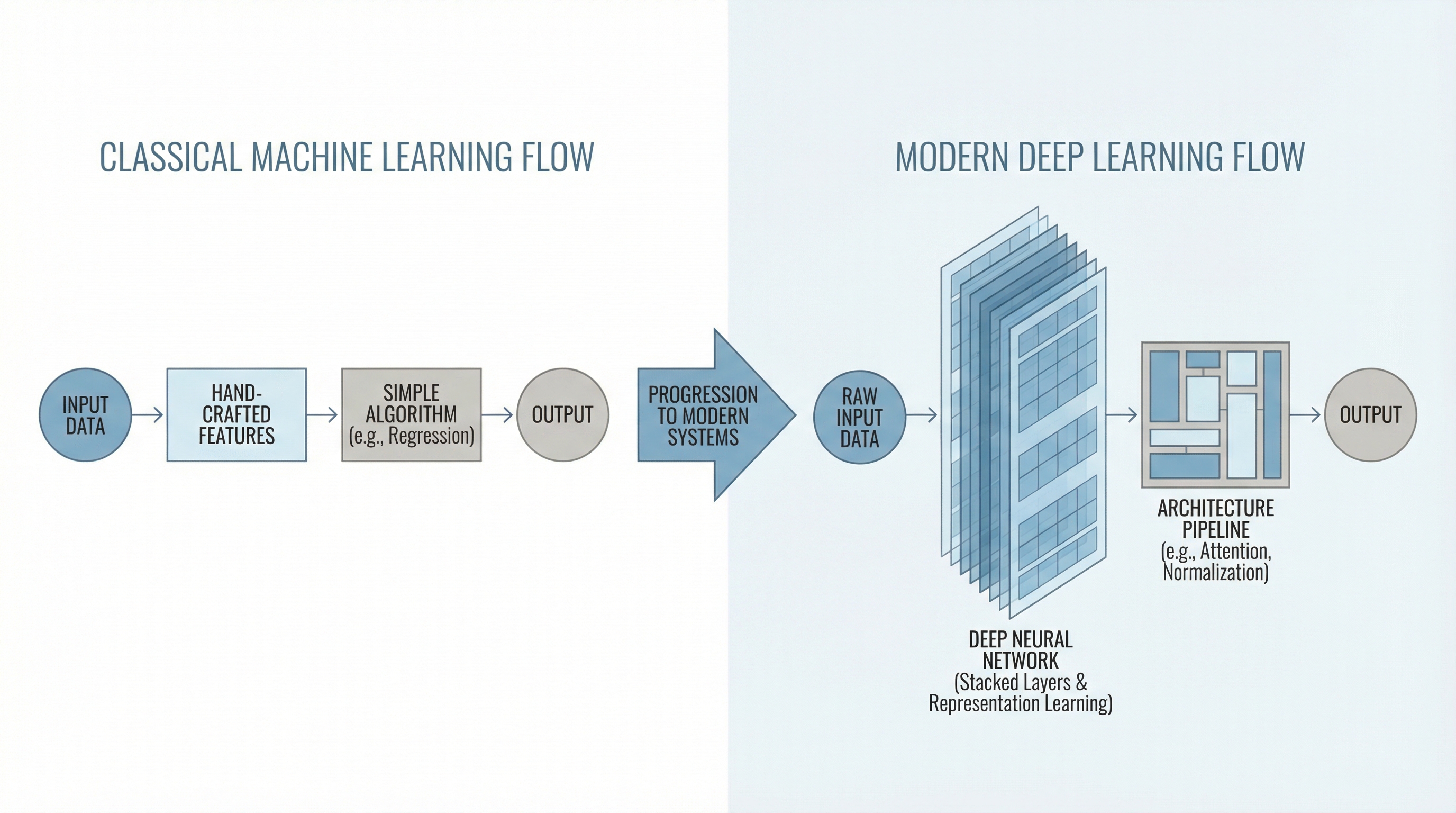

Traditional machine learning teaches you to think in terms of features, objective functions, and optimization. Neural networks ask you to hold the same ideas, but at a different scale and with more abstraction.

The first step forward is not memorizing architectures, but reframing how representation learning works. Instead of hand-engineering features, you are learning transformations that invent features for you, layer by layer. This shift sounds obvious, but it changes how you debug, evaluate, and improve models.

Spend time deeply understanding backpropagation in multilayer networks, not just as an algorithm but as a flow of information and blame assignment. When a network fails, the question is rarely “Which model should I use?” and more often “Where did learning collapse?” Vanishing gradients, dead neurons, saturation, and initialization issues all live here. If this level of understanding is opaque, everything built on top of it stays mysterious.
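
To make “Where did learning collapse?” concrete, here is a minimal sketch of vanishing gradients, assuming a deep stack of sigmoid layers with small random weights. The depth, width, and weight scale are illustrative choices, not recommendations, and `layer_gradient_norms` is a hypothetical helper name.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def layer_gradient_norms(depth=20, width=32, weight_scale=0.5, seed=0):
    """Return the gradient norm reaching each layer's input, first layer first."""
    rng = np.random.default_rng(seed)
    weights = [
        rng.normal(0.0, weight_scale / np.sqrt(width), (width, width))
        for _ in range(depth)
    ]

    # Forward pass, keeping each layer's activation for the backward pass.
    a = rng.normal(size=width)
    activations = []
    for W in weights:
        a = sigmoid(W @ a)
        activations.append(a)

    # Backward pass, starting from a unit-norm gradient at the output.
    grad = np.ones(width) / np.sqrt(width)
    norms = []
    for W, a in zip(reversed(weights), reversed(activations)):
        # Chain rule: through the sigmoid (a * (1 - a)), then through W.
        grad = W.T @ (grad * a * (1.0 - a))
        norms.append(float(np.linalg.norm(grad)))
    return norms[::-1]

norms = layer_gradient_norms()
print(f"gradient norm at first layer: {norms[0]:.3e}")
print(f"gradient norm at last layer:  {norms[-1]:.3e}")
```

Because the sigmoid derivative never exceeds 0.25, each layer shrinks the signal, so the first layers receive almost no blame. Swapping the activation or the initialization scale and rerunning is a quick way to see why those choices matter.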

Frameworks like PyTorch help, but they can also hide essential mechanics. Reimplementing a small neural network from scratch, even once, forces clarity. Suddenly, tensor shapes matter. Activation choices stop being arbitrary. Loss curves become diagnostic tools instead of charts you merely hope go down. This is where intuition begins to form.
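
As one possible starting point for that exercise, here is a small NumPy sketch of a two-layer network with hand-written backpropagation. The toy target (the product of two inputs), the layer sizes, and the learning rate are assumptions chosen only to keep the example short.

```python
import numpy as np

def init_params(n_in, n_hidden, n_out, seed=0):
    rng = np.random.default_rng(seed)
    return {
        "W1": rng.normal(0.0, 1.0 / np.sqrt(n_in), (n_in, n_hidden)),
        "b1": np.zeros(n_hidden),
        "W2": rng.normal(0.0, 1.0 / np.sqrt(n_hidden), (n_hidden, n_out)),
        "b2": np.zeros(n_out),
    }

def forward(params, X):
    h = np.tanh(X @ params["W1"] + params["b1"])  # (batch, n_hidden)
    y_hat = h @ params["W2"] + params["b2"]       # (batch, n_out)
    return h, y_hat

def loss_and_grads(params, X, y):
    """Half mean squared error and its gradients, derived by hand."""
    h, y_hat = forward(params, X)
    err = y_hat - y
    loss = 0.5 * np.mean(np.sum(err ** 2, axis=1))
    d_y = err / X.shape[0]              # dLoss/dy_hat
    d_h = d_y @ params["W2"].T          # blame passed back to the hidden layer
    d_z = d_h * (1.0 - h ** 2)          # through tanh: d tanh(z)/dz = 1 - tanh(z)^2
    grads = {
        "W2": h.T @ d_y, "b2": d_y.sum(axis=0),
        "W1": X.T @ d_z, "b1": d_z.sum(axis=0),
    }
    return float(loss), grads

def train(params, X, y, lr=0.1, steps=200):
    """Plain full-batch gradient descent; updates params in place."""
    losses = []
    for _ in range(steps):
        loss, grads = loss_and_grads(params, X, y)
        losses.append(loss)
        for name in params:
            params[name] -= lr * grads[name]
    return losses

rng = np.random.default_rng(1)
X = rng.uniform(-1.0, 1.0, (64, 2))
y = X[:, :1] * X[:, 1:]                 # toy target a linear model cannot fit
params = init_params(2, 8, 1)
losses = train(params, X, y)
print(f"loss: {losses[0]:.4f} -> {losses[-1]:.4f}")
```

A useful habit while writing something like this is to compare one entry of `grads` against a finite-difference estimate of the loss. If the two disagree, the bug is in the backward pass, not in the optimizer.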

Moving From Algorithms to Architectures

Andrew Ng’s course trains you to select algorithms based on data properties. Modern machine learning shifts decision-making toward architectures. Convolutional networks encode spatial assumptions. Recurrent models encode sequence dependencies. Transformers assume attention is the primitive worth scaling. Understanding these assumptions is more important than memorizing model diagrams.

Start by studying why certain architectures replaced others. CNNs didn’t win because they were fashionable, but because weight sharing and locality aligned with visual structure. Transformers didn’t dominate language because recurrence was broken, but because attention lets training run in parallel across sequence positions and scales better with data and compute. Every architecture is a hypothesis about structure in data. Learn to read them that way.
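
The weight-sharing argument can be seen in a few lines. This is a rough one-dimensional sketch, assuming a hand-picked three-tap kernel in place of a learned one; `conv1d_valid` is a hypothetical helper, not a library function.

```python
import numpy as np

def conv1d_valid(signal, kernel):
    """Slide one shared kernel across the signal (cross-correlation, no padding)."""
    k = len(kernel)
    return np.array([signal[i:i + k] @ kernel for i in range(len(signal) - k + 1)])

signal = np.zeros(12)
signal[3:5] = 1.0                       # a small bump starting at index 3
kernel = np.array([-1.0, 0.0, 1.0])     # crude edge detector: 3 parameters in total

out = conv1d_valid(signal, kernel)
out_shifted = conv1d_valid(np.roll(signal, 4), kernel)

# Moving the bump by 4 positions moves the response by 4 positions,
# because the same detector is reused at every location.
print(np.array_equal(out_shifted, np.roll(out, 4)))

# A dense layer mapping the same 12 inputs to 10 outputs needs one weight per pair.
dense_params = len(signal) * len(out)
conv_params = len(kernel)
print(dense_params, "dense weights vs", conv_params, "shared weights")
```

The convolution encodes the hypothesis that a useful pattern looks the same wherever it appears. When that hypothesis holds, the model needs far fewer parameters and far less data to learn it.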

This is also the moment to stop thinking in terms of single models and start thinking in terms of pipelines. Tokenization, embeddings, positional encoding, normalization, and decoding strategies are all part of the system. Performance gains often come from adjusting these components, not swapping out the core model. Once you see architectures as composable systems, the field starts to feel navigable rather than overwhelming.
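
To illustrate the “composable system” idea, here is a toy input pipeline in which each stage is a separate function. It assumes a whitespace tokenizer, a random embedding table, fixed sinusoidal positional encodings, and an even embedding width; real systems use learned tokenizers and trained embeddings, and all function names here are hypothetical.

```python
import numpy as np

def tokenize(text):
    """Toy tokenizer: lowercase and split on whitespace."""
    return text.lower().split()

def build_vocab(tokens):
    return {tok: i for i, tok in enumerate(sorted(set(tokens)))}

def sinusoidal_positions(seq_len, d_model):
    """Fixed sin/cos positional encodings; assumes d_model is even."""
    pos = np.arange(seq_len)[:, None]
    i = np.arange(0, d_model, 2)[None, :]
    angles = pos / (10000.0 ** (i / d_model))
    enc = np.zeros((seq_len, d_model))
    enc[:, 0::2] = np.sin(angles)
    enc[:, 1::2] = np.cos(angles)
    return enc

def layer_norm(x, eps=1e-5):
    """Normalize each token vector to zero mean and unit variance (no learned scale)."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def encode(text, vocab, embedding_table):
    ids = np.array([vocab[t] for t in tokenize(text)])
    x = embedding_table[ids]                                       # token identity
    x = x + sinusoidal_positions(len(ids), embedding_table.shape[1])  # token position
    return layer_norm(x)

sentence = "the cat saw the dog"
vocab = build_vocab(tokenize(sentence))
table = np.random.default_rng(0).normal(size=(len(vocab), 8))
x = encode(sentence, vocab, table)
print(x.shape)                       # one vector per token
print(np.allclose(x[0], x[3]))       # same word "the", different positions
```

Each stage can be swapped or inspected on its own, which is the practical payoff of thinking in pipelines: a change to tokenization or normalization is a change to the system, even when the core model is untouched.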

Learning to Work With Real Data at Scale

Classic coursework often uses clean, preprocessed datasets where the hard parts are politely removed. Real-world machine learning is the opposite. Data is messy, biased, incomplete, and constantly shifting. The faster you confront this, the faster you level up.

Modern neural models are sensitive to data distribution in ways linear models rarely are. Small preprocessing decisions can quietly dominate outcomes. Normalization choices, sequence truncation, class imbalance handling, and augmentation strategies are not peripheral concerns. They are central to performance and stability. Learning to inspect data statistically and visually becomes a core skill, not a hygiene step.
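
Two of these decisions are easy to sketch. The example below assumes tabular features and integer class labels; it fits normalization statistics on the training split only and derives inverse-frequency class weights. The helper names are hypothetical, and the weighting formula is one common heuristic among several.

```python
import numpy as np

def fit_standardizer(X_train):
    """Compute per-feature mean and std from the training split only."""
    mean = X_train.mean(axis=0)
    std = X_train.std(axis=0)
    std = np.where(std == 0, 1.0, std)   # leave constant features unscaled
    return mean, std

def standardize(X, mean, std):
    """Apply training statistics to any split (train, validation, or test)."""
    return (X - mean) / std

def inverse_frequency_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    classes, counts = np.unique(labels, return_counts=True)
    weights = len(labels) / (len(classes) * counts)
    return dict(zip(classes.tolist(), weights.tolist()))

X_train = np.array([[1.0, 10.0, 5.0],
                    [3.0, 30.0, 5.0],
                    [5.0, 50.0, 5.0]])
X_val = np.array([[2.0, 60.0, 5.0]])

mean, std = fit_standardizer(X_train)
Z_train = standardize(X_train, mean, std)
Z_val = standardize(X_val, mean, std)    # reuses training statistics, no refit

labels = np.array([0] * 90 + [1] * 10)
class_weights = inverse_frequency_weights(labels)
print(class_weights)
```

Refitting the statistics on validation or test data would leak information about those splits into preprocessing, which is exactly the kind of quiet decision that can dominate a reported result.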

You also need to get comfortable with experiments that do not converge cleanly. Training runs that diverge, stall, or behave inconsistently are normal. Instrumentation matters. Logging gradients, activations, and intermediate metrics helps you distinguish between data problems, optimization problems, and architectural limits. This is where machine learning starts to resemble engineering more than math.
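
Instrumentation does not need to be elaborate to be useful. The following sketch assumes gradients are available as a dictionary of NumPy arrays keyed by parameter name; the thresholds are arbitrary illustrative values, and both helpers are hypothetical.

```python
import math
import numpy as np

def summarize_gradients(grads, tiny=1e-7, huge=1e3):
    """Map each parameter name to (gradient norm, status label)."""
    report = {}
    for name, g in grads.items():
        norm = float(np.linalg.norm(g))
        if not math.isfinite(norm):
            status = "non-finite"      # NaN or inf somewhere in the gradient
        elif norm < tiny:
            status = "vanishing"
        elif norm > huge:
            status = "exploding"
        else:
            status = "ok"
        report[name] = (norm, status)
    return report

def dead_relu_fraction(activations):
    """Share of ReLU units that output zero for every example in the batch."""
    return float(np.mean(np.all(activations <= 0.0, axis=0)))

example_grads = {
    "layer1.W": np.array([0.1, -0.2]),
    "layer2.W": np.zeros(3),
    "layer3.W": np.array([1e4, 0.0]),
    "layer4.W": np.array([np.nan, 1.0]),
}
for name, (norm, status) in summarize_gradients(example_grads).items():
    print(f"{name}: norm={norm:.3e} status={status}")

batch_activations = np.array([[0.0, 1.0, 0.0],
                              [0.0, 2.0, 0.0]])
print(dead_relu_fraction(batch_activations))
```

Logged every few hundred steps, a report like this helps separate an optimization problem (norms collapsing or blowing up) from a data or architecture problem (healthy gradients, poor metrics).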

Understanding Language Models Without Treating Them as Magic

Language models can feel like a cliff after traditional machine learning. The math looks familiar, but the behavior feels alien. The key is to ground LLMs in concepts you already know. They are neural networks trained by maximum likelihood over token sequences: given the tokens so far, assign high probability to the token that actually comes next. Nothing supernatural is happening, even if the outputs feel uncanny.
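
The objective is the same cross-entropy loss used for logistic regression, applied once per position. Here is a minimal sketch, assuming the model has already produced a matrix of logits where row `t` scores the token at position `t + 1`; the function names are illustrative.

```python
import numpy as np

def log_softmax(logits):
    """Numerically stable log-probabilities over the vocabulary axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))

def next_token_nll(logits, token_ids):
    """Average negative log-likelihood of each token given the ones before it.

    logits: (seq_len, vocab_size); row t is the prediction for token t + 1.
    token_ids: the observed sequence of length seq_len.
    """
    token_ids = np.asarray(token_ids)
    log_probs = log_softmax(logits[:-1])    # the last row has no target to score
    targets = token_ids[1:]
    picked = log_probs[np.arange(len(targets)), targets]
    return float(-picked.mean())

def perplexity(nll):
    return float(np.exp(nll))

# A model with no preference among 10 tokens is as uncertain as a fair 10-sided die.
uniform_logits = np.zeros((5, 10))
nll = next_token_nll(uniform_logits, [1, 2, 3, 4, 5])
print(nll, perplexity(nll))
```

Maximizing likelihood means driving this number down. Perplexity is the same quantity exponentiated, which is why it reads as “the effective number of choices the model is torn between”.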

Focus first on embeddings and attention. Embeddings translate discrete symbols into continuous spaces where similarity becomes geometric. Attention lets the model weigh which earlier parts of a sequence matter when predicting the next token. Once these ideas click, transformers stop feeling like black boxes and start looking like very large, very regular neural networks.
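
Both ideas fit in a short NumPy sketch. It assumes a single attention head with a causal mask and takes the query, key, and value matrices as given; in a real transformer they come from learned linear projections of the token embeddings, which are omitted here.

```python
import numpy as np

def cosine_similarity(a, b):
    """Geometric similarity between two embedding vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)

def causal_self_attention(Q, K, V):
    """Scaled dot-product attention where position t only sees positions <= t."""
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)                        # (seq_len, seq_len)
    future = np.triu(np.ones_like(scores, dtype=bool), k=1)
    scores = np.where(future, -np.inf, scores)             # hide future tokens
    weights = softmax(scores)                              # each row sums to 1
    return weights @ V, weights

rng = np.random.default_rng(0)
Q = rng.normal(size=(4, 8))
K = rng.normal(size=(4, 8))
V = rng.normal(size=(4, 8))
out, weights = causal_self_attention(Q, K, V)

print(np.round(weights, 2))   # lower-triangular: no attention paid to the future
print(cosine_similarity(np.array([1.0, 0.0]), np.array([1.0, 0.0])))
```

Each output row is a weighted average of the value vectors the position is allowed to see. The first position can only see itself, so its output equals its own value vector.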

Fine-tuning and prompting should come later. Before adapting models, understand pretraining objectives, scaling laws, and failure modes like hallucination and bias. Treat language models as probabilistic systems with strengths and blind spots, not oracles. This mindset makes you far more effective when you eventually deploy them.

Building Projects That Actually Stretch You

Projects are the bridge between knowledge and capability, but only if they are chosen carefully. Reimplementing tutorials teaches familiarity, not fluency. The goal is to encounter problems where the solution is not already written out for you.

Good projects involve trade-offs. Training instability, limited data, computational constraints, or unclear evaluation metrics force you to make decisions. These decisions are where learning happens. A modest model you understand deeply beats a massive one you copied blindly.

Treat each project as an experiment. Document assumptions, failures, and surprising behaviors. Over time, this creates a personal knowledge base that no course can provide. When you can explain why a model failed and what you would try next, you are no longer just learning machine learning. You are practicing it.

Conclusion

The path beyond Andrew Ng’s course is not about abandoning fundamentals, but about extending them into systems that learn representations, scale with data, and behave probabilistically in the real world. Neural networks, architectures, and language models are not a separate discipline. They are the continuation of the same ideas, pushed to their limits. Progress comes from rebuilding intuition layer by layer, confronting messy data, and resisting the temptation to treat modern models as magic. Once you make that shift, the field stops feeling like a moving target and starts feeling like a landscape you can explore with confidence.