Introducing Coral NPU, a full-stack, open-source platform designed to address the core performance, fragmentation, and privacy challenges limiting powerful, always-on AI on low-power edge devices and wearables.

Coral NPU: A full-stack platform for Edge AI

Generative AI has fundamentally reshaped our expectations of technology. We’ve seen the power of large-scale cloud-based models to create, reason and assist in incredible ways. However, the next great technological leap isn’t just about making cloud models bigger; it’s about embedding their intelligence directly into our immediate, personal environment. For AI to be truly assistive — proactively helping us navigate our day, translating conversations in real-time, or understanding our physical context — it must run on the devices we wear and carry. This presents a core challenge: embedding ambient AI onto battery-constrained edge devices, freeing them from the cloud to enable truly private, all-day assistive experiences.

To move from the cloud to personal devices, we must solve three critical problems:

- The performance gap: Complex, state-of-the-art machine learning (ML) models demand compute that far exceeds the limited power, thermal, and memory budgets of an edge device.

- The fragmentation tax: Compiling and optimizing ML models for a diverse landscape of proprietary processors is difficult and costly, hindering consistent performance across devices.

- The user trust deficit: To be truly helpful, personal AI must prioritize the privacy and security of personal data and context.

Today we introduce Coral NPU, a full-stack platform that builds on our original work from Coral to provide hardware designers and ML developers with the tools needed to build the next generation of private, efficient edge AI devices. Co-designed in partnership with Google Research and Google DeepMind, Coral NPU is an AI-first hardware architecture built for ultra-low-power, always-on edge AI. It offers a unified developer experience, making it easier to deploy applications like ambient sensing. It’s specifically designed to enable all-day AI on wearable devices while minimizing battery usage, and it remains configurable for higher-performance use cases. We’ve released our documentation and tools so that developers and designers can start building today.

Coral NPU: An AI-first architecture

Developers building for low-power edge devices face a fundamental trade-off, choosing between general-purpose CPUs and specialized accelerators. General-purpose CPUs offer crucial flexibility and broad software support but lack the domain-specific architecture for demanding ML workloads, making them slower and less power-efficient at those tasks. Conversely, specialized accelerators provide high ML efficiency but are inflexible, difficult to program, and ill-suited for general tasks.

This hardware problem is magnified by a highly fragmented software ecosystem. With starkly different programming models for CPUs and ML blocks, developers are often forced to use proprietary compilers and complex command buffers. This creates a steep learning curve and makes it difficult to combine the unique strengths of different compute units. Consequently, the industry lacks a mature, low-power architecture that can easily and effectively support multiple ML development frameworks.

The Coral NPU architecture directly addresses this by reversing traditional chip design. It prioritizes the ML matrix engine over scalar compute, optimizing the architecture for AI from the silicon up and creating a platform purpose-built for efficient on-device inference.

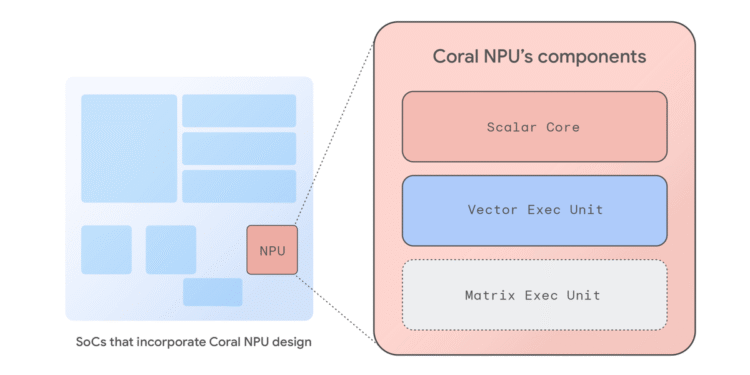

As a complete reference neural processing unit (NPU) architecture, Coral NPU provides the building blocks for the next generation of energy-efficient, ML-optimized systems on chip (SoCs). The architecture is built from a set of RISC-V ISA-compliant IP blocks and is designed for minimal power consumption, making it ideal for always-on ambient sensing. The base design delivers performance in the 512 giga operations per second (GOPS) range while consuming just a few milliwatts, enabling powerful on-device AI for edge devices, hearables, AR glasses, and smartwatches.
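To make the 512 GOPS figure more concrete, here is a back-of-envelope sketch that turns a peak throughput into a rough per-inference latency and energy budget. It rests on stated assumptions: the model's operation count, the fraction of peak actually achieved (utilization), and the power draw are all hypothetical inputs rather than published Coral NPU numbers, and memory-bandwidth stalls are ignored.

```python
# Back-of-envelope latency and energy budget for one inference.
# Assumptions (hypothetical inputs, not Coral NPU specifications):
#   - model_ops is the operation count per inference (a multiply-accumulate
#     is commonly counted as two operations),
#   - achieved throughput is a fixed fraction ("utilization") of peak,
#   - power draw is constant for the duration of the inference,
#   - memory-bandwidth stalls are ignored.

PEAK_OPS_PER_S = 512e9  # the ~512 GOPS figure quoted for the base design


def latency_ms(model_ops: float,
               peak_ops_per_s: float = PEAK_OPS_PER_S,
               utilization: float = 1.0) -> float:
    """Return the time for one inference in milliseconds."""
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    return model_ops / (peak_ops_per_s * utilization) * 1e3


def energy_uj(model_ops: float,
              power_mw: float,
              peak_ops_per_s: float = PEAK_OPS_PER_S,
              utilization: float = 1.0) -> float:
    """Return the energy for one inference in microjoules.

    1 mW sustained for 1 ms is 1e-3 W * 1e-3 s = 1e-6 J = 1 uJ.
    """
    return power_mw * latency_ms(model_ops, peak_ops_per_s, utilization)


if __name__ == "__main__":
    # Hypothetical model: 512 million operations, 50% utilization, 5 mW.
    print(f"{latency_ms(512e6, utilization=0.5):.2f} ms")
    print(f"{energy_uj(512e6, 5.0, utilization=0.5):.2f} uJ")
```

For a hypothetical model needing 512 million operations at 50% utilization and an assumed 5 mW draw, this gives about 2 ms and 10 µJ per inference. Real utilization depends heavily on how well a given model maps onto the matrix and vector units.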

The open and extensible architecture based on RISC-V gives SoC designers the flexibility to modify the base design or to use it as a pre-configured NPU. The Coral NPU architecture includes the following components:

- A scalar core: A lightweight, C-programmable RISC-V frontend that manages data flow to the back-end cores, using a simple “run-to-completion” model for ultra-low power consumption and traditional CPU functions.

- A vector execution unit: A robust single instruction multiple data (SIMD) co-processor compliant with the RISC-V Vector instruction set (RVV) v1.0, enabling simultaneous operations on large data sets.

- A matrix execution unit: A highly efficient quantized outer product multiply-accumulate (MAC) engine purpose-built to accelerate fundamental neural network operations. Note that the matrix core is still under development and will be released on GitHub later this year.
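The matrix execution unit is described as a quantized outer-product MAC engine. As an illustration of that general technique, and not of the unit's actual datapath, the following sketch computes an int8 matrix product by accumulating K rank-1 outer products into an int32 tile. The symmetric int8 format with zero-points of 0 and the int32 accumulator width are assumptions chosen for the example.

```python
# Quantized outer-product multiply-accumulate (MAC) matrix product.
# Assumptions (illustrative, not the Coral NPU matrix unit's datapath):
# symmetric int8 operands with zero-points of 0, int32 accumulators, and
# nested Python lists standing in for hardware operand and accumulator tiles.

from typing import List

Matrix = List[List[int]]

INT8_MIN, INT8_MAX = -128, 127
INT32_MIN, INT32_MAX = -(2**31), 2**31 - 1


def _check_int8(name: str, mat: Matrix) -> None:
    """Reject values that do not fit in a signed 8-bit integer."""
    for row in mat:
        for v in row:
            if not INT8_MIN <= v <= INT8_MAX:
                raise ValueError(f"{name} contains {v}, outside the int8 range")


def outer_product_matmul(a: Matrix, b: Matrix) -> Matrix:
    """Compute C = A @ B (M x K times K x N) as a sum of K outer products.

    Step k multiplies column k of A (M values) by row k of B (N values) and
    adds the resulting M x N rank-1 update into the accumulator tile.
    """
    _check_int8("a", a)
    _check_int8("b", b)
    m, k_dim = len(a), len(b)
    n = len(b[0]) if b else 0
    if any(len(row) != k_dim for row in a) or any(len(row) != n for row in b):
        raise ValueError("shapes must be M x K and K x N")

    acc = [[0] * n for _ in range(m)]  # int32 accumulator tile, zeroed
    for k in range(k_dim):
        col = [a[i][k] for i in range(m)]  # column k of A
        row = b[k]                         # row k of B
        for i in range(m):
            for j in range(n):
                acc[i][j] += col[i] * row[j]  # one MAC of the rank-1 update

    # Python ints never overflow, so verify the result fits an int32 register.
    for r in acc:
        for v in r:
            if not INT32_MIN <= v <= INT32_MAX:
                raise OverflowError(f"accumulator value {v} exceeds int32")
    return acc
```

For example, `outer_product_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])` returns `[[19, 22], [43, 50]]`. In general, each outer-product step reads M + N operands but performs M × N multiply-accumulates, so operand reuse grows with the tile size; this is one common reason the outer-product formulation suits compact, power-efficient MAC arrays.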

Unified developer experience

The Coral NPU architecture is a simple, C-programmable target that can seamlessly integrate with modern compilers like IREE and TFLM (TensorFlow Lite for Microcontrollers). This enables easy support for ML frameworks like TensorFlow, JAX, and PyTorch.

Coral NPU incorporates a comprehensive software toolchain, including specialized solutions like the TFLM compiler for TensorFlow, alongside a general-purpose MLIR compiler, a C compiler, custom kernels, and a simulator. This gives developers several pathways from model to device. For example, a model from a framework like JAX is first imported into MLIR using the StableHLO dialect. This intermediate representation is then fed into the IREE compiler, which applies a hardware-specific plug-in that targets the Coral NPU’s architecture. From there, the compiler performs progressive lowering, a critical optimization step in which the code is systematically translated through a series of dialects, each closer to the machine’s native instruction set. After optimization, the toolchain generates a compact binary ready for efficient execution on the edge device.

This suite of industry-standard developer tools helps simplify the programming of ML models and can provide a consistent experience across various hardware targets.
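Progressive lowering can be hard to picture from prose alone. The toy pipeline below rewrites a fused dense-plus-ReLU op first into separate tensor ops and then into scalar multiply-accumulate and max instructions, and a single interpreter checks that every level computes the same result. The op names and the three "levels" are invented for illustration; they are not real StableHLO, MLIR, or IREE dialects, and the real compiler's passes are far more involved.

```python
# Toy model of progressive lowering. The three levels and their op names are
# invented for this sketch; they are NOT real StableHLO, MLIR, or IREE
# dialects or passes.
#   high: ("dense_relu", x, w, y)                    fused framework-level op
#   mid:  ("matmul", a, b, c), ("relu", src, dst)    separate tensor ops
#   low:  ("zeros", ...), ("mac", ...), ("copy", ...), ("max0", ...)
#         scalar-level instructions
# Each pass rewrites a program one level down; one interpreter understands
# every level, so we can check that lowering preserves the result.

from typing import Dict, List, Tuple

Matrix = List[List[float]]
Op = Tuple  # (opcode, *operands)


def lower_high_to_mid(prog: List[Op]) -> List[Op]:
    """Split each fused dense_relu op into a matmul followed by a relu."""
    out: List[Op] = []
    for op in prog:
        if op[0] == "dense_relu":
            _, x, w, y = op
            tmp = y + ".pre"  # hypothetical name for the pre-activation tensor
            out += [("matmul", x, w, tmp), ("relu", tmp, y)]
        else:
            out.append(op)
    return out


def lower_mid_to_low(prog: List[Op],
                     shapes: Dict[str, Tuple[int, int]]) -> List[Op]:
    """Expand tensor ops into scalar instructions, using known input shapes."""
    shapes = dict(shapes)  # don't mutate the caller's dict
    out: List[Op] = []
    for op in prog:
        if op[0] == "matmul":
            _, a, b, c = op
            (m, k_dim), n = shapes[a], shapes[b][1]
            shapes[c] = (m, n)
            out.append(("zeros", c, m, n))
            out += [("mac", c, a, b, i, j, k)
                    for i in range(m) for j in range(n) for k in range(k_dim)]
        elif op[0] == "relu":
            _, src, dst = op
            m, n = shapes[src]
            shapes[dst] = (m, n)
            out.append(("copy", src, dst))
            out += [("max0", dst, i, j) for i in range(m) for j in range(n)]
        else:
            out.append(op)
    return out


def _matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def _relu(a: Matrix) -> Matrix:
    return [[max(0.0, v) for v in row] for row in a]


def run(prog: List[Op], env: Dict[str, Matrix]) -> Dict[str, Matrix]:
    """Interpret a program at any level; returns a new environment."""
    env = {name: [row[:] for row in mat] for name, mat in env.items()}
    for op in prog:
        code, args = op[0], op[1:]
        if code == "dense_relu":
            x, w, y = args
            env[y] = _relu(_matmul(env[x], env[w]))
        elif code == "matmul":
            a, b, c = args
            env[c] = _matmul(env[a], env[b])
        elif code == "relu":
            src, dst = args
            env[dst] = _relu(env[src])
        elif code == "zeros":
            c, m, n = args
            env[c] = [[0.0] * n for _ in range(m)]
        elif code == "mac":
            c, a, b, i, j, k = args
            env[c][i][j] += env[a][i][k] * env[b][k][j]
        elif code == "copy":
            src, dst = args
            env[dst] = [row[:] for row in env[src]]
        elif code == "max0":
            dst, i, j = args
            env[dst][i][j] = max(0.0, env[dst][i][j])
        else:
            raise ValueError(f"unknown op {code!r}")
    return env
```

For instance, with `x = [[1.0, -2.0]]` and `w = [[3.0, 1.0], [1.0, 2.0]]`, the high-level program `[("dense_relu", "x", "w", "y")]`, its mid-level lowering, and its low-level lowering (given input shapes `{"x": (1, 2), "w": (2, 2)}`) all produce `y == [[1.0, 0.0]]`. Each step trades a compact, high-level description for one that is closer to what the hardware executes, which is the idea the toolchain description refers to.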

Coral NPU’s co-design process focuses on two key areas. First, the architecture efficiently accelerates the leading encoder-based architectures used in today’s on-device vision and audio applications. Second, we are collaborating closely with the Gemma team to optimize Coral NPU for small transformer models, helping to ensure the accelerator architecture supports the next generation of generative AI at the edge.

This dual focus means Coral NPU is on track to be the first open, standards-based, low-power NPU designed to bring LLMs to wearables. For developers, this provides a single, validated path to deploy both current and future models with maximum performance at minimal power.

Target applications

Coral NPU is designed to enable ultra-low-power, always-on edge AI applications, particularly focused on ambient sensing systems. Its primary goal is to enable all-day AI experiences on wearables, mobile phones, and Internet of Things (IoT) devices while minimizing battery usage.

Potential use cases include:

- Contextual awareness: Detecting user activity (e.g., walking, running), proximity, or environment (e.g., indoors/outdoors, on-the-go) to enable “do-not-disturb” modes or other context-aware features.

- Audio processing: Voice and speech detection, keyword spotting, live translation, transcription, and audio-based accessibility features.

- Image processing: Person and object detection, facial recognition, gesture recognition, and low-power visual search.

- User interaction: Enabling control via hand gestures, audio cues, or other sensor-driven inputs.

Hardware-enforced privacy

A core principle of Coral NPU is building user trust through hardware-enforced security. Our architecture is being designed to support emerging technologies like CHERI (Capability Hardware Enhanced RISC Instructions), which provides fine-grained memory-level safety and scalable software compartmentalization. With this approach, we hope to enable sensitive AI models and personal data to be isolated in a hardware-enforced sandbox, mitigating memory-based attacks.
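The fine-grained memory safety that CHERI targets can be illustrated with a conceptual model: a capability is a pointer that carries its own bounds and permissions, every access is checked against them, and a derived capability can only narrow its parent's rights, never widen them. The sketch models just those properties in Python; the class and method names are hypothetical, and it does not represent the CHERI ISA, its tag bits, or any Coral NPU interface.

```python
# Conceptual model of a CHERI-style capability: a pointer that carries its own
# bounds and permissions, checked on every access. Illustration only; it does
# not model the CHERI ISA, tag bits, or any Coral NPU interface, and all names
# here are hypothetical.

from dataclasses import dataclass
from typing import FrozenSet


class CapabilityFault(Exception):
    """Raised where hardware would trap on a capability violation."""


@dataclass(frozen=True)
class Capability:
    base: int
    length: int
    perms: FrozenSet[str]  # e.g. frozenset({"load", "store"})

    def check(self, addr: int, size: int, perm: str) -> None:
        """Fault unless [addr, addr + size) is in bounds and perm is held."""
        if perm not in self.perms:
            raise CapabilityFault(f"missing {perm!r} permission")
        if addr < self.base or addr + size > self.base + self.length:
            raise CapabilityFault(f"access [{addr}, {addr + size}) out of bounds")

    def restrict(self, base: int, length: int,
                 perms: FrozenSet[str]) -> "Capability":
        """Derive a narrower capability; rights can only shrink."""
        if base < self.base or base + length > self.base + self.length:
            raise CapabilityFault("derived bounds exceed parent bounds")
        if not perms <= self.perms:
            raise CapabilityFault("derived permissions exceed parent permissions")
        return Capability(base, length, perms)


class Memory:
    """Byte-addressable memory whose every access requires a capability."""

    def __init__(self, size: int) -> None:
        self.data = bytearray(size)

    def load(self, cap: Capability, addr: int) -> int:
        cap.check(addr, 1, "load")
        return self.data[addr]

    def store(self, cap: Capability, addr: int, value: int) -> None:
        cap.check(addr, 1, "store")
        self.data[addr] = value
```

A compartment holding model weights could, for example, receive `root.restrict(16, 16, frozenset({"load"}))`, a read-only capability covering only its 16-byte buffer. Any store through it, or any load outside bytes 16 to 31, raises `CapabilityFault` instead of silently corrupting or leaking neighboring data.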

Building an ecosystem

Open hardware projects rely on strong partnerships to succeed. To that end, we’re collaborating with Synaptics, our first strategic silicon partner and a leader in embedded compute, wireless connectivity, and multimodal sensing for the IoT. Today, at their Tech Day, Synaptics announced their new Astra™ SL2610 line of AI-Native IoT Processors. This product line features their Torq™ NPU subsystem, the industry’s first production implementation of the Coral NPU architecture. The NPU’s design is transformer-capable and supports dynamic operators, enabling developers to build future-ready Edge AI systems for consumer and industrial IoT.

This partnership supports our commitment to a unified developer experience. The Synaptics Torq™ Edge AI platform is built on an open-source compiler and runtime based on IREE and MLIR. This collaboration is a significant step toward building a shared, open standard for intelligent, context-aware devices.

Solving core crises of the Edge

With Coral NPU, we are building a foundational layer for the future of personal AI. Our goal is to foster a vibrant ecosystem by providing a common, open-source, and secure platform for the industry to build upon. This empowers developers and silicon vendors to move beyond today’s fragmented landscape and collaborate on a shared standard for edge computing, enabling faster innovation. Learn more about Coral NPU and start building today.

Acknowledgements

We would like to thank the core contributors and leadership team for this work, particularly Billy Rutledge, Ben Laurie, Derek Chow, Michael Hoang, Naveen Dodda, Murali Vijayaraghavan, Gregory Kielian, Matthew Wilson, Bill Luan, Divya Pandya, Preeti Singh, Akib Uddin, Stefan Hall, Alex Van Damme, David Gao, Lun Dong, Julian Mullings-Black, Roman Lewkow, Shaked Flur, Yenkai Wang, Reid Tatge, Tim Harvey, Tor Jeremiassen, Isha Mishra, Kai Yick, Cindy Liu, Bangfei Pan, Ian Field, Srikanth Muroor, Jay Yagnik, Avinatan Hassidim, and Yossi Matias.