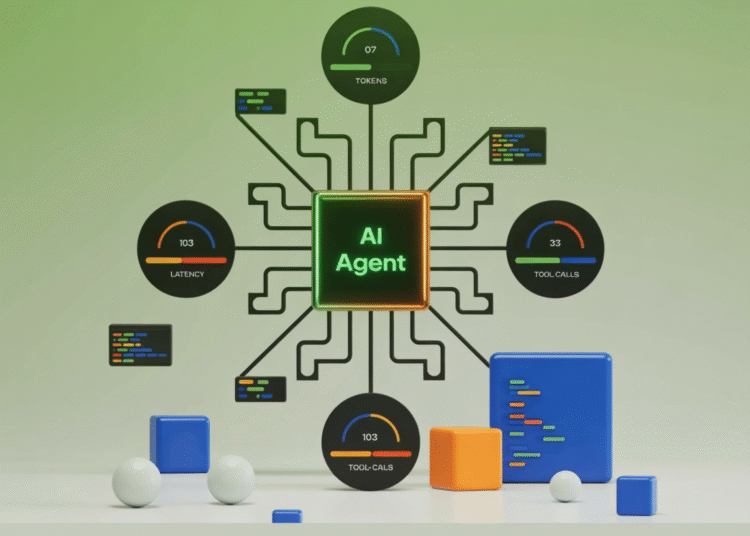

In this tutorial, we build a cost-aware planning agent that deliberately balances output quality against real-world constraints such as token usage, latency, and tool-call budgets. We design the agent to generate multiple candidate actions, estimate their expected costs and benefits, and then select an execution plan that maximizes value while staying within strict budgets. With this, we demonstrate how agentic systems can move beyond “always use the LLM” behavior and instead reason explicitly about trade-offs, efficiency, and resource awareness, which is critical for deploying agents reliably in constrained environments. Check out the FULL CODES here.

```python
import os, time, math, json, random
from dataclasses import dataclass, field
from typing import List, Dict, Optional, Tuple, Any
from getpass import getpass

USE_OPENAI = True
client = None  # stays None in offline mode; llm_text checks USE_OPENAI before using it

if USE_OPENAI:
    # Prompt for the key at runtime instead of hardcoding it in the notebook.
    if not os.getenv("OPENAI_API_KEY"):
        os.environ["OPENAI_API_KEY"] = getpass("Enter OPENAI_API_KEY (hidden): ").strip()
    try:
        from openai import OpenAI
        client = OpenAI()
    except Exception as e:
        # Any SDK import or client-construction failure switches the agent to local-only execution.
        print("OpenAI SDK import failed. Falling back to offline mode.\nError:", e)
        USE_OPENAI = False
```

We set up the execution environment and load the OpenAI API key at runtime instead of hardcoding it. We then initialize the client; if the SDK cannot be imported or the client cannot be created, the agent falls back to offline mode and uses only local steps.

```python
def approx_tokens(text: str) -> int:
    # Rough heuristic: about 4 characters per token for English text.
    return max(1, math.ceil(len(text) / 4))

@dataclass
class Budget:
    max_tokens: int
    max_latency_ms: int
    max_tool_calls: int

@dataclass
class Spend:
    tokens: int = 0
    latency_ms: int = 0
    tool_calls: int = 0

    def within(self, b: Budget) -> bool:
        # A spend is feasible only if every dimension stays at or under its limit.
        return (self.tokens <= b.max_tokens and
                self.latency_ms <= b.max_latency_ms and
                self.tool_calls <= b.max_tool_calls)

    def add(self, other: "Spend") -> "Spend":
        # Return a new Spend instead of mutating, so plan candidates can share prefixes safely.
        return Spend(
            tokens=self.tokens + other.tokens,
            latency_ms=self.latency_ms + other.latency_ms,
            tool_calls=self.tool_calls + other.tool_calls,
        )
```

We define the core budgeting abstractions that let the agent reason explicitly about cost. Token usage, latency, and tool calls are modeled as first-class quantities, with utility methods to accumulate spend and check it against a budget. This gives us a clean foundation for enforcing constraints throughout planning and execution.
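As a worked example of the accumulate-and-check logic, the snippet below re-declares minimal copies of `Budget` and `Spend` so it runs standalone, then adds up the estimates of three of the tutorial's step options against the same budget used later (2,200 tokens, 3,500 ms, 2 tool calls). The `remaining` helper is a hypothetical addition for illustration, not part of the original code.

```python
import math
from dataclasses import dataclass
from functools import reduce

def approx_tokens(text: str) -> int:
    # Same ~4-characters-per-token heuristic as the tutorial.
    return max(1, math.ceil(len(text) / 4))

@dataclass
class Budget:
    max_tokens: int
    max_latency_ms: int
    max_tool_calls: int

@dataclass
class Spend:
    tokens: int = 0
    latency_ms: int = 0
    tool_calls: int = 0

    def add(self, other: "Spend") -> "Spend":
        return Spend(self.tokens + other.tokens,
                     self.latency_ms + other.latency_ms,
                     self.tool_calls + other.tool_calls)

    def within(self, b: Budget) -> bool:
        return (self.tokens <= b.max_tokens and
                self.latency_ms <= b.max_latency_ms and
                self.tool_calls <= b.max_tool_calls)

def remaining(spend: Spend, b: Budget) -> Spend:
    # Hypothetical helper: headroom left in each dimension (negative means over budget).
    return Spend(b.max_tokens - spend.tokens,
                 b.max_latency_ms - spend.latency_ms,
                 b.max_tool_calls - spend.tool_calls)

budget = Budget(max_tokens=2200, max_latency_ms=3500, max_tool_calls=2)
outline_llm = Spend(tokens=600, latency_ms=1200, tool_calls=1)
risks_llm = Spend(tokens=700, latency_ms=1400, tool_calls=1)
timeline_local = Spend(tokens=150, latency_ms=60, tool_calls=0)
polish_llm = Spend(tokens=900, latency_ms=1600, tool_calls=1)

# Fold the step estimates into one total, starting from an empty Spend.
plan = reduce(Spend.add, [outline_llm, risks_llm, timeline_local], Spend())
print(plan, plan.within(budget))   # Spend(tokens=1450, latency_ms=2660, tool_calls=2) True
print(remaining(plan, budget))     # Spend(tokens=750, latency_ms=840, tool_calls=0)

too_much = plan.add(polish_llm)    # 2350 tokens, 4260 ms, 3 calls
print(too_much.within(budget))     # False
print(approx_tokens("x" * 10))     # ceil(10 / 4) = 3
```

Note that the tool-call limit is already exhausted after two LLM steps, so any third LLM step is rejected regardless of its token or latency cost.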

```python
@dataclass
class StepOption:
    name: str
    description: str
    est_spend: Spend      # estimated cost of running this step
    est_value: float      # estimated contribution to output quality
    executor: str         # "llm" or "local"
    payload: Dict[str, Any] = field(default_factory=dict)

@dataclass
class PlanCandidate:
    steps: List[StepOption]
    spend: Spend
    value: float
    rationale: str = ""

def llm_text(prompt: str, *, model: str = "gpt-5", effort: str = "low") -> str:
    # Offline mode: callers treat an empty string as "no LLM output".
    if not USE_OPENAI:
        return ""
    t0 = time.time()
    try:
        resp = client.responses.create(
            model=model,
            reasoning={"effort": effort},
            input=prompt,
        )
    except Exception as e:
        # Network, auth, or model errors should degrade gracefully instead of crashing the agent.
        print("LLM call failed:", e)
        return ""
    _elapsed_s = time.time() - t0  # measured but unused here; execute_plan times each step itself
    return resp.output_text or ""
```

We introduce the data structures that represent individual action choices and full plan candidates. We also define a lightweight LLM wrapper that routes every generation call through one function and returns an empty string when the model is unavailable. This separation lets the planner reason about actions abstractly without being tightly coupled to execution details.
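One way to see why this separation helps: the planner only ever reads `executor` as a string, so execution can be dispatched through a lookup table. The sketch below is a hypothetical registry (`EXECUTORS`, `dispatch`, and the stub functions are illustrative names, not part of the tutorial); the stub LLM executor stands in for `llm_text` so the example runs offline.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict

@dataclass
class StepOption:
    # Trimmed copy of the tutorial's StepOption: only the fields dispatch needs.
    name: str
    executor: str
    payload: Dict[str, Any] = field(default_factory=dict)

Executor = Callable[[str, StepOption], str]

def local_stub(task: str, step: StepOption) -> str:
    # Stand-in for a template-based local step.
    return f"[local] {step.name}"

def llm_stub(task: str, step: StepOption) -> str:
    # Stand-in for an LLM call; a real version would build a prompt and call llm_text(...).
    kind = step.payload.get("prompt_kind", "generic")
    return f"[llm:{kind}] {step.name}"

# Hypothetical registry: a new executor type means one new entry here, with no planner change.
EXECUTORS: Dict[str, Executor] = {"local": local_stub, "llm": llm_stub}

def dispatch(task: str, step: StepOption, llm_available: bool = True) -> str:
    key = step.executor
    if key == "llm" and not llm_available:
        key = "local"  # same offline fallback rule the tutorial applies in execute_plan
    # Unknown executor names also fall back to the local stub.
    return EXECUTORS.get(key, local_stub)(task, step)

outline = StepOption("Outline plan (LLM)", "llm", {"prompt_kind": "outline"})
print(dispatch("demo task", outline))                       # [llm:outline] Outline plan (LLM)
print(dispatch("demo task", outline, llm_available=False))  # [local] Outline plan (LLM)
```

The tutorial itself uses an `if`/`else` on `step.executor` inside `execute_plan`, which is simpler for two executor types; a registry mainly pays off once more executors are added.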

```python
def generate_step_options(task: str) -> List[StepOption]:
    # Each deliverable comes in two variants: an LLM version (higher value, higher cost)
    # and a local template version (lower value, near-zero cost).
    base = [
        StepOption(
            name="Clarify deliverables (local)",
            description="Extract deliverable checklist + acceptance criteria from the task.",
            est_spend=Spend(tokens=60, latency_ms=20, tool_calls=0),
            est_value=6.0,
            executor="local",
        ),
        StepOption(
            name="Outline plan (LLM)",
            description="Create a structured outline with sections, constraints, and assumptions.",
            est_spend=Spend(tokens=600, latency_ms=1200, tool_calls=1),
            est_value=10.0,
            executor="llm",
            payload={"prompt_kind": "outline"},
        ),
        StepOption(
            name="Outline plan (local)",
            description="Create a rough outline using templates (no LLM).",
            est_spend=Spend(tokens=120, latency_ms=40, tool_calls=0),
            est_value=5.5,
            executor="local",
        ),
        StepOption(
            name="Risk register (LLM)",
            description="Generate risks, mitigations, owners, and severity.",
            est_spend=Spend(tokens=700, latency_ms=1400, tool_calls=1),
            est_value=9.0,
            executor="llm",
            payload={"prompt_kind": "risks"},
        ),
        StepOption(
            name="Risk register (local)",
            description="Generate a standard risk register from a reusable template.",
            est_spend=Spend(tokens=160, latency_ms=60, tool_calls=0),
            est_value=5.0,
            executor="local",
        ),
        StepOption(
            name="Timeline (LLM)",
            description="Draft a realistic milestone timeline with dependencies.",
            est_spend=Spend(tokens=650, latency_ms=1300, tool_calls=1),
            est_value=8.5,
            executor="llm",
            payload={"prompt_kind": "timeline"},
        ),
        StepOption(
            name="Timeline (local)",
            description="Draft a simple timeline from a generic milestone template.",
            est_spend=Spend(tokens=150, latency_ms=60, tool_calls=0),
            est_value=4.8,
            executor="local",
        ),
        StepOption(
            name="Quality pass (LLM)",
            description="Rewrite for clarity, consistency, and formatting.",
            est_spend=Spend(tokens=900, latency_ms=1600, tool_calls=1),
            est_value=8.0,
            executor="llm",
            payload={"prompt_kind": "polish"},
        ),
        StepOption(
            name="Quality pass (local)",
            description="Light formatting + consistency checks without LLM.",
            est_spend=Spend(tokens=120, latency_ms=50, tool_calls=0),
            est_value=3.5,
            executor="local",
        ),
    ]

    if USE_OPENAI:
        # Ask the model for optional extra steps (this meta-call is not charged to the budget).
        meta_prompt = f"""
You are a planning assistant. For the task below, propose 3-5 OPTIONAL extra steps that improve quality,
like checks, validations, or stakeholder tailoring. Keep each step short.
TASK:
{task}
Return JSON list with fields: name, description, est_value(1-10).
"""
        txt = llm_text(meta_prompt, model="gpt-5", effort="low")
        try:
            items = json.loads(txt.strip())
            for it in items[:5]:
                # Extras run locally, so each gets a fixed, low cost estimate;
                # est_value is clamped to the 1-10 scale the prompt asks for.
                base.append(
                    StepOption(
                        name=str(it.get("name", "Extra step (local)"))[:60],
                        description=str(it.get("description", ""))[:200],
                        est_spend=Spend(tokens=120, latency_ms=60, tool_calls=0),
                        est_value=min(10.0, max(1.0, float(it.get("est_value", 5.0)))),
                        executor="local",
                    )
                )
        except Exception:
            # Non-JSON or malformed output: keep only the built-in options.
            pass

    return base
```

We generate a diverse set of candidate steps, pairing LLM-based and local alternatives with different cost-quality trade-offs. When the API is available, we also ask the model to suggest optional extra steps; each suggestion is executed locally and assigned a fixed, low cost estimate, so it enriches the action space while keeping its budget impact bounded.
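Because `json.loads` accepts only bare JSON, a model that wraps its answer in a Markdown code fence or adds a sentence before it would silently yield no extra steps. A more forgiving parser is sketched below; `parse_extra_steps` is a hypothetical helper, and clamping `est_value` to the 1-10 range the prompt requests is our assumption about how out-of-range scores should be handled.

```python
import json
import re
from typing import Any, Dict, List

FENCE = "`" * 3  # triple-backtick marker, built as a string to keep the snippet readable

def parse_extra_steps(txt: str, limit: int = 5) -> List[Dict[str, Any]]:
    """Extract a JSON list of {name, description, est_value} items from LLM text."""
    # Strip a surrounding code fence (optionally tagged "json") if present.
    fenced = re.search(FENCE + r"(?:json)?\s*(.*?)" + FENCE, txt, re.DOTALL)
    body = fenced.group(1) if fenced else txt
    # Keep only the outermost [...] span in case there is extra prose around it.
    start, end = body.find("["), body.rfind("]")
    if start == -1 or end <= start:
        return []
    try:
        items = json.loads(body[start:end + 1])
    except json.JSONDecodeError:
        return []
    steps: List[Dict[str, Any]] = []
    for it in (items if isinstance(items, list) else []):
        if not isinstance(it, dict) or not it.get("name"):
            continue  # skip malformed entries instead of discarding the whole batch
        try:
            value = float(it.get("est_value", 5.0))
        except (TypeError, ValueError):
            value = 5.0
        steps.append({
            "name": str(it["name"])[:60],
            "description": str(it.get("description", ""))[:200],
            "est_value": min(10.0, max(1.0, value)),  # clamp to the requested 1-10 scale
        })
    return steps[:limit]

raw = ("Here you go:\n" + FENCE + 'json\n[{"name": "Stakeholder check", '
       '"description": "Confirm owners", "est_value": 14}, {"description": "no name"}]\n' + FENCE)
print(parse_extra_steps(raw))
# [{'name': 'Stakeholder check', 'description': 'Confirm owners', 'est_value': 10.0}]
```

Each returned dictionary maps directly onto the `name`, `description`, and `est_value` fields the tutorial passes to `StepOption`.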

```python
def plan_under_budget(
    options: List[StepOption],
    budget: Budget,
    *,
    max_steps: int = 6,
    beam_width: int = 12,
    diversity_penalty: float = 0.2,
) -> PlanCandidate:
    def redundancy_cost(chosen: List[StepOption], new: StepOption) -> float:
        # "Outline plan (LLM)" and "Outline plan (local)" share the key "outline plan";
        # each already-chosen step with the same key adds one penalty.
        key_new = new.name.split("(")[0].strip().lower()
        overlap = 0
        for s in chosen:
            key_s = s.name.split("(")[0].strip().lower()
            if key_s == key_new:
                overlap += 1
        return overlap * diversity_penalty

    empty = PlanCandidate(steps=[], spend=Spend(), value=0.0, rationale="")
    beams: List[PlanCandidate] = [empty]
    best = empty  # best plan seen at any depth, not just in the final beam

    for _ in range(max_steps):
        expanded: List[PlanCandidate] = []
        for cand in beams:
            for opt in options:
                if opt in cand.steps:
                    continue
                new_spend = cand.spend.add(opt.est_spend)
                # Hard constraint: prune any extension that would exceed the budget.
                if not new_spend.within(budget):
                    continue
                new_value = cand.value + opt.est_value - redundancy_cost(cand.steps, opt)
                expanded.append(
                    PlanCandidate(
                        steps=cand.steps + [opt],
                        spend=new_spend,
                        value=new_value,
                        rationale=cand.rationale,
                    )
                )
        if not expanded:
            break
        expanded.sort(key=lambda c: c.value, reverse=True)
        # Different orderings of the same step set score the same, so keep one of each
        # (keyed by option identity) to stop duplicates from crowding the beam.
        unique: List[PlanCandidate] = []
        seen = set()
        for c in expanded:
            sig = frozenset(map(id, c.steps))
            if sig not in seen:
                seen.add(sig)
                unique.append(c)
        beams = unique[:beam_width]
        if beams[0].value > best.value:
            best = beams[0]

    return best
```

We implement the budget-constrained planner, which searches for the highest-value combination of steps under hard limits. It uses a beam search with a redundancy penalty that discourages overlapping actions, such as choosing both the LLM and the local version of the same step. This is where the agent becomes cost-aware: it maximizes estimated value subject to the budget constraints.
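Beam search is a heuristic: with a narrow beam it can discard a partial plan that would have led to the best complete plan. With only the nine built-in options, exhaustive search over every subset of up to six steps (465 subsets) is cheap enough to act as a reference answer. The sketch below is a hypothetical checker (`exhaustive_best` is not in the tutorial) that works on simplified `(name, tokens, latency_ms, tool_calls, est_value)` tuples copied from the tutorial's estimates and applies the same 0.2 redundancy penalty.

```python
from itertools import combinations
from typing import Dict, List, Tuple

Option = Tuple[str, int, int, int, float]  # (name, tokens, latency_ms, tool_calls, est_value)

OPTIONS: List[Option] = [
    ("Clarify deliverables (local)", 60, 20, 0, 6.0),
    ("Outline plan (LLM)", 600, 1200, 1, 10.0),
    ("Outline plan (local)", 120, 40, 0, 5.5),
    ("Risk register (LLM)", 700, 1400, 1, 9.0),
    ("Risk register (local)", 160, 60, 0, 5.0),
    ("Timeline (LLM)", 650, 1300, 1, 8.5),
    ("Timeline (local)", 150, 60, 0, 4.8),
    ("Quality pass (LLM)", 900, 1600, 1, 8.0),
    ("Quality pass (local)", 120, 50, 0, 3.5),
]

def key(name: str) -> str:
    # Same grouping rule as redundancy_cost: the text before "(" identifies the step type.
    return name.split("(")[0].strip().lower()

def subset_value(subset: Tuple[Option, ...], penalty: float) -> float:
    # Each step pays `penalty` per earlier step of the same type, so a type chosen
    # n times contributes n * (n - 1) / 2 penalties regardless of order.
    counts: Dict[str, int] = {}
    for name, *_ in subset:
        counts[key(name)] = counts.get(key(name), 0) + 1
    pairs = sum(n * (n - 1) // 2 for n in counts.values())
    return sum(o[4] for o in subset) - pairs * penalty

def exhaustive_best(options: List[Option], max_tokens: int, max_latency_ms: int,
                    max_tool_calls: int, max_steps: int = 6, penalty: float = 0.2):
    best: Tuple[Option, ...] = ()
    best_value = 0.0
    for k in range(1, max_steps + 1):
        for subset in combinations(options, k):
            feasible = (sum(o[1] for o in subset) <= max_tokens and
                        sum(o[2] for o in subset) <= max_latency_ms and
                        sum(o[3] for o in subset) <= max_tool_calls)
            if feasible:
                v = subset_value(subset, penalty)
                if v > best_value:
                    best, best_value = subset, v
    return best, best_value

best, value = exhaustive_best(OPTIONS, 2200, 3500, 2)
print(round(value, 2), [o[0] for o in best])
```

Under the tutorial's budget, the reference optimum combines the local deliverables, outline, risk, and timeline steps with the LLM outline and LLM risk register, for a score of 39.9. Comparing the `value` returned by `plan_under_budget` with this number is a quick way to check whether `beam_width` is large enough; exhaustive search grows exponentially, so it is only practical for small option lists.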

```python
def run_local_step(task: str, step: StepOption, working: Dict[str, Any]) -> str:
    # Cheap, deterministic template executors; no API calls are made here.
    name = step.name.lower()
    if "clarify deliverables" in name:
        return (
            "Deliverables checklist:\n"
            "- Executive summary\n- Scope & assumptions\n- Workplan + milestones\n"
            "- Risk register (risk, impact, likelihood, mitigation, owner)\n"
            "- Next steps + data needed\n"
        )
    if "outline plan" in name:
        return (
            "Outline:\n1) Context & objective\n2) Scope\n3) Approach\n4) Timeline\n5) Risks\n6) Next steps\n"
        )
    if "risk register" in name:
        return (
            "Risk register (template):\n"
            "1) Data access delays | High | Mitigation: agree data list + owners\n"
            "2) Stakeholder alignment | Med | Mitigation: weekly review\n"
            "3) Tooling constraints | Med | Mitigation: phased rollout\n"
        )
    if "timeline" in name:
        return (
            "Timeline (template):\n"
            "Week 1: discovery + requirements\nWeek 2: prototype + feedback\n"
            "Week 3: pilot + metrics\nWeek 4: rollout + handover\n"
        )
    if "quality pass" in name:
        draft = working.get("draft", "")
        return "Light quality pass done (headings normalized, bullets aligned).\n" + draft
    # Fallback for LLM-suggested extra steps, which have no dedicated template.
    return f"Completed: {step.name}\n"

def run_llm_step(task: str, step: StepOption, working: Dict[str, Any]) -> str:
    kind = step.payload.get("prompt_kind", "generic")
    context = working.get("draft", "")
    prompts = {
        "outline": f"Create a crisp, structured outline for the task below.\nTASK:\n{task}\nReturn a numbered outline.",
        "risks": f"Create a risk register for the task below. Include: Risk | Impact | Likelihood | Mitigation | Owner.\nTASK:\n{task}",
        "timeline": f"Create a realistic milestone timeline with dependencies for the task below.\nTASK:\n{task}",
        "polish": f"Rewrite and polish the following draft for clarity and consistency.\nDRAFT:\n{context}",
        "generic": f"Help with this step: {step.description}\nTASK:\n{task}\nCURRENT:\n{context}",
    }
    return llm_text(prompts.get(kind, prompts["generic"]), model="gpt-5", effort="low")

def execute_plan(task: str, plan: PlanCandidate) -> Tuple[str, Spend]:
    working = {"draft": ""}
    actual = Spend()
    for i, step in enumerate(plan.steps, 1):
        t0 = time.time()
        if step.executor == "llm" and USE_OPENAI:
            out = run_llm_step(task, step, working)
            tool_calls = 1
        else:
            # Local steps, and LLM steps in offline mode, use the template executor.
            out = run_local_step(task, step, working)
            tool_calls = 0
        dt_ms = int((time.time() - t0) * 1000)
        tok = approx_tokens(out)  # counts output text only; prompt tokens are not included
        actual = actual.add(Spend(tokens=tok, latency_ms=dt_ms, tool_calls=tool_calls))
        if "quality pass" in step.name.lower() and out.strip():
            # A quality pass returns the full rewritten draft, so replace instead of appending.
            working["draft"] = out
        else:
            working["draft"] += f"\n\n### Step {i}: {step.name}\n{out}\n"
    return working["draft"].strip(), actual

TASK = "Draft a 1-page project proposal for a logistics dashboard + fleet optimization pilot, including scope, timeline, and risks."

BUDGET = Budget(
    max_tokens=2200,
    max_latency_ms=3500,
    max_tool_calls=2,
)

options = generate_step_options(TASK)
best_plan = plan_under_budget(options, BUDGET, max_steps=6, beam_width=14)

print("=== SELECTED PLAN (budget-aware) ===")
for s in best_plan.steps:
    print(f"- {s.name} | est_spend={s.est_spend} | est_value={s.est_value}")
print("\nEstimated spend:", best_plan.spend)
print("Budget:", BUDGET)

print("\n=== EXECUTING PLAN ===")
draft, actual = execute_plan(TASK, best_plan)

print("\n=== OUTPUT DRAFT ===\n")
print(draft[:6000])

print("\n=== ACTUAL SPEND (approx) ===")
print(actual)
print("\nWithin budget?", actual.within(BUDGET))
```

We execute the selected plan and track actual resource usage step by step. Each step runs on the executor the planner chose, falling back to the local template when the API is unavailable, and the outputs are aggregated into a single draft. Comparing estimated and actual spend shows how planning assumptions can be validated and refined in practice.
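The comparison between estimated and actual spend can be turned into a feedback signal for the next planning round. One simple approach, sketched below under our own assumptions (the `Calibrator` class and its smoothing factor `alpha` are hypothetical, not part of the tutorial), keeps an exponential moving average of the actual-to-estimated ratio per executor and per cost dimension, then scales future estimates by it. The numbers in the usage lines are illustrative, not measured results.

```python
from dataclasses import dataclass, field
from typing import Dict

@dataclass
class Calibrator:
    alpha: float = 0.3  # weight given to the newest observation
    ratios: Dict[str, Dict[str, float]] = field(default_factory=dict)

    def update(self, executor: str, estimated: Dict[str, float],
               actual: Dict[str, float]) -> None:
        # Record actual/estimated per dimension, smoothed with an exponential moving average.
        per_exec = self.ratios.setdefault(executor, {})
        for dim, est in estimated.items():
            if est <= 0:
                continue  # ratio is undefined for zero estimates (e.g. tool_calls of a local step)
            r = actual[dim] / est
            prev = per_exec.get(dim, 1.0)  # start from "estimates are accurate"
            per_exec[dim] = (1 - self.alpha) * prev + self.alpha * r

    def adjust(self, executor: str, estimated: Dict[str, float]) -> Dict[str, float]:
        # Scale a raw estimate by the learned ratio (1.0 if nothing has been observed yet).
        per_exec = self.ratios.get(executor, {})
        return {dim: est * per_exec.get(dim, 1.0) for dim, est in estimated.items()}

cal = Calibrator(alpha=0.5)
# Illustrative: an LLM step estimated at 600 tokens / 1200 ms produced 450 tokens in 3000 ms.
cal.update("llm", {"tokens": 600, "latency_ms": 1200}, {"tokens": 450, "latency_ms": 3000})
print(cal.adjust("llm", {"tokens": 600, "latency_ms": 1200}))
# {'tokens': 525.0, 'latency_ms': 2100.0}
```

In the tutorial's flow, the adjusted values would be rounded back into each `StepOption.est_spend` before the next call to `plan_under_budget`; whether per-executor ratios are fine-grained enough depends on how much costs vary between steps.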

In conclusion, we demonstrated how a cost-aware planning agent can reason about its resource consumption and choose its actions accordingly. It executes only plans whose estimated spend fits within predefined budgets and tracks actual spend to validate those estimates, closing the loop between estimation and execution. More broadly, treating cost, latency, and tool usage as first-class decision variables rather than afterthoughts makes agentic AI systems more practical, controllable, and scalable.


The post How an AI Agent Chooses What to Do Under Tokens, Latency, and Tool-Call Budget Constraints? appeared first on MarkTechPost.