Google DeepMind researchers have introduced WeatherNext 2, an AI-based medium-range global weather forecasting system that now powers upgraded forecasts in Google Search, Gemini, Pixel Weather and Google Maps Platform’s Weather API, with Google Maps integration coming next. It combines a new Functional Generative Network (FGN) architecture with a large ensemble to deliver probabilistic forecasts that are faster, more accurate and higher resolution than the previous WeatherNext system. It is available as data products in Earth Engine and BigQuery, and as an early access model on Vertex AI.

From deterministic grids to functional ensembles

At the core of WeatherNext 2 is the FGN model. Instead of predicting a single deterministic future field, the model directly samples from the joint distribution over 15 day global weather trajectories. Each state 𝑋ₜ includes 6 atmospheric variables at 13 pressure levels and 6 surface variables on a 0.25 degree latitude longitude grid, with a 6 hour timestep. The model learns to approximate 𝑝(𝑋ₜ ∣ 𝑋ₜ₋₂:𝑡₋₁) and is run autoregressively from two initial analysis frames to generate ensemble trajectories.
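The sizes implied by these figures, and the two-frame autoregressive loop, can be sketched in a few lines of Python. This is a minimal illustration, not the production code: `toy_step`, `rollout` and `sample_ensemble` are hypothetical names, and the extrapolate-plus-noise rule merely stands in for a learned sample from 𝑝(𝑋ₜ ∣ 𝑋ₜ₋₂:𝑡₋₁).

```python
import random

# Figures taken from the text above.
N_ATMOS_VARS, N_LEVELS, N_SURFACE_VARS = 6, 13, 6
STEP_HOURS, FORECAST_DAYS = 6, 15

# Channels per grid point and autoregressive steps per trajectory.
N_CHANNELS = N_ATMOS_VARS * N_LEVELS + N_SURFACE_VARS  # 84
N_STEPS = FORECAST_DAYS * 24 // STEP_HOURS             # 60


def toy_step(x_prev2, x_prev1, rng):
    """Hypothetical stand-in for one sample of X_t given the two previous frames.

    Extrapolates the last two frames and adds Gaussian noise. The real
    model is a learned network, not this rule.
    """
    return [b + 0.5 * (b - a) + rng.gauss(0.0, 0.1)
            for a, b in zip(x_prev2, x_prev1)]


def rollout(x_init, n_steps, rng, step_fn=toy_step):
    """Generate one trajectory from two initial frames, feeding each
    sampled frame back in as conditioning for the next step."""
    x_prev2, x_prev1 = x_init
    trajectory = []
    for _ in range(n_steps):
        x_next = step_fn(x_prev2, x_prev1, rng)
        trajectory.append(x_next)
        x_prev2, x_prev1 = x_prev1, x_next
    return trajectory


def sample_ensemble(x_init, n_members, n_steps, seed=0):
    """Each member draws its own noise, so members diverge over the rollout."""
    rng = random.Random(seed)
    return [rollout(x_init, n_steps, rng) for _ in range(n_members)]
```

Calling `sample_ensemble(([0.0] * 3, [1.0] * 3), n_members=4, n_steps=N_STEPS)` returns four trajectories of 60 frames each over a three-value toy state. A real state would hold `N_CHANNELS` values at every grid point.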

Architecturally, each FGN instance follows a similar layout to the GenCast denoiser. A graph neural network encoder and decoder map between the regular grid and a latent representation defined on a spherical, 6 times refined icosahedral mesh. A graph transformer operates on the mesh nodes. The production FGN used for WeatherNext 2 is larger than GenCast, with about 180 million parameters per model seed, latent dimension 768 and 24 transformer layers, compared with 57 million parameters, latent 512 and 16 layers for GenCast. FGN also runs at a 6 hour timestep, where GenCast used 12 hour steps.

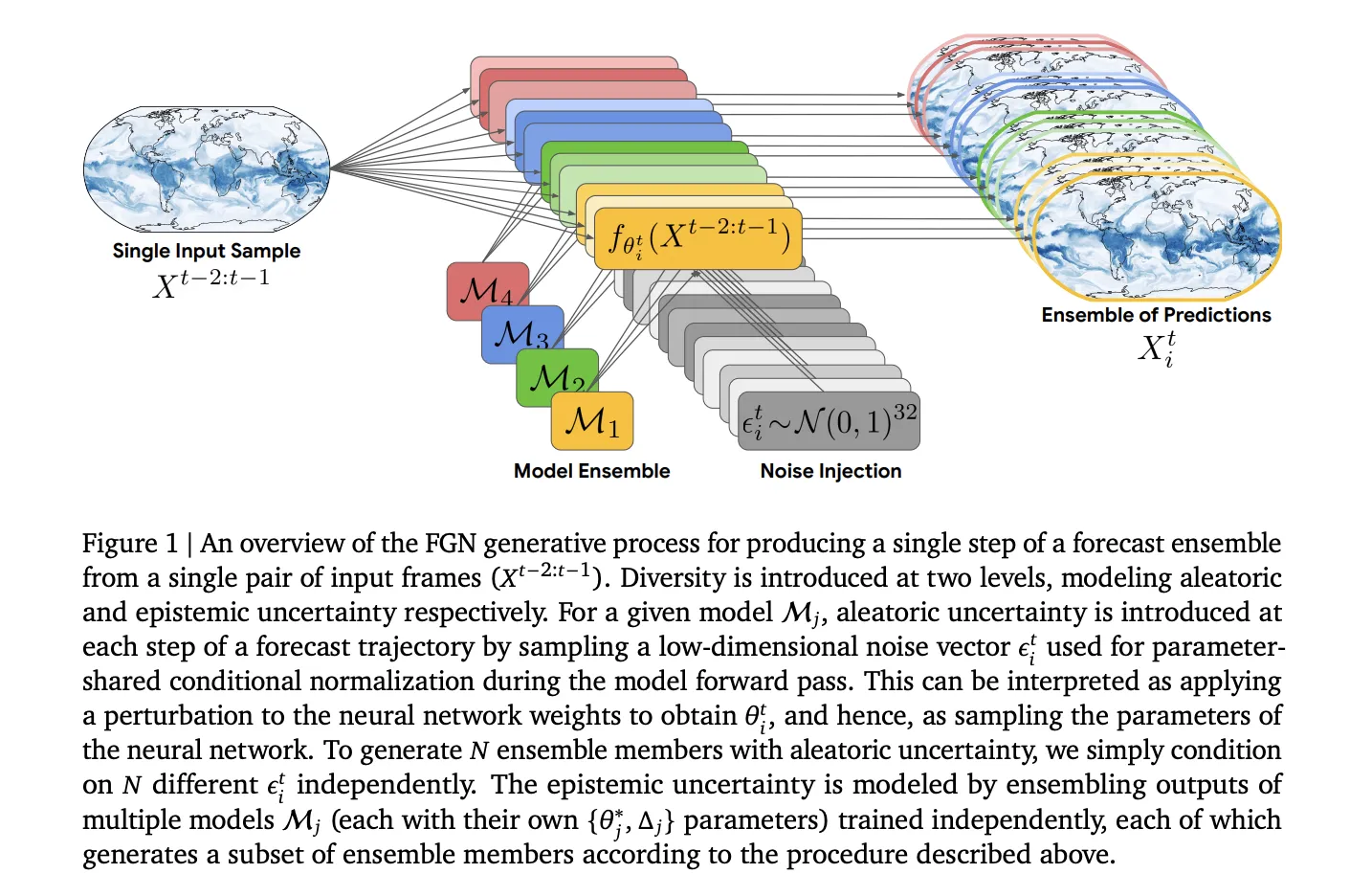

Modeling epistemic and aleatoric uncertainty in function space

FGN separates epistemic and aleatoric uncertainty in a way that is practical for large scale forecasting. Epistemic uncertainty, which comes from limited data and imperfect learning, is handled by a deep ensemble of 4 independently initialized and trained models. Each model seed has the architecture described above, and the system generates an equal number of ensemble members from each seed when producing forecasts.

Aleatoric uncertainty, which represents inherent variability in the atmosphere and unresolved processes, is handled through functional perturbations. At each forecast step, the model samples a 32 dimensional Gaussian noise vector 𝜖ₜ and feeds it through parameter shared conditional normalization layers inside the network. This effectively samples a new set of weights 𝜃ₜ for that forward pass. Different 𝜖ₜ values give different but dynamically coherent forecasts for the same initial condition, so ensemble members look like distinct plausible weather outcomes, not independent noise at each grid point.
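One way to picture noise-conditioned normalization is a layer norm whose scale and shift are computed from 𝜖ₜ instead of being fixed parameters. The sketch below assumes a simple linear map from the noise vector to the scale and shift; the source does not specify that mapping, and `make_projection`, `project` and `conditional_layer_norm` are illustrative names with random matrices standing in for learned weights.

```python
import math
import random

NOISE_DIM = 32  # dimension of the noise vector, from the text


def make_projection(noise_dim, n_features, rng, scale=0.1):
    """Random (noise_dim x n_features) matrix standing in for learned weights."""
    return [[rng.gauss(0.0, scale) for _ in range(n_features)]
            for _ in range(noise_dim)]


def project(eps, matrix):
    """Compute the vector-matrix product eps @ matrix."""
    return [sum(e * row[j] for e, row in zip(eps, matrix))
            for j in range(len(matrix[0]))]


def conditional_layer_norm(h, eps, w_scale, w_shift):
    """Normalize one node's feature vector h, then apply a scale and shift
    that depend on the noise vector eps (assumed linear dependence)."""
    mean = sum(h) / len(h)
    var = sum((x - mean) ** 2 for x in h) / len(h)
    inv_std = 1.0 / math.sqrt(var + 1e-6)
    scale = [1.0 + s for s in project(eps, w_scale)]
    shift = project(eps, w_shift)
    return [(x - mean) * inv_std * s + b for x, s, b in zip(h, scale, shift)]


rng = random.Random(0)
n_features = 8
w_scale = make_projection(NOISE_DIM, n_features, rng)
w_shift = make_projection(NOISE_DIM, n_features, rng)

# Every mesh node sees the same eps, so one draw perturbs the whole field.
nodes = [[rng.gauss(0.0, 1.0) for _ in range(n_features)] for _ in range(2)]
eps_a = [rng.gauss(0.0, 1.0) for _ in range(NOISE_DIM)]
eps_b = [rng.gauss(0.0, 1.0) for _ in range(NOISE_DIM)]
member_a = [conditional_layer_norm(h, eps_a, w_scale, w_shift) for h in nodes]
member_b = [conditional_layer_norm(h, eps_b, w_scale, w_shift) for h in nodes]
```

Because the projection weights are shared and only the 32 numbers in `eps` change between members, `member_a` and `member_b` differ everywhere in a correlated way rather than by independent per-node noise.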

Training on marginals with CRPS, learning joint structure

A key design choice is that FGN is trained only on per location, per variable marginals, not on explicit multivariate targets. The model uses the Continuous Ranked Probability Score (CRPS) as the training loss, computed with a fair estimator on ensemble samples at each grid point and averaged over variables, levels and time. CRPS encourages sharp, well calibrated predictive distributions for each scalar quantity. During later training stages the authors introduce short autoregressive rollouts, up to 8 steps, and back-propagate through the rollout, which improves long range stability but is not strictly required for good joint behavior.
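For reference, the standard fair CRPS estimator for an ensemble of M members x₁..x_M and an observation y is the mean absolute error of the members minus the sum of all pairwise member differences divided by 2M(M − 1). The sketch below implements that textbook form for scalar targets; whether the training code applies additional weighting is not stated in the source, and the function names are illustrative.

```python
def fair_crps(members, obs):
    """Fair CRPS estimate for one scalar target.

    members: list of M >= 2 ensemble values; obs: the verifying value.
    Dividing the pairwise term by M * (M - 1) instead of M * M removes
    the finite-ensemble bias of the plain estimator.
    """
    m = len(members)
    if m < 2:
        raise ValueError("fair CRPS needs at least two ensemble members")
    error_term = sum(abs(x - obs) for x in members) / m
    pair_sum = sum(abs(xi - xj) for xi in members for xj in members)
    spread_term = pair_sum / (2 * m * (m - 1))
    return error_term - spread_term


def mean_fair_crps(ensemble_fields, obs_field):
    """Average fair CRPS over flattened targets (grid points, variables, levels).

    ensemble_fields: list of M lists of equal length; obs_field: one list.
    """
    scores = [fair_crps([member[i] for member in ensemble_fields], y)
              for i, y in enumerate(obs_field)]
    return sum(scores) / len(scores)
```

Each target is scored independently here, which is what "trained only on marginals" means in practice: nothing in this loss compares values across grid points or variables.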

Despite using only marginal supervision, the low dimensional noise and shared functional perturbations force the model to learn realistic joint structure. With a single 32 dimensional noise vector influencing an entire global field, the easiest way to reduce CRPS everywhere is to encode physically consistent spatial and cross variable correlations along that manifold, rather than independent fluctuations. Experiments confirm that the resulting ensemble captures realistic regional aggregates and derived quantities.

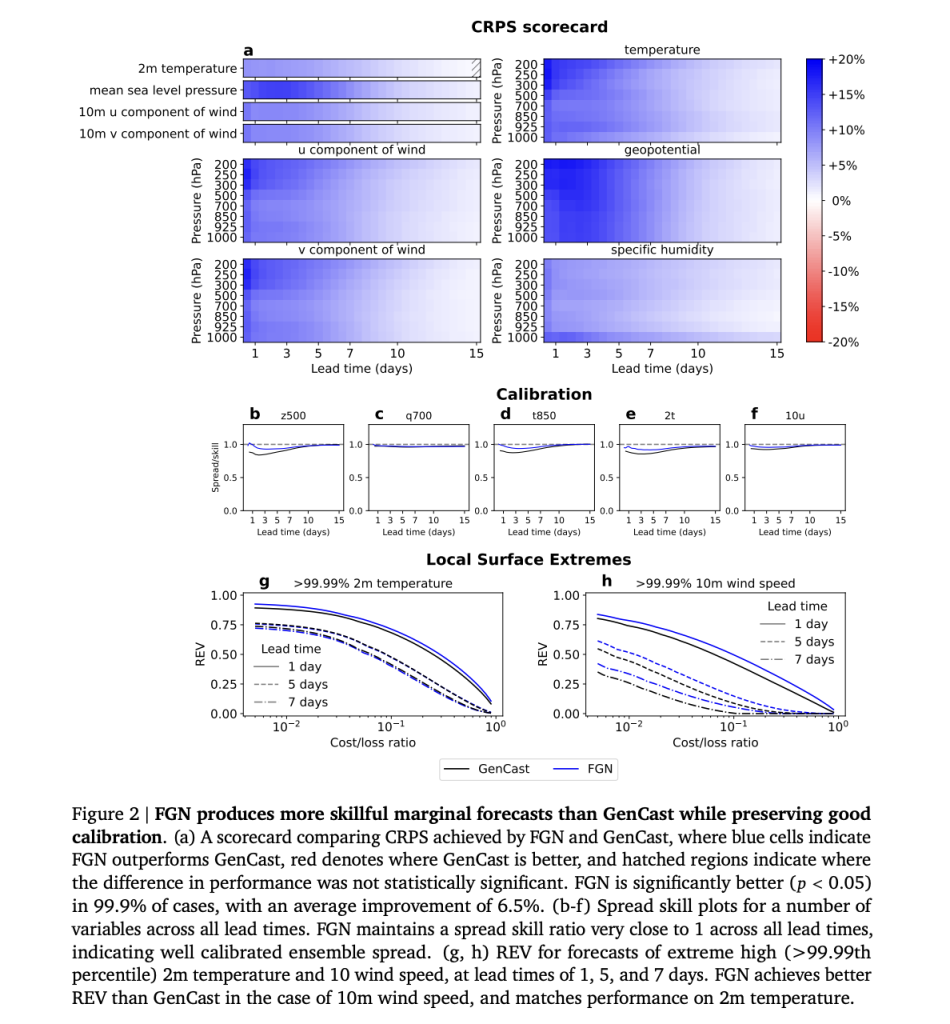

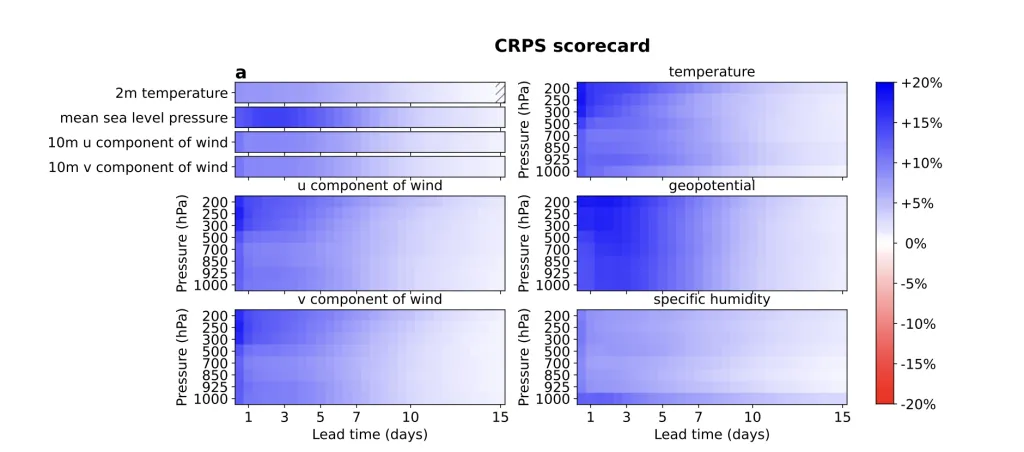

Measured gains over GenCast and traditional baselines

On marginal metrics, WeatherNext 2’s FGN ensemble clearly improves over GenCast. FGN achieves better CRPS in 99.9% of cases (variable, level and lead time combinations), with statistically significant gains, an average improvement of about 6.5% and maximum gains near 18% for some variables at shorter lead times. Ensemble mean root mean squared error (RMSE) also improves while maintaining good spread skill relationships, indicating that ensemble spread is consistent with forecast error out to 15 days.
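A common way to check the spread skill relationship is the ratio of ensemble spread to the RMSE of the ensemble mean, which should be close to 1 for a well calibrated ensemble. The sketch below uses the usual finite-ensemble correction factor √((M + 1)/M); this is a generic diagnostic and may differ in detail from the one in the paper. `synthetic_case` is a hypothetical helper that generates toy data.

```python
import math
import random
import statistics


def spread_skill_ratio(ensemble, obs):
    """ensemble: list of M member lists of length N; obs: list of length N."""
    m = len(ensemble)
    n = len(obs)
    columns = list(zip(*ensemble))  # member values grouped per target
    # Skill: mean squared error of the ensemble mean.
    mse = sum((statistics.fmean(col) - y) ** 2
              for col, y in zip(columns, obs)) / n
    # Spread: mean of the unbiased ensemble variance.
    mean_var = sum(statistics.variance(col) for col in columns) / n
    return math.sqrt((m + 1) / m) * math.sqrt(mean_var) / math.sqrt(mse)


def synthetic_case(n_targets, n_members, member_sigma, rng):
    """Toy data: truth ~ N(mu, 1) and members ~ N(mu, member_sigma)."""
    ensemble = [[] for _ in range(n_members)]
    obs = []
    for _ in range(n_targets):
        mu = rng.gauss(0.0, 1.0)
        obs.append(rng.gauss(mu, 1.0))
        for member in ensemble:
            member.append(rng.gauss(mu, member_sigma))
    return ensemble, obs
```

With `member_sigma=1.0` the members come from the same distribution as the truth and the ratio lands near 1. With `member_sigma=0.5` the ensemble is under-dispersed and the ratio falls well below 1.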

To test joint structure, the research team evaluates CRPS after pooling over spatial windows at different scales and on derived quantities such as 10 meter wind speed and the difference in geopotential height between 300 hPa and 500 hPa. FGN improves both average pooled and max pooled CRPS relative to GenCast, showing that it better models region level aggregates and multivariate relationships, not only point wise values.
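The idea behind pooled evaluation is to aggregate each ensemble member and the ground truth first, then score the aggregated values. The sketch below assumes non-overlapping square windows on a flat 2D grid, which simplifies whatever pooling the paper applies on the sphere. The helper names are illustrative, and wind speed is derived from assumed u and v components.

```python
import math


def pool2d(field, k, reduce):
    """Pool a 2D field (list of rows) over non-overlapping k x k windows."""
    h, w = len(field), len(field[0])
    return [[reduce([field[i + di][j + dj]
                     for di in range(k) for dj in range(k)])
             for j in range(0, w - k + 1, k)]
            for i in range(0, h - k + 1, k)]


def average(values):
    return sum(values) / len(values)


def wind_speed(u, v):
    """Derived quantity: speed from u and v wind components."""
    return [[math.hypot(a, b) for a, b in zip(row_u, row_v)]
            for row_u, row_v in zip(u, v)]


def fair_crps(members, obs):
    """Fair CRPS estimate for one scalar target (M >= 2 members)."""
    m = len(members)
    error_term = sum(abs(x - obs) for x in members) / m
    pair_sum = sum(abs(xi - xj) for xi in members for xj in members)
    return error_term - pair_sum / (2 * m * (m - 1))


def pooled_crps(ens_fields, obs_field, k, reduce):
    """Pool every member and the truth, then average CRPS over windows.

    Use reduce=average for average pooling or reduce=max for max pooling.
    """
    pooled_ens = [pool2d(f, k, reduce) for f in ens_fields]
    pooled_obs = pool2d(obs_field, k, reduce)
    scores = [fair_crps([m[i][j] for m in pooled_ens], pooled_obs[i][j])
              for i in range(len(pooled_obs))
              for j in range(len(pooled_obs[0]))]
    return sum(scores) / len(scores)
```

A model with good point wise marginals but unrealistic spatial correlation can still score poorly here, because the distribution of a window average or maximum depends on how neighboring points vary together.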

Tropical cyclone tracking is a particularly important use case. Using an external tracker, the research team computes ensemble mean track errors. FGN achieves position errors that correspond to roughly one extra day of useful predictive skill compared with GenCast. Even when constrained to a 12 hour timestep version, FGN still outperforms GenCast beyond 2 day lead times. Relative Economic Value analysis on track probability fields also favors FGN over GenCast across a range of cost loss ratios, which is crucial for decision makers planning evacuations and asset protection.
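Relative Economic Value is usually defined through the static cost loss decision model: a user pays cost C to protect or suffers loss L if an unprotected event occurs, and the score compares the forecast-driven expense with climatology (0) and a perfect forecast (1). The sketch below follows that textbook formulation with expenses normalized by L. The source does not give the exact evaluation setup, so the thresholds and inputs here are assumptions.

```python
def relative_economic_value(probs, events, cost_loss_ratio, thresholds):
    """Best value over probability thresholds for one cost/loss ratio.

    probs: forecast event probabilities; events: matching booleans;
    cost_loss_ratio: C / L, strictly between 0 and 1.
    """
    n = len(events)
    base_rate = sum(events) / n
    alpha = cost_loss_ratio
    if not (0 < alpha < 1 and 0 < base_rate < 1):
        raise ValueError("need 0 < C/L < 1 and a base rate strictly in (0, 1)")

    # Expense per case in units of L: always or never protect vs. perfect.
    expense_climate = min(alpha, base_rate)
    expense_perfect = alpha * base_rate

    best = float("-inf")
    for p in thresholds:
        hits = false_alarms = misses = 0
        for prob, event in zip(probs, events):
            act = prob >= p
            if act and event:
                hits += 1
            elif act:
                false_alarms += 1
            elif event:
                misses += 1
        # Pay the cost whenever acting and the full loss on every miss.
        expense_forecast = (alpha * (hits + false_alarms) + misses) / n
        value = ((expense_climate - expense_forecast)
                 / (expense_climate - expense_perfect))
        best = max(best, value)
    return best
```

Sweeping `cost_loss_ratio` over a grid of values gives the kind of value curve used to compare two forecasting systems for users with different risk tolerances.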

Key Takeaways

- Functional Generative Network core: WeatherNext 2 is built on the Functional Generative Network, a graph transformer ensemble that predicts full 15 day global trajectories on a 0.25° grid with a 6 hour timestep, modeling 6 atmospheric variables at 13 pressure levels plus 6 surface variables.

- Explicit modeling of epistemic and aleatoric uncertainty: The system combines 4 independently trained FGN seeds for epistemic uncertainty with a shared 32 dimensional noise input that perturbs network normalization layers for aleatoric uncertainty, so each sample is a dynamically coherent alternative forecast, not point wise noise.

- Trained on marginals, improves joint structure: FGN is trained only on per location marginals using fair CRPS, yet still improves joint spatial and cross variable structure over the previous diffusion based WeatherNext Gen model, including lower pooled CRPS on region level aggregated fields and derived variables such as 10 meter wind speed and geopotential thickness.

- Consistent accuracy gains over the GenCast based WeatherNext Gen: WeatherNext 2 achieves better CRPS than the earlier GenCast based WeatherNext Gen model on 99.9% of variable, level and lead time combinations, with average CRPS improvements of around 6.5%, improved ensemble mean RMSE and better relative economic value for extreme event thresholds and tropical cyclone tracks.
