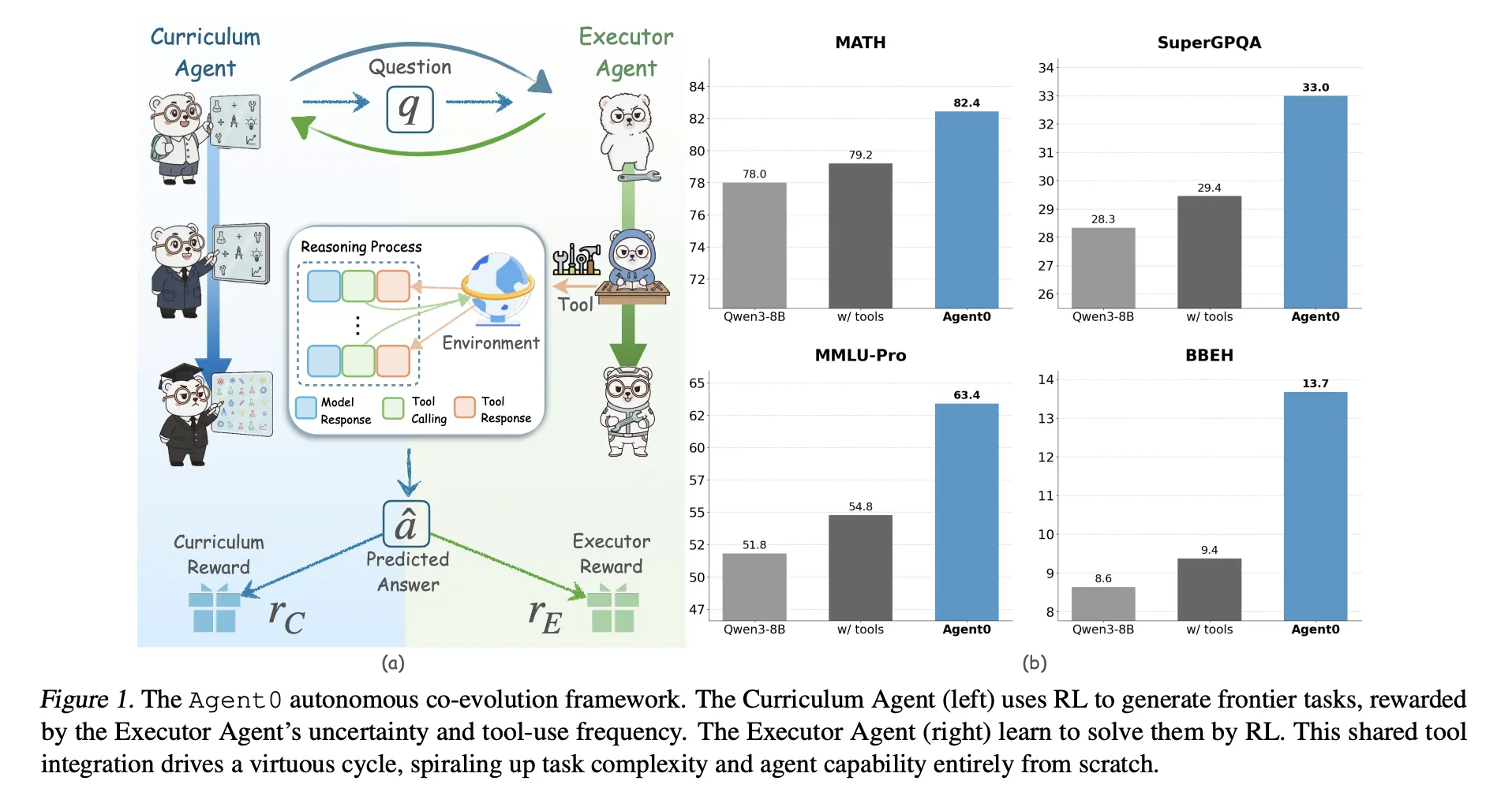

Large language models need huge human datasets, so what happens if the model must create all its own curriculum and teach itself to use tools? A team of researchers from UNC-Chapel Hill, Salesforce Research and Stanford University introduce ‘Agent0’, a fully autonomous framework that evolves high-performing agents without external data through multi-step co-evolution and seamless tool integration

Agent0 targets mathematical and general reasoning. It shows that careful task generation and tool integrated rollouts can push a base model beyond its original capabilities, across ten benchmarks.

Two agents from one base model

Agent0 starts from a base policy π_base, for example Qwen3 4B Base or Qwen3 8B Base. It clones this policy into:

- a Curriculum Agent πθ that generates tasks,

- an Executor Agent πϕ that solves those tasks with a Python tool.

Training proceeds in iterations with two stages per iteration:

- Curriculum evolution: The curriculum agent generates a batch of tasks. For each task, the executor samples multiple responses. A composite reward measures how uncertain the executor is, how often it uses the tool and how diverse the batch is. πθ is updated with Group Relative Policy Optimization (GRPO) using this reward.

- Executor evolution: The trained curriculum agent is frozen. It generates a large pool of tasks. Agent0 filters this pool to keep only tasks near the executor’s capability frontier, then trains the executor on these tasks using an ambiguity aware RL objective called Ambiguity Dynamic Policy Optimization (ADPO).

This loop creates a feedback cycle. As the executor becomes stronger by using the code interpreter, the curriculum must generate more complex, tool reliant problems to keep its reward high.

How the curriculum agent scores tasks?

The curriculum reward combines three signals:

Uncertainty reward: For each generated task x, the executor samples k responses and majority votes a pseudo answer. Self consistency p̂(x) is the fraction of responses that agree with this majority. The reward is maximal when p̂ is close to 0.5 and low when tasks are too easy or too hard. This encourages tasks that are challenging but still solvable for the current executor.

Tool use reward: The executor can trigger a sandboxed code interpreter using python tags and receives results tagged as output. Agent0 counts the number of tool calls in a trajectory and gives a scaled, capped reward, with a cap C set to 4 in experiments. This favors tasks that actually require tool calls rather than pure mental arithmetic.

Repetition penalty: Within each curriculum batch, Agent0 measures pairwise similarity between tasks using a BLEU based distance. Tasks are clustered, and a penalty term increases with cluster size. This discourages the curriculum from generating many near duplicates.

A composite reward multiplies a format check with a weighted sum of uncertainty and tool rewards minus the repetition penalty. This composite value feeds into GRPO to update πθ.

How the executor learns from noisy self labels?

The executor is also trained with GRPO but on multi turn, tool integrated trajectories and pseudo labels instead of ground truth answers.

Frontier dataset construction: After curriculum training in an iteration, the frozen curriculum generates a large candidate pool. For each task, Agent0 computes self consistency p̂(x) with the current executor and keeps only tasks where p̂ lies in an informative band, for example between 0.3 and 0.8. This defines a challenging frontier dataset that avoids trivial or impossible problems.

Multi turn tool integrated rollouts: For each frontier task, the executor generates a trajectory that can interleave:

- natural language reasoning tokens,

pythoncode segments,outputtool feedback.

Generation pauses when a tool call appears, executes the code in a sandboxed interpreter built on VeRL Tool, then resumes conditioned on the result. The trajectory terminates when the model produces a final answer inside {boxed ...} tags.

A majority vote across sampled trajectories defines a pseudo label and a terminal reward for each trajectory.

ADPO, ambiguity aware RL: Standard GRPO treats all samples equally, which is unstable when labels come from majority voting on ambiguous tasks. ADPO modifies GRPO in two ways using p̂ as an ambiguity signal.

- It scales the normalized advantage with a factor that increases with self consistency, so trajectories from low confidence tasks contribute less.

- It sets a dynamic upper clipping bound for the importance ratio, which depends on self consistency. Empirical analysis shows that fixed upper clipping mainly affects low probability tokens. ADPO relaxes this bound adaptively, which improves exploration on uncertain tasks, as visualized by the up clipped token probability statistics.

Results on mathematical and general reasoning

Agent0 is implemented on top of VeRL and evaluated on Qwen3 4B Base and Qwen3 8B Base. It uses a sandboxed Python interpreter as the single external tool.

The research team evaluate on ten benchmarks:

- Mathematical reasoning: AMC, Minerva, MATH, GSM8K, Olympiad Bench, AIME24, AIME25.

- General reasoning: SuperGPQA, MMLU Pro, BBEH.

They report pass@1 for most datasets and mean@32 for AMC and AIME tasks.

For Qwen3 8B Base, Agent0 reaches:

- math average 58.2 versus 49.2 for the base model,

- overall general average 42.1 versus 34.5 for the base model.

Agent0 also improves over strong data free baselines such as R Zero, Absolute Zero, SPIRAL and Socratic Zero, both with and without tools. On Qwen3 8B, it surpasses R Zero by 6.4 percentage points and Absolute Zero by 10.6 points on the overall average. It also beats Socratic Zero, which relies on external OpenAI APIs.

Across three co evolution iterations, average math performance on Qwen3 8B increases from 55.1 to 58.2 and general reasoning also improves per iteration. This confirms stable self improvement rather than collapse.

Qualitative examples show that curriculum tasks evolve from basic geometry questions to complex constraint satisfaction problems, while executor trajectories mix reasoning text with Python calls to reach correct answers.

Key Takeaways

- Fully data free co evolution: Agent0 eliminates external datasets and human annotations. Two agents, a curriculum agent and an executor agent, are initialized from the same base LLM and co evolve only via reinforcement learning and a Python tool.

- Frontier curriculum from self uncertainty: The curriculum agent uses the executor’s self consistency and tool usage to score tasks. It learns to generate frontier tasks that are neither trivial nor impossible, and that explicitly require tool integrated reasoning.

- ADPO stabilizes RL with pseudo labels: The executor is trained with Ambiguity Dynamic Policy Optimization. ADPO down weights highly ambiguous tasks and adapts the clipping range based on self consistency, which makes GRPO style updates stable when rewards come from majority vote pseudo labels.

- Consistent gains on math and general reasoning: On Qwen3 8B Base, Agent0 improves math benchmarks from 49.2 to 58.2 average and general reasoning from 34.5 to 42.1, which corresponds to relative gains of about 18 percent and 24 percent.

- Outperforms prior zero data frameworks: Across ten benchmarks, Agent0 surpasses previous self evolving methods such as R Zero, Absolute Zero, SPIRAL and Socratic Zero, including those that already use tools or external APIs. This shows that the co evolution plus tool integration design is a meaningful step beyond earlier single round self play approaches.

Editorial Notes

Agent0 is an important step toward practical, data free reinforcement learning for tool integrated reasoning. It shows that a base LLM can act as both Curriculum Agent and Executor Agent, and that GRPO with ADPO and VeRL Tool can drive stable improvement from majority vote pseudo labels. The method also demonstrates that tool integrated co evolution can outperform prior zero data frameworks such as R Zero and Absolute Zero on strong Qwen3 baselines. Agent0 makes a strong case that self evolving, tool integrated LLM agents are becoming a realistic training paradigm.

Check out the PAPER and Repo. Feel free to check out our GitHub Page for Tutorials, Codes and Notebooks. Also, feel free to follow us on Twitter and don’t forget to join our 100k+ ML SubReddit and Subscribe to our Newsletter. Wait! are you on telegram? now you can join us on telegram as well.