From all my years in research and consulting, I think I’ve learned a thing or two about marketing worth sharing. Enduring fundamentals, mostly, yet often overlooked. So, this year, I’m sharing some for your consideration. I hope they’re helpful.

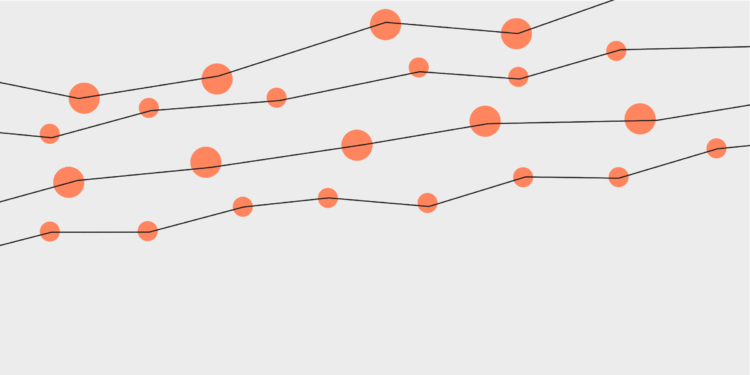

This week’s thought: Statistical significance is a perilous safeguard in marketing.

Most marketing researchers don’t understand the concept of statistical significance. So, marketers, along with planners and buyers, can be forgiven for misunderstanding it, too. For all the good it brings to interpreting research, it brings just as much bad to making decisions. That is not because statistical significance is out of place in marketing research and marketing, but because it is frequently misused and misapplied, which makes it a perilous safeguard.

Everybody in marketing has at least a casual familiarity with statistical significance. Especially for data tables comparing two groups, say men versus women. Unless some statistical test tells us that the difference we see when comparing men versus women is a statistically significant result, we report no difference between men and women on that question or characteristic. This is the value of statistical significance—it stops us from interpreting random fluctuations as real differences. It’s a safeguard.

But statistical testing is not as straightforward as we tend to think. Few people, including most researchers, know what’s really being tested or how. The result is an over-reliance on, or a misapplication of, statistical significance for making decisions and framing opportunities, which makes it perilous.

To begin with, most of what we do in marketing should not turn on small differences that require statistical testing to determine if they are real or random. Marketing decisions involving millions of dollars should be based on big differences. And big differences don’t require stat testing—there’s nothing ambiguous to sort out. The naked eye is plenty. Only small differences require stat testing.

Statisticians are clear on this point. Statistical tests were developed for scientific fields in which small differences matter. This is not the case in marketing (with the exception of media buying).

A 52%/48% difference may be statistically significant, but it is teensy in the broader context of the marketplace. In this example, only slightly more men than women, say, agree, and nearly as many disagree. This is not a result that should give a marketer any sense of assurance about success, statistical significance notwithstanding.
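
To see how a gap this small can clear the conventional bar, here is a minimal sketch of a pooled two-proportion z-test. It reads the example as 52% of men versus 48% of women agreeing, and the sample sizes (2,500 per group) are hypothetical, chosen only for illustration. The function name is mine, not something from a particular library.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_z_test(p1, n1, p2, n2):
    """Two-sided pooled z-test for the difference between two proportions."""
    # Pooled proportion under the null hypothesis of no difference.
    pooled = (p1 * n1 + p2 * n2) / (n1 + n2)
    se = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Hypothetical survey: 52% of 2,500 men agree vs. 48% of 2,500 women.
z, p_value = two_proportion_z_test(0.52, 2500, 0.48, 2500)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

Under these assumed sample sizes the test statistic comes out near 2.83 and the p-value is well under 0.05, so the gap counts as "significant" even though the two groups are nearly indistinguishable in practice.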

Marketers should be looking for needs, ads, or opinions that are overwhelmingly more characteristic of men compared to women, like 70%/30% or 80%/20%. Big differences like these are indeed rare. But investing behind statistically significant small differences, instead of doing the hard work to uncover big differences, is much, if not all, of the explanation for the marginal or failed impact of so many marketing campaigns.

Statistical significance creates an impression of precision, but this is flattery that marketing can mostly live without. As I have written before (“Marketing Is Not An Exact Science”), by and large, marketing can get by perfectly well with imprecision. Marketing decisions tend to be go/no-go decisions. One thing versus another: this instead of that. All that matters is knowing whether one choice is better than another. It doesn’t matter how much better or how much worse. It’s yes or no, on or off, launch or shelve, the current or the new. It’s whether it’s a big enough chance of success or not, above the threshold of action or below.

When it’s go/no-go, it doesn’t matter if the research result is 65%/35% or 90%/10%. Either way, it’s a go (or a no-go). Nailing down the precise difference is irrelevant to the decision. As long as the difference is big, the decision is obvious.

We get caught up in stat testing and overlook the most important part of analyzing data—the relevant benchmark or standard of comparison—the threshold of action. A good ad, for example, is not one with a test score significantly above the norm. It is one that exceeds some benchmark, indicating it is likely to generate an acceptable return on investment. Such a benchmark would typically come from financial models, not marketing research stat testing.

Statistical significance alone is not enough. A significant difference must also be meaningful, which is established by benchmarks or standards of comparison. Such benchmarks are independent of stat testing, and they generally require big differences obvious to the naked eye, making stat testing peripheral. Marketers want to invest in something that is highly characteristic of men, not something so marginally different from women that it has to be statistically tested.

Even when statistical significance is appropriate, we rely on it in an unthinking way. The conventions of modern-day statistical testing came from Sir Ronald Fisher a century ago, and they reflect his balancing at that time of the need for scientific precision with the costs of collecting and analyzing data. There is nothing carved in stone about a 95 percent confidence level. Fisher articulated good reasons for his guidelines, but these conventions are arbitrary and often unhelpful for marketing decisions.

Maybe more risk-taking is better, in which case, perhaps an 80 percent confidence level is appropriate. However, choosing a confidence level that aligns with risks and opportunities requires incorporating a significant amount of additional information into the research process. The costs of data must figure in as well as the costs of failure and the likelihood of success. This is not something most researchers know how to do, and thus, we default to Fisher. Yet, this is the way researchers should be coaching marketers through data-driven decision-making, particularly in today’s fast-paced marketplace.

Fisher’s conventions are intentionally conservative, which means that marketers who rely on them will be flat-footed. Marketers may have fewer failures, but as a result, they will miss out on many opportunities to succeed. This is built into the mathematics of stat testing as it is typically practiced today.

Somewhere in the back of our minds, we remember hearing about Type 1 and Type 2 errors. Conventional stat testing is designed to minimize Type 1 error, which inherently means more Type 2 error. For a given sample size, it’s zero-sum.

A Type 1 error is concluding that there is a difference when, in fact, there is no difference. A Type 2 error is concluding there is no difference when, in fact, there is a difference. Minimizing Type 1 error keeps wrong ideas from making their way into scientific orthodoxy, which was Fisher’s priority. For marketers, it means fewer failures because stat testing sets a high bar for reporting differences. But deciding to live with more failures, or a higher rate of failure, means a greater chance of stumbling across a big breakout marketing success, something that strong Type 1 protection would find to be statistically insignificant.

Balancing Type 1 and Type 2 error is all about a concept called power. Marketing researchers almost never think about power. Minimizing Type 1 error means higher Type 2 error, thus lower power. Which is to say, a lower ability to detect a difference that is a real difference. Living with a higher Type 1 error in marketing, such as 80 percent confidence instead of 95 percent, would mean more failures, but it would also increase power or chances of finding a success that would otherwise go undetected.
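
The trade-off can be made concrete with a rough power calculation. The sketch below uses the normal approximation for a two-sided test of two proportions. The scenario is hypothetical: a real difference of 55% versus 50% and 400 respondents per group. The function name is my own.

```python
from math import sqrt
from statistics import NormalDist

def power_two_proportions(p1, p2, n_per_group, confidence):
    """Approximate power of a two-sided z-test comparing two proportions.

    Normal approximation with an unpooled standard error.
    """
    std_normal = NormalDist()
    alpha = 1 - confidence                      # Type 1 error rate
    z_crit = std_normal.inv_cdf(1 - alpha / 2)  # two-sided critical value
    se = sqrt(p1 * (1 - p1) / n_per_group + p2 * (1 - p2) / n_per_group)
    shift = abs(p1 - p2) / se
    # Chance the test statistic lands beyond either critical value.
    return std_normal.cdf(shift - z_crit) + std_normal.cdf(-shift - z_crit)

# Hypothetical case: a true 55% vs. 50% difference, 400 people per group.
power_95 = power_two_proportions(0.55, 0.50, 400, confidence=0.95)
power_80 = power_two_proportions(0.55, 0.50, 400, confidence=0.80)
print(f"power at 95% confidence: {power_95:.2f}")
print(f"power at 80% confidence: {power_80:.2f}")
```

In this assumed scenario, relaxing the confidence level from 95 percent to 80 percent nearly doubles the chance of detecting the real difference, from roughly 0.29 to roughly 0.56, at the price of accepting a 20 percent Type 1 error rate instead of 5 percent.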

The choice between minimizing failures versus maximizing power is a financial calculation, not a marketing issue per se. The balance of failures versus power comes from business strategy.

Living with less Type 1 error protection is how private equity firms invest. PE firms build up portfolios of companies, knowing full well that most will fail. But the few that succeed will more than pay off the aggregate investment. Compiling portfolios is all about maximizing power at the cost of more failures.

Unfortunately, most marketing researchers don’t fully comprehend the complexities and trade-offs of statistical significance. We often fall back on a century-old set of conventions that embody decisions made long ago about risks and errors, which we accept unknowingly and without reflection.

A simple illustration of this is the formula used to determine the sample size for a survey. It’s easy to figure out how big a sample we need, given the level of Type 1 error protection we want and some guesstimate of variance. But the simple formula that we use to do this is a shorthand formula. It is not the full formula.

The full formula also includes a term for Type 2 error and a term for the costs of information. If we really wanted to balance risks and rewards relative to costs, we’d use the full formula and think explicitly about those considerations as we are calculating the appropriate sample size. We almost never do so. We omit Type 2 error and information costs and focus only on Type 1 error. Thereby defaulting into business strategies compatible with Fisher’s judgments about knowledge-building rather than thinking for ourselves about the kinds of business strategies best suited for the modern marketplace. If we did this sort of hard evaluation, we would probably set up our stat testing differently.
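
As a rough illustration of the gap between the two, the sketch below puts a common shorthand sample-size formula next to a common textbook formula that adds a Type 2 (power) term. These are standard versions and an assumption on my part about which formulas are meant here. The term for the costs of information is not modeled at all. The function names and the input values are hypothetical.

```python
from math import ceil
from statistics import NormalDist

STD_NORMAL = NormalDist()

def shorthand_sample_size(margin_of_error, confidence=0.95, p=0.5):
    """Survey sample size from Type 1 protection and a variance guess only."""
    z = STD_NORMAL.inv_cdf(1 - (1 - confidence) / 2)
    return ceil(z ** 2 * p * (1 - p) / margin_of_error ** 2)

def sample_size_with_power(p1, p2, confidence=0.95, power=0.80):
    """Per-group sample size for detecting p1 vs. p2, with a Type 2 term."""
    z_alpha = STD_NORMAL.inv_cdf(1 - (1 - confidence) / 2)  # Type 1 term
    z_beta = STD_NORMAL.inv_cdf(power)                      # Type 2 term
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p1 - p2) ** 2)

# Shorthand: +/- 3 points at 95% confidence, worst-case variance (p = 0.5).
n_short = shorthand_sample_size(0.03)

# Fuller version: detect 55% vs. 50% with 80% power, at two confidence levels.
n_95 = sample_size_with_power(0.55, 0.50, confidence=0.95)
n_80 = sample_size_with_power(0.55, 0.50, confidence=0.80)
print(n_short, n_95, n_80)
```

The shorthand version never asks what size of difference we hope to detect or how often we are willing to miss it. The fuller version forces both questions, and it shows how much sample (and therefore cost) a lower confidence level would save for the same power.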

If we gave more consideration to power and Type 2 error, we would also realize that Big Data has ushered in the converse problem of too much power. With very large datasets, almost any stat test will find a statistically significant result. We wind up chasing our tails, a result that many have puzzled over as a problem with the immoderate amount of A/B testing now going on with social media and digital campaigns.
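
A back-of-the-envelope calculation shows why. The sketch below estimates the smallest per-group sample at which a given gap between two groups becomes statistically significant, assuming a pooled two-proportion z-test with the two percentages centered on 50%. The function name and the gaps tested are hypothetical.

```python
from math import ceil
from statistics import NormalDist

def min_n_for_significance(gap, confidence=0.95):
    """Smallest per-group n at which `gap` is significant (two-sided test).

    Assumes two equal-sized groups with proportions 0.5 + gap/2 and
    0.5 - gap/2, so the pooled proportion is 0.5.
    """
    z_crit = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    pooled_variance = 0.5 * (1 - 0.5)
    # Solve gap / sqrt(2 * pooled_variance / n) >= z_crit for n.
    return ceil(2 * pooled_variance * (z_crit / gap) ** 2)

for gap in (0.04, 0.01, 0.001):
    n = min_n_for_significance(gap)
    print(f"gap of {gap:.1%}: significant from about {n:,} per group")
```

Under these assumptions, a four-point gap needs about 1,200 respondents per group, a one-point gap needs about 19,000, and even a tenth of a point becomes "significant" with a couple of million records per group, which is not unusual for digital campaign data.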

It’s a good thing to take more risks, but not by going to the other extreme. The objective should be balancing errors against costs and opportunities. Not to blindly accept Type 1 conventions or to blindly ignore Type 2 extremes.

While we’re at it, let’s remind ourselves what statistical significance really tells us. A stat test provides a statistic that tells us something about our dataset, and only that. It has nothing to say about any hypothesis or theory we may have about the marketplace. Statistical significance (or the lack thereof) does not say that our hypothesis or theory is true or false. It is simply the odds of getting a dataset at least as extreme as the one we have (e.g., a survey of 1,000 respondents) if the null hypothesis (no differences between men and women, say) is true.

A statistically significant result means that our dataset, or sample, is highly unlikely to occur if the null hypothesis is true. Thus, at the chosen confidence level (typically, 95 percent), we reject the null hypothesis of no differences. Stat testing just tells us how rare a dataset like ours would be if there were truly no differences.

We may have a theory about why men and women are different, but a stat test that finds a statistically significant difference has nothing to say about that theory. It is only about the likelihood of drawing a sample like that if the null hypothesis of no differences is true.

This point is worth remembering because it is often the case that the differences observed have nothing to do with the groups being compared. It could be some other factor. The difference between men and women, for example, could be due to income, with men earning more on average, so any high-versus-low-income comparison, not just men versus women, would show this difference.

In other words, stat testing often tells us nothing of interest. It flags a difference but tells us little more than that. We have to think and bring other knowledge to bear. We must interpret and apply our experience and expertise. We have to do more than rely solely on statistical significance.

The danger of over-reliance on stat testing is seen in the p-hacking and replication crises plaguing the social sciences. However, there is a danger in ignoring statistical significance as well. Our hypotheses and theories should be predictive. Our minds tend to misinterpret random fluctuations as real patterns. Stat testing keeps us honest. It’s a critical safeguard. But perilous, too. The job of marketing researchers is to strike a balance between these two for better marketing decision-making.

Contributed to Branding Strategy Insider By Walker Smith, Chief Knowledge Officer, Brand & Marketing at Kantar


