- RAG delivers real-time answers using external data—great for fast-changing content.

- Fine Tuning builds in expertise—ideal for regulated, high-accuracy tasks.

- RAG is quick to launch; Fine Tuning wins on long-term efficiency.

- Fine Tuning ensures control; RAG offers flexibility and reach.

- Hybrid models blend both—perfect for enterprise-grade AI tools.

- Choose based on use case—RAG for dynamic data, Fine Tuning for precision.

AI is no longer an experimental playground for research labs. It’s a competitive edge – one that startups and scaling businesses can’t afford to get wrong. But as entrepreneurs move from hype to implementation, a tough question surfaces: which is better, RAG or Fine Tuning?

This isn’t just a technical dilemma. It’s a strategic one. Choosing between retrieval-augmented generation (RAG) and fine tuning can affect your speed to market, total cost of ownership, and how well your product adapts to new data or industries. It’s not simply about training a model. It’s about building long-term leverage.

And the options aren’t interchangeable. Retrieval-Augmented Generation enables models to access external knowledge in real time. AI model fine tuning, on the other hand, rewires the model itself to specialize in your domain. Both sound powerful. Both claim to “understand your business.” But only one might be the right fit for how you build and scale.

This article breaks down the LLM Fine Tuning vs RAG debate in a way that prioritizes what matters to founders, operators, product leaders, and CXOs – not just ML engineers. Whether you’re working on a legal co-pilot, ecommerce support, or an AI-driven SaaS tool, we’ll explore which approach actually moves the business forward – with less friction, faster ROI, and more flexibility.

Why the Confusion Between Fine Tuning and RAG Exists

If you’re unsure whether to fine-tune a model or build with RAG architecture, you’re not alone. The choice between Fine Tuning and a retrieval-based approach has become one of the most common decision points for businesses adopting large language models.

Much of the chaos stems from how both methods aim to solve the same business problem – helping AI better understand specific domains or datasets – but do so in completely different ways. With Fine-Tuned Models, you directly update the model weights to internalize knowledge. With RAG, the model remains general-purpose but pulls relevant information from external sources like databases, APIs, or internal documents at runtime.

The terminology doesn’t help either. One moment you’re reading about types of RAG and Fine Tuning models, the next you’re knee-deep in vector stores, adapter layers, or parameter-efficient tuning – and none of it explains which route is better for a lean, fast-moving startup.

Then there’s vendor bias. Infrastructure providers push RAG architecture for its modularity, while some AI consultancies still default to fine-tuned models because it’s what their workflows are built around.

The result? Founders and product leads are stuck comparing apples and oranges, with real implications for development timelines, infrastructure costs, team skill requirements, and user experience. And that’s why this decision deserves thoughtful analysis – not just intuition.

What is Fine Tuning?

LLM Fine Tuning is the process of taking a large language model that’s already been trained on vast public datasets and refining it using your own, domain-specific data. You’re not starting from scratch – you’re reshaping a general-purpose model into a business specialist.

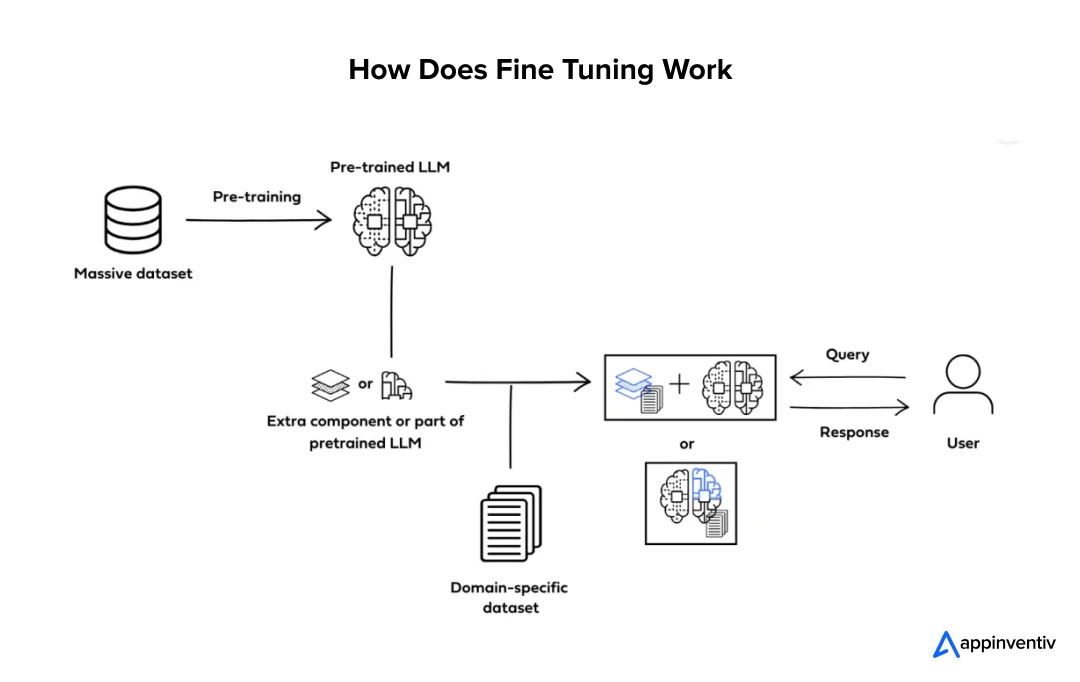

How Does Fine Tuning Work?

To fine-tune a model, you feed it examples from your business – emails, reports, knowledge base articles, chat logs, legal files, anything that reflects your specific tone, terminology, and intent. This data is used to retrain parts of the model, updating its internal weights so that it “remembers” and reasons based on that context.
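To make the data-preparation step concrete, here is a minimal sketch that turns question/answer pairs drawn from internal documents into JSON Lines training examples. The chat-style `messages` layout with `system`/`user`/`assistant` roles mirrors formats used by several hosted fine-tuning services, but treat it as an assumption and check your provider’s documentation; the function names, the system prompt, and the sample data are hypothetical.

```python
import json

# Hypothetical system prompt describing the tone the fine-tuned model should learn.
SYSTEM_PROMPT = "You are Acme's support assistant. Answer concisely and name the policy you rely on."

def to_training_records(pairs, system_prompt=SYSTEM_PROMPT):
    """Convert (question, answer) pairs into chat-style training records.

    The system/user/assistant layout is an assumption modeled on common
    chat fine-tuning formats; adapt it to what your provider expects.
    """
    records = []
    for question, answer in pairs:
        question, answer = question.strip(), answer.strip()
        if not question or not answer:
            continue  # skip incomplete examples instead of training on them
        records.append({
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": question},
                {"role": "assistant", "content": answer},
            ]
        })
    return records

def to_jsonl(records):
    """Serialize records as JSON Lines: one JSON object per line."""
    return "".join(json.dumps(record, ensure_ascii=False) + "\n" for record in records)

if __name__ == "__main__":
    pairs = [
        ("How long is the return window?", "Items can be returned within 30 days (Standard Returns Policy)."),
        ("Do you ship to Canada?", ""),  # no approved answer yet, so it is skipped
    ]
    print(to_jsonl(to_training_records(pairs)), end="")
```

Most of the real effort in fine tuning sits in this step: curating which examples go in and filtering out ones that would teach the model the wrong behavior.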

Thanks to techniques like LoRA (Low-Rank Adaptation) and adapter layers, this process doesn’t always require full model retraining. These methods keep the original weights frozen and train only a small set of added parameters, often just a few million, cutting cost and time while still achieving strong performance.
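The savings from LoRA come down to arithmetic. Instead of updating a full d × k weight matrix W, LoRA freezes W and trains two small matrices, B (d × r) and A (r × k), with rank r much smaller than d and k, so the effective weight becomes W + (α/r)·BA. The plain-Python sketch below counts trainable parameters for one matrix and applies the low-rank update to a vector. The 4096 × 4096 shape, rank 8, and the tiny numeric example are illustrative assumptions, not settings from any particular model.

```python
def full_params(d, k):
    """Trainable parameters when updating the full d x k weight matrix."""
    return d * k

def lora_params(d, k, r):
    """Trainable parameters for LoRA: B is d x r and A is r x k."""
    return d * r + r * k

def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]

def lora_forward(W, A, B, x, alpha, r):
    """Compute W x + (alpha / r) * B (A x) without ever forming B A.

    W stays frozen; only A and B would be updated during fine tuning.
    """
    base = matvec(W, x)
    low_rank = matvec(B, matvec(A, x))
    scale = alpha / r
    return [b + scale * u for b, u in zip(base, low_rank)]

if __name__ == "__main__":
    d = k = 4096  # illustrative layer shape (assumption)
    r = 8         # illustrative LoRA rank (assumption)
    print(full_params(d, k))     # 16777216
    print(lora_params(d, k, r))  # 65536
    # Tiny numeric example: 2x2 frozen weight with a rank-1 adapter.
    W = [[1.0, 0.0], [0.0, 1.0]]
    A = [[1.0, 1.0]]    # r x k = 1 x 2
    B = [[0.5], [0.0]]  # d x r = 2 x 1
    print(lora_forward(W, A, B, [2.0, 3.0], alpha=2.0, r=1))  # [7.0, 3.0]
```

For this one matrix, the adapter trains 65,536 parameters instead of 16,777,216, about 0.4% of the original.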

Features of Fine-Tuned Models

Fine-tuned models embed domain knowledge directly into their weights. That means:

- They generate consistent and context-aware outputs without needing external lookups.

- The models are fast and self-contained – ideal for latency-sensitive environments.

- They maintain a defined voice, tone, or reasoning style aligned with your business.

You don’t need real-time access to a database or search index – the intelligence lives inside the model.

Applications of Fine Tuning

This approach works best when the scope is narrow but the depth of understanding must be high. Common examples include:

- A legal AI assistant trained on specific regional laws and firm-preferred language.

- A support chatbot built on years of internal FAQs, policy docs, and tone guides.

- A healthcare assistant trained to follow localized clinical guidelines.

In all these cases, off-the-shelf models won’t cut it – accuracy, tone, and alignment are non-negotiable.

Fine Tuning isn’t always the cheapest or fastest option, but when done right, it creates long-term defensibility. You end up with a model that doesn’t just answer – it answers like you.

What is Retrieval-Augmented Generation?

Unlike Fine Tuning, where the model “learns” your business data, Retrieval-Augmented Generation takes a different path. It doesn’t store knowledge inside the model. Instead, it connects a pre-trained language model to an external knowledge source – like a vector database, document repository, or API – and retrieves relevant content in real-time to answer questions or generate outputs.

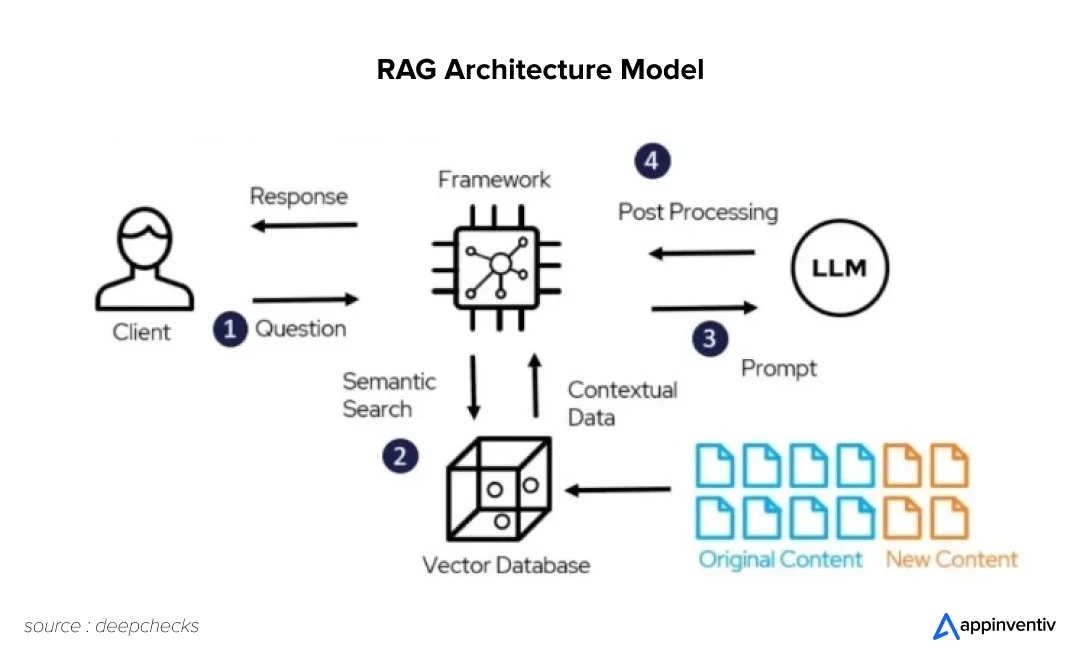

How Does RAG Work?

The RAG architecture typically involves three layers:

- A retriever that searches your external content (using embeddings and vector similarity).

- A reader – usually a general-purpose LLM – that takes the retrieved content as context.

- A generation step, typically performed by that same LLM, that combines the query and retrieved data to produce a grounded response.

This means the model doesn’t rely only on what it memorized during training – it refers to your documents, structured data, or APIs on demand. Updating its knowledge doesn’t require retraining; you simply change the underlying data source.
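Here is a minimal, dependency-free sketch of that retrieve-then-generate flow. To stay self-contained, it swaps learned embeddings for simple bag-of-words vectors scored with cosine similarity; a production retriever would use an embedding model and a vector database. The document IDs and the `retrieve` and `build_prompt` helpers are hypothetical, and the final LLM call is left out because it depends on your provider.

```python
import math
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query, docs, k=2):
    """Return up to k (doc_id, score) pairs with a non-zero score, best first.

    Bag-of-words vectors stand in for learned embeddings here.
    """
    q = Counter(tokenize(query))
    scored = [(doc_id, cosine(q, Counter(tokenize(text)))) for doc_id, text in docs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [pair for pair in scored[:k] if pair[1] > 0]

def build_prompt(query, docs, hits):
    """Ground the model: put retrieved passages, tagged by source ID, ahead of the question."""
    context = "\n".join(f"[{doc_id}] {docs[doc_id]}" for doc_id, _ in hits)
    return (
        "Answer using only the sources below and cite them by ID.\n"
        f"{context}\n\nQuestion: {query}"
    )

if __name__ == "__main__":
    docs = {
        "returns-policy": "Items can be returned within 30 days of delivery.",
        "shipping-faq": "Standard shipping takes 3 to 5 business days.",
        "warranty": "Electronics carry a one year limited warranty.",
    }
    query = "How many days after delivery can items be returned?"
    hits = retrieve(query, docs)
    print(hits[0][0])  # returns-policy
    print(build_prompt(query, docs, hits))
```

Tagging each passage with its source ID is also what makes citations possible: the model can point back to the exact document it used.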

Features of RAG

What makes RAG powerful is its flexibility. It enables:

- Live access to large, evolving datasets

- Factual grounding based on trusted sources

- Reduced risk of hallucinations when retrieval is accurate

- Easier updates and scaling, since the base model remains untouched

You’re essentially creating a retrieval pipeline that feeds the model what it needs, when it needs it.

Applications of RAG

RAG is ideal for dynamic business environments where information changes frequently or is too large to embed directly into a model. Real-world applications of RAG include:

- AI customer service agents that reference up-to-date support articles

- Financial advisors pulling from live market data or client portfolios

- Internal tools that answer employee questions using HR docs or knowledge bases

- Compliance assistants that pull from evolving regulatory documents

It’s also the go-to architecture for products that require transparency – you can cite sources and show exactly where the answer came from.

LLM Fine Tuning vs RAG: A Comparison That Matters to Founders and CXOs

You’re not just choosing a technology – you’re choosing how your product learns, evolves, and scales. In the retrieval augmented generation vs fine tuning debate, the right choice depends on what your business values most today – and where it’s heading next.

Here’s how RAG and Fine Tuning compare across the dimensions that matter most to entrepreneurs:

| Decision Factor | Retrieval-Augmented Generation | Fine Tuning |
|---|---|---|
| Speed to Deployment | Fast to set up with minimal model changes | Slower due to data prep, training, and evaluation |
| Cost of Implementation | Lower upfront cost; higher retrieval/storage costs over time | Higher initial cost; cheaper inference at scale |
| Customization & Accuracy | Flexible but depends on quality of retrieval and content | Deep alignment with tone, logic, and business context |
| Maintenance & Updates | Easily updated by modifying knowledge base | Requires retraining for content updates |
| Scalability | Modular and flexible across domains | Scales well for narrow, high-precision use cases |
| Security & Data Sensitivity | Needs runtime access to external sources; higher exposure risk | Self-contained; safer for regulated or sensitive data environments |
| Best For | MVPs, dynamic content, support tools, broad use cases | Regulated apps, legal/health AI, internal assistants, high-accuracy tasks |

Speed to Deployment

If time-to-market is a priority, RAG architecture usually wins. Since you’re not retraining the model, you can plug in your knowledge base, set up retrieval, and launch within days or weeks. This makes RAG ideal for MVPs or teams iterating fast.

In contrast, fine-tuned models require more prep – dataset curation, training, evaluation, and infrastructure setup. But once deployed, they’re optimized for your use case from the inside out.

Winner for Speed: RAG

Tradeoff: RAG setups can be complex under the hood, especially when managing large or fragmented data sources.

Cost of Implementation

The cost comparison between RAG and Fine Tuning depends on scale. RAG typically has lower upfront costs since you’re using an existing model. But as your knowledge base grows, you’ll incur ongoing retrieval and hosting costs – especially if latency is critical.

Fine Tuning, on the other hand, requires more compute upfront, but inference can be cheaper over time: there is no retrieval call to pay for, and prompts don’t need to carry long retrieved passages. If you’re serving millions of queries a month, this difference adds up fast.
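The short-term versus long-term cost argument reduces to a break-even calculation: fine tuning pays off once its per-query savings have covered its extra upfront cost. The sketch below computes that point with exact fractions to avoid floating-point rounding. Every number in it is a hypothetical placeholder, not a benchmark, and the linear cost model deliberately ignores retraining cycles and index growth.

```python
import math
from fractions import Fraction

def _exact(x):
    """Convert a number to an exact Fraction via its decimal string form."""
    return Fraction(str(x))

def break_even_queries(rag_upfront, rag_per_query, ft_upfront, ft_per_query):
    """Smallest query count at which fine tuning's total cost is at or below RAG's.

    Total cost is modeled as upfront + per_query * queries.
    Returns None if fine tuning has no per-query advantage.
    """
    extra_upfront = _exact(ft_upfront) - _exact(rag_upfront)
    savings_per_query = _exact(rag_per_query) - _exact(ft_per_query)
    if savings_per_query <= 0:
        return None  # fine tuning never becomes cheaper as volume grows
    if extra_upfront <= 0:
        return 0  # fine tuning is no more expensive upfront, so it wins immediately
    return math.ceil(extra_upfront / savings_per_query)

if __name__ == "__main__":
    # Hypothetical figures: $5k to set up RAG at $0.006/query vs.
    # $25k to fine-tune at $0.002/query.
    queries = break_even_queries(5000, 0.006, 25000, 0.002)
    print(queries)              # 5000000
    print(queries / 1_000_000)  # 5.0 months at a hypothetical 1M queries/month
```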

Winner for Short-Term Cost: RAG

Winner for Long-Term Efficiency: Fine Tuning

Customization and Accuracy

If your product relies on precision, tone, or regulatory compliance, fine-tuned models often outperform. They internalize your data, align with your brand voice, and reduce reliance on retrieval quality.

RAG offers flexibility, but accuracy depends on how well your retriever finds the right information – and how clearly that information is written. Garbage in, garbage retrieved.

Winner for Deep Customization: Fine Tuning

RAG is better for breadth, not depth

Maintenance and Scalability

Here’s where the comparison flips. Updating a RAG system is as simple as adding new documents to your knowledge base – no retraining needed. It’s modular and ideal for growing or evolving content.
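In code, “adding new documents” is just a write to the index. The sketch below is a hypothetical in-memory knowledge base (`KnowledgeBase`, `upsert`, `remove`, and `search` are illustrative names) in which an update becomes visible to the very next query without touching the model. It scores by naive keyword overlap; a real system would re-embed the changed document and upsert it into its vector store.

```python
import re

def _terms(text):
    """Set of lowercase word tokens used for naive keyword matching."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

class KnowledgeBase:
    """Hypothetical in-memory document store used as a RAG retrieval source."""

    def __init__(self):
        self._docs = {}   # doc_id -> text
        self._terms = {}  # doc_id -> set of indexed terms

    def upsert(self, doc_id, text):
        """Add or replace a document; only this entry is re-indexed."""
        self._docs[doc_id] = text
        self._terms[doc_id] = _terms(text)

    def remove(self, doc_id):
        """Retire a document so it can no longer be retrieved."""
        self._docs.pop(doc_id, None)
        self._terms.pop(doc_id, None)

    def search(self, query, k=1):
        """Rank documents by how many query terms they share."""
        q = _terms(query)
        ranked = sorted(self._docs, key=lambda d: len(q & self._terms[d]), reverse=True)
        return [(d, self._docs[d]) for d in ranked[:k] if q & self._terms[d]]

if __name__ == "__main__":
    kb = KnowledgeBase()
    kb.upsert("promo", "Spring sale: 10 percent off all shoes.")
    print(kb.search("current shoes sale"))
    # Next week's promotion replaces the old one; the model itself is untouched.
    kb.upsert("promo", "Summer sale: 20 percent off all sandals.")
    print(kb.search("current sandals sale"))
```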

Fine-tuned models are harder to update. Even minor knowledge changes might require partial retraining or multiple model versions.

Winner for Ongoing Maintenance: RAG

Winner for Stability at Scale: Fine Tuning (once mature)

Security and Data Sensitivity

With Fine Tuning, your data lives inside the model and never has to leave the system at inference time – a plus for highly sensitive environments like healthcare, legal, or defense.

RAG depends on external access during each interaction, which can create vulnerabilities if not managed carefully. It’s secure when properly architected, but the attack surface is bigger.

Winner for Data Privacy: Fine Tuning

RAG needs more attention to access control and logging
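One common way to shrink that attack surface is to enforce permissions inside the retriever, so documents a user may not see never reach the prompt, and to log every access. The sketch below assumes a simple role-based model; the `Doc` structure, the role names, and `retrieve_for_user` are hypothetical, and matching is naive keyword overlap to keep it short.

```python
import logging
import re
from dataclasses import dataclass

audit_log = logging.getLogger("rag.audit")

def _terms(text):
    """Set of lowercase word tokens used for naive keyword matching."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

@dataclass(frozen=True)
class Doc:
    doc_id: str
    text: str
    allowed_roles: frozenset  # roles permitted to see this document

def retrieve_for_user(query, docs, user_id, roles):
    """Apply permissions before matching, then log which documents were exposed."""
    visible = [d for d in docs if d.allowed_roles & set(roles)]
    q = _terms(query)
    hits = [d for d in visible if q & _terms(d.text)]
    audit_log.info("user=%s query=%r docs=%s", user_id, query, [d.doc_id for d in hits])
    return hits

if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    docs = [
        Doc("handbook", "Vacation policy: 25 days per year.", frozenset({"employee", "hr"})),
        Doc("payroll", "Salary bands and vacation payout rules.", frozenset({"hr"})),
    ]
    print([d.doc_id for d in retrieve_for_user("vacation", docs, "u-17", {"employee"})])  # ['handbook']
    print([d.doc_id for d in retrieve_for_user("vacation", docs, "u-02", {"hr"})])        # ['handbook', 'payroll']
```

Filtering before retrieval, rather than after generation, matters here: once restricted text is in the prompt, the model may paraphrase it regardless of later checks.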

So, Which is Better?

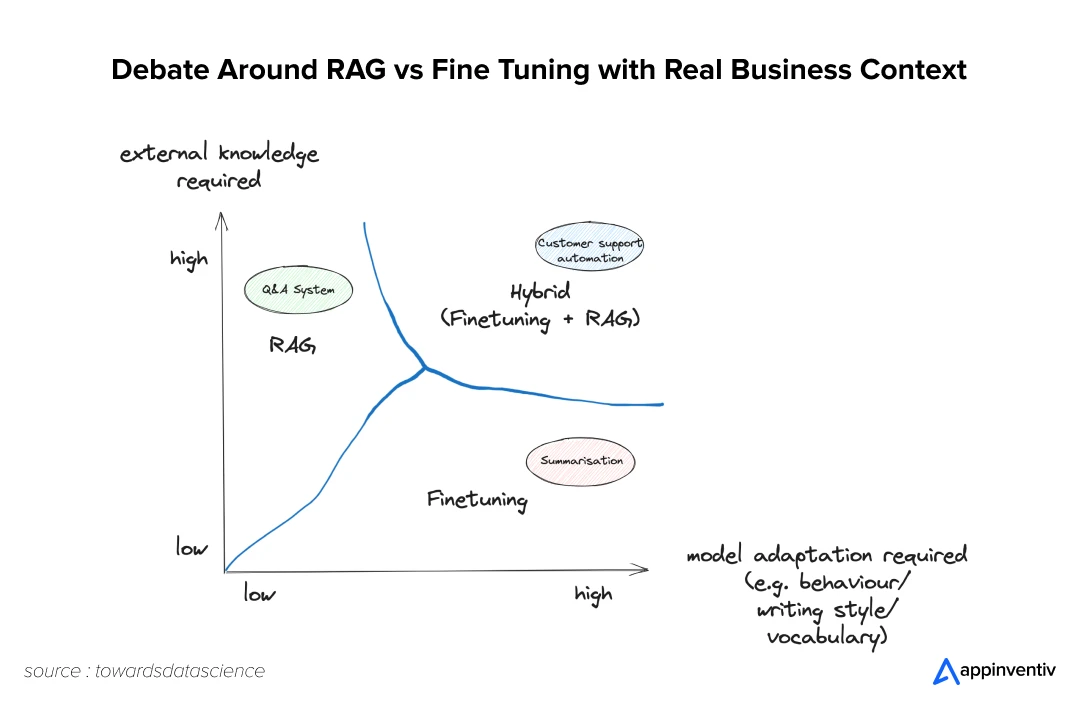

There is no absolute winner in the AI RAG vs fine tuning debate. Both offer powerful benefits – and real tradeoffs. Benefits of RAG and Fine-Tuning for businesses depend entirely on the use case, business model, and internal resources.

- RAG gets you to market fast, scales flexibly, and works great for broad or ever-changing content.

- Fine Tuning creates deeply customized, stable models that serve with confidence and brand alignment.

Your decision doesn’t just affect performance – it shapes your product’s architecture, its user experience, and how it learns, adapts, and grows with your users. Choose with intention.

Real-World Scenarios: Which Approach Wins Where?

The RAG vs Fine Tuning debate makes the most sense when grounded in real business contexts. Below are representative scenarios where founders and C-suite executives face different data realities, compliance risks, and time-to-market pressures – and why one approach edges out the other.

Scenario 1: A Legal Tech Startup Navigating Complex Regulations

You’re building an AI assistant for contract reviews across multiple jurisdictions. The language is dense, clients expect highly specific outputs, and even minor errors could create liability. The AI must sound like a legal associate, not a casual FAQ bot.

Here, Fine Tuning is the smarter bet. You need the model to “think like a lawyer,” internalize localized legal logic, and maintain a precise, formal tone. The knowledge is stable – you’re not rewriting law every week – so retraining is infrequent and worth the upfront effort.

Scenario 2: An Ecommerce Platform Supporting a Shifting Product Catalog

You’ve got thousands of SKUs, weekly promotions, and a support team fielding everything from return policy queries to payment issues. Information changes constantly – and your chatbot needs to reflect that in real time.

This is RAG territory. Instead of hardcoding knowledge into the model, you let the AI pull the latest policies or product details from your content store. No retraining needed when a new product line launches – just update the backend docs and you’re live. Flexibility > internalization here.

Scenario 3: A Healthcare SaaS Tool Guiding Clinicians in Real-Time

Your tool helps clinicians follow hospital-specific treatment protocols. Errors aren’t just bad UX – they can lead to real harm. You’re dealing with compliance, consistency, and heavy regulation.

Fine Tuning (or a fine-tuned hybrid) makes more sense. You don’t want retrieval failures mid-consultation. You want a self-contained model that’s been trained and validated on curated clinical procedures. RAG might be layered in for access to non-sensitive research, but your core logic needs to be locked in.

Scenario 4: An Internal Ops Assistant for Supply Chain Troubleshooting

You’re building a co-pilot for operations managers at a manufacturing firm. It needs to provide recommendations based on highly specific SOPs, escalation protocols, and machine behavior logs – none of which are public or easily structured.

This is a narrow, high-stakes, domain-specific workflow – the kind where Fine Tuning quietly outperforms. A fine-tuned model can internalize complex if-then logic and prioritize precision over flexibility. For tools meant to work with minimal oversight, aligned with years of internal data, baked-in intelligence pays off.

A Quick Decision Guide

| If your situation involves… | Lean Toward |
|---|---|
| Fast-changing content or product catalog | RAG |
| Stable, regulated knowledge (e.g., legal, medical) | Fine Tuning |
| Need for live document retrieval (e.g., internal wikis) | RAG |
| Strong control over tone, brand, and accuracy | Fine Tuning |
| Limited time or budget to retrain models | RAG |
| Data sensitivity and offline inference requirements | Fine Tuning |
| Cross-domain flexibility (supporting many topics) | RAG |
| Narrow, high-stakes, domain-specific workflows | Fine Tuning |

Every domain brings its own tradeoffs. If you’re building something real, the decision between RAG and Fine-Tuning isn’t theoretical – it’s foundational.

How Appinventiv Uses a Hybrid Approach

In reality, most high-impact AI products don’t live at the extremes of RAG or Fine Tuning. They live in the middle – where precision and flexibility coexist, and where the real-world demands of scale, speed, and business logic don’t fit neatly into one architecture.

That’s where a hybrid approach, combining RAG and Fine Tuning, comes in.

At Appinventiv, we rarely see one-size-fits-all AI tech stacks working well across the entire product lifecycle. When building AI copilots, industry-specific assistants, or decision-making tools, we often combine fine-tuned models with retrieval-augmented generation pipelines – not as a compromise, but as a strategic advantage.

How the Hybrid Model Works in Practice

Let’s say we’re building an AI assistant for a logistics company:

- We start by Fine Tuning a base model on the company’s internal SOPs, escalation protocols, and ticket patterns – giving it the “muscle memory” to understand operations without external help.

- Then we layer on RAG, connecting it to a real-time knowledge base of live inventory, transport statuses, and third-party API data. The assistant can now respond with up-to-date answers and deeply contextual reasoning.

This hybrid model allows for:

- Real-time flexibility (via RAG) for dynamic data

- Deep domain reasoning (via Fine Tuning) for structured workflows

- Fewer hallucinations, as grounded facts meet trained behavior

- Faster scaling, since not all knowledge needs to be retrained into the model
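The logistics example can be sketched as a thin orchestration layer: live facts come from a retrieval step, and the fine-tuned model supplies the reasoning and tone. In the sketch below, `fine_tuned_model` is a stub standing in for a deployed fine-tuned endpoint, and `LIVE_STATUS` stands in for a real-time API or knowledge base; both, along with the shipment ID format, are hypothetical.

```python
import re

# Hypothetical live data source (e.g., a transport-status API), keyed by shipment ID.
LIVE_STATUS = {
    "SHP-1042": "delayed at customs, new ETA 2 days",
    "SHP-2210": "out for delivery",
}

def lookup_live_facts(question):
    """Retrieval step: pull real-time status for any shipment IDs mentioned."""
    ids = re.findall(r"SHP-\d+", question)
    return {i: LIVE_STATUS[i] for i in ids if i in LIVE_STATUS}

def fine_tuned_model(prompt):
    """Stub for a model already fine-tuned on the company's SOPs.

    A real deployment would call the fine-tuned model's inference endpoint here.
    """
    return f"[fine-tuned model response to]\n{prompt}"

def answer(question):
    """Hybrid flow: inject retrieved live facts, let the fine-tuned model reason over them."""
    facts = lookup_live_facts(question)
    if facts:
        context = "\n".join(f"- {k}: {v}" for k, v in facts.items())
        prompt = f"Live data:\n{context}\n\nQuestion: {question}"
    else:
        # Nothing volatile to look up: rely on what the model learned during fine tuning.
        prompt = f"Question: {question}"
    return fine_tuned_model(prompt)

if __name__ == "__main__":
    print(answer("Where is SHP-1042 and what should I tell the customer?"))
    print(answer("What is the escalation SOP for a damaged pallet?"))
```

The design choice worth noting is that volatile data is injected at request time, so it never has to be retrained into the model, while procedural knowledge lives in the weights.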

Why it Works for Our Clients

When delivering generative AI development services for industries like finance, logistics, healthcare, and ecommerce, we often face a balancing act: systems need to be stable and reliable, yet flexible enough to adapt as the business evolves. That is where hybrid architectures prove valuable.

Instead of boxing clients into one extreme – a highly specialized but rigid model, or a lightweight system that can’t go deep – we build solutions that stay solid where consistency matters and adaptable where change is constant.

So when the question is asked – AI RAG vs fine tuning: which one should I choose? – our real answer is often:

“Let’s not just pick one. Let’s build both – where each makes sense.”

For instance, according to a BCG study, a hybrid model combining RAG and Fine Tuning significantly enhanced the performance of their enterprise text-to-SQL solution. RAG was used to inject real-time domain context, while Fine Tuning helped the model internalize user-specific query logic. The result? Faster deployment with greater accuracy—proving that smartly combined architectures can outperform either method in isolation.

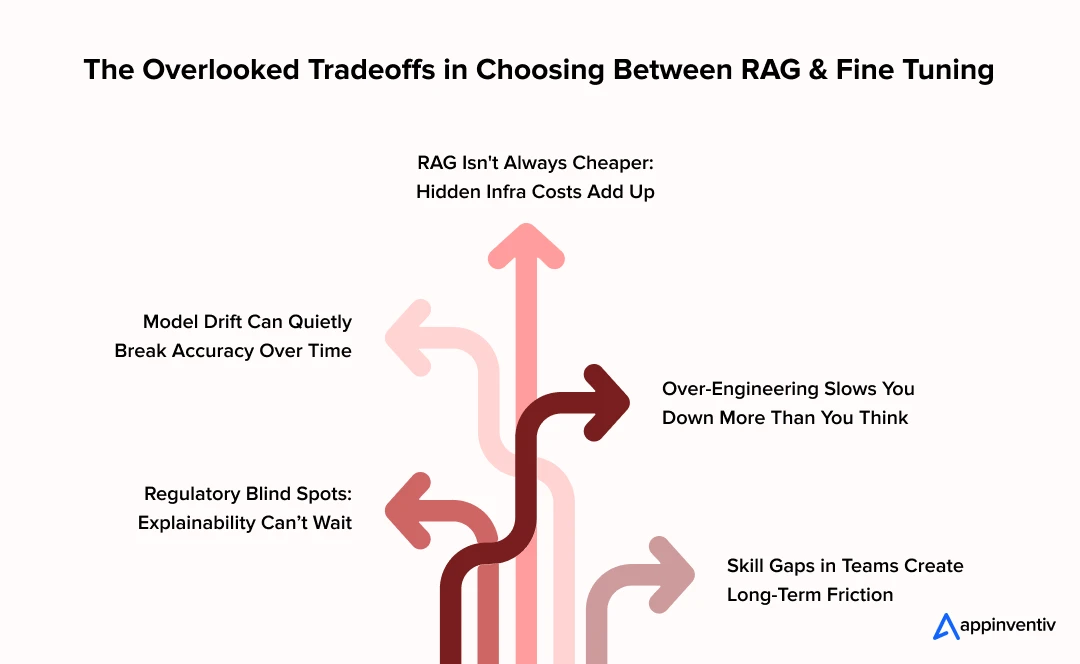

The Hidden Costs and Benefits You Shouldn’t Overlook

The AI RAG vs fine tuning decision often starts with excitement – launch speed, clever outputs, smooth demos. But what matters most doesn’t usually show up until later: when the user base scales, compliance questions hit, or the product starts behaving in ways you didn’t expect.

Founders and product leads tend to underestimate the long-term cost of maintenance and overestimate how “set and forget” AI can be. Here’s what early-stage planning often misses, and where unexpected challenges in adopting RAG or Fine Tuning tend to surface:

1. Infrastructure Demands Don’t Stay Flat

Fine Tuning might seem expensive upfront – and it can be – but RAG isn’t free either. Hosting a vector store, maintaining sync with evolving content, and optimizing retriever latency all require real-time infrastructure that scales with usage. In some cases, RAG systems quietly accumulate more ops overhead than a well-trained fine-tuned model.

Founder and CXO’s trap: Thinking RAG is always “cheaper” because you don’t retrain. It’s often not.

2. Model Drift Is Real – and Quiet

With fine-tuned models, model drift can creep in as business processes change. What worked great six months ago may no longer apply to how your company handles escalations or applies compliance logic. But you won’t always notice it right away – until errors pile up or a user flags an outdated response.

With RAG, the risk shifts: the model may not drift, but your knowledge base can – broken links, outdated pages, or new policies not yet uploaded become silent points of failure.

Founder and CXO’s trap: Assuming “once tuned” means always correct. Or assuming “live retrieval” means always current.
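Both kinds of drift can be caught with routine checks rather than user complaints. The sketch below shows two, under stated assumptions: a regression run of the model against a hypothetical “golden set” of questions paired with phrases the answer must contain, and a freshness audit that flags knowledge-base documents not reviewed within a chosen window. The 90-day window, the stub model, and all names are illustrative, not a standard.

```python
from datetime import date, timedelta

def golden_set_pass_rate(model, golden_set):
    """Share of golden questions whose answer still contains the required phrase.

    `model` is any callable str -> str; `golden_set` is a list of
    (question, required_phrase) pairs curated by domain experts.
    """
    if not golden_set:
        return 1.0
    passed = sum(1 for q, phrase in golden_set if phrase.lower() in model(q).lower())
    return passed / len(golden_set)

def stale_documents(last_reviewed, today, max_age_days=90):
    """Return IDs of documents whose last review is older than the allowed window."""
    cutoff = today - timedelta(days=max_age_days)
    return sorted(doc_id for doc_id, reviewed in last_reviewed.items() if reviewed < cutoff)

def outdated_model(question):
    """Stub model whose answers still reflect an old escalation rule."""
    return "Escalate to the regional manager within 24 hours."

if __name__ == "__main__":
    golden = [
        ("Who handles a tier-2 escalation?", "regional manager"),
        ("What is the escalation deadline?", "within 4 hours"),  # the current rule
    ]
    print(golden_set_pass_rate(outdated_model, golden))  # 0.5
    reviewed = {"escalation-sop": date(2024, 1, 10), "returns-policy": date(2024, 5, 2)}
    print(stale_documents(reviewed, today=date(2024, 6, 1)))  # ['escalation-sop']
```

Running the golden set after every business-process change, and the freshness audit on a schedule, turns “quiet” drift into a visible number.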

3. Over-Engineering for Rare Edge Cases

Some startups build massive RAG stacks when 90% of their content could’ve been baked into a fine-tuned model. Others invest weeks in Fine Tuning pipelines when a simple retrieval layer could’ve handled their MVP needs. Over-engineering isn’t just costly – it slows product delivery and clouds your roadmap.

Founder and CXO’s trap: Solving tomorrow’s scaling problems before validating today’s user needs.

4. Regulatory Risks and Explainability

In industries like healthcare, finance, or law, regulatory compliance isn’t optional – and explainability matters. Fine-tuned models are harder to audit. You can’t easily trace why they gave a particular answer unless you deeply version and log them.

With RAG, you can show sources. But that transparency is only as good as your retrieval accuracy and document hygiene.

Founder and CXO’s trap: Thinking explainability is a “later problem.” It’s not – especially if you’re planning to raise or sell.
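Whichever architecture you choose, an audit trail starts with recording enough to reconstruct each answer later: which model version produced it, what it was asked, and, for RAG, which sources were retrieved. The sketch below builds one such JSON record. The field names and the version tag are hypothetical, and hashing the prompt and response is one option when you must avoid storing raw sensitive text.

```python
import hashlib
import json
from datetime import datetime, timezone

def audit_record(model_version, prompt, response, source_ids=(), store_raw=False):
    """Build a JSON-serializable audit entry for one model response."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "response_sha256": hashlib.sha256(response.encode("utf-8")).hexdigest(),
        "sources": list(source_ids),  # empty for a purely fine-tuned answer
    }
    if store_raw:
        # Only keep raw text where your data-retention policy allows it.
        record["prompt"] = prompt
        record["response"] = response
    return record

if __name__ == "__main__":
    entry = audit_record(
        model_version="contracts-ft-v3",  # hypothetical version tag
        prompt="Summarize the termination clause.",
        response="Either party may terminate with 30 days' notice [contract-template-7].",
        source_ids=["contract-template-7"],
    )
    print(json.dumps(entry, indent=2))
```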

5. Team Skill Gaps Add Hidden Friction

RAG requires a blend of MLOps, search engineering, and API orchestration. Fine Tuning demands strong model training pipelines and evaluation protocols. Most product teams don’t have both. Trying to force one approach without the right skill set can lead to underperforming models and team burnout.

Founder and CXO’s trap: Thinking any AI/ML engineer can implement either architecture well.

It’s easy to fall for what works in a demo. It’s much harder to design for what works six months after launch – when your knowledge changes, your team grows, or your legal team asks for an audit trail.

That’s why we always advise founders and CXOs to weigh not just what works, but what stays manageable, compliant, and adaptable at scale.

Making the Decision – A Practical Guide for Entrepreneurs

Choosing between RAG vs Fine Tuning isn’t just a technical decision – it’s a business decision. One that affects your launch speed, infrastructure costs, user trust, and how fast you can evolve the product once it’s in the wild.

To keep things grounded, here’s a practical framework we use with clients at Appinventiv to help them commit with clarity – not guesswork.

Ask These Before You Build Anything

1. How stable is your knowledge base?

- If your content changes frequently, RAG gives you agility.

- If your knowledge is stable and high-risk, Fine Tuning is safer.

2. Do you need real-time accuracy or deep specialization?

- Real-time product/service info → RAG

- Narrow expertise or branded tone → Fine Tuning

3. How sensitive is your data?

- Does inference need to happen offline or in a secure environment? → Fine Tuning

- Can queries safely hit external systems? → RAG

4. How fast do you need to go live?

- Weeks or less → RAG

- Months for maturity and alignment → Fine Tuning

5. Does your team have MLOps + Retrieval + LLM training capabilities?

- Missing one of those? A hybrid model lets you phase complexity in gradually.
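For teams that like to make the checklist explicit, the five questions can be encoded as a simple scoring helper. This is a hypothetical heuristic, not a validated model: each of the first four answers nudges the score toward RAG (positive) or Fine Tuning (negative), and a tied score or a missing skill set points to the hybrid route.

```python
from dataclasses import dataclass

@dataclass
class Situation:
    knowledge_changes_often: bool            # Q1: how stable is your knowledge base?
    needs_deep_specialization: bool          # Q2: narrow expertise or branded tone?
    needs_offline_or_secure_inference: bool  # Q3: how sensitive is your data?
    must_launch_in_weeks: bool               # Q4: how fast do you need to go live?
    has_mlops_retrieval_and_training: bool   # Q5: does the team have all three skill sets?

def recommend(s):
    """Hypothetical heuristic: positive score leans RAG, negative leans Fine Tuning."""
    score = 0
    score += 1 if s.knowledge_changes_often else -1
    score += -1 if s.needs_deep_specialization else 1
    score += -1 if s.needs_offline_or_secure_inference else 1
    score += 1 if s.must_launch_in_weeks else -1
    if not s.has_mlops_retrieval_and_training or score == 0:
        return "Hybrid (phase complexity in gradually)"
    return "RAG" if score > 0 else "Fine Tuning"

if __name__ == "__main__":
    ecommerce_support = Situation(True, False, False, True, True)
    legal_assistant = Situation(False, True, True, False, True)
    print(recommend(ecommerce_support))  # RAG
    print(recommend(legal_assistant))    # Fine Tuning
```

Treat the output as a conversation starter; the weights are equal only for simplicity, and most real decisions will weigh compliance far more heavily than launch speed.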

Don’t Forget the Hybrid Option

The smartest teams don’t treat RAG and Fine Tuning as opposing camps – they combine them strategically. For example:

- Light Fine Tuning + RAG: Train your model on brand tone or domain-specific phrasing, then let it pull fresh data from your backend or content store.

- Fine-tuned core + Retrieval layer for volatile data: Use a fine-tuned model for reasoning, decision trees, and tone – and augment it with retrieval for dynamic product specs, inventory, or regulations.

- RAG MVP + fine-tuned V2: Start fast with RAG to validate product–market fit. Then, fine-tune for scale, consistency, or reduced infra cost once patterns stabilize.

It’s Not About the Tech – It’s About Fit

It’s easy to get caught up in the architecture debates. LLM Fine Tuning vs RAG, adapters vs embeddings, latency vs cost curves – all of that matters. But not as much as this:

The right AI model is the one that fits your product, your team, and where you are right now.

There’s no universally better choice – just better-aligned decisions. A rapidly growing startup with shifting documentation needs a different setup than a healthcare SaaS with compliance obligations. A team with limited ML talent shouldn’t force Fine Tuning from day one, just as a company with sensitive internal protocols shouldn’t rely entirely on dynamic retrieval.

What matters is strategic fit – not technical perfection.

At Appinventiv, we’ve seen the best outcomes come from teams that don’t rush into implementation, but instead zoom out and ask:

- What’s changing fast, and what’s not?

- What needs to be accurate, and what just needs to be helpful?

- What’s good enough for now – and what has to scale later?

From RAG-first MVPs to fine-tuned enterprise assistants, we’ve helped businesses choose the right starting point – and build toward the architecture they’ll eventually need.

Build Smart. Scale Fast. Evolve Without Rebuilding.

Experimentation is critical – but so is intention. If you are serious about using AI to unlock new product value, we can help you architect not just for performance, but for adaptability, reliability, and long-term success.

So let’s build something that lasts. Get in touch with our AI team.

FAQs

Q. RAG vs Fine Tuning – what’s the real difference?

A. Think of it like this – RAG is like having a smart assistant that checks your company’s files every time someone asks a question. It fetches relevant info on the fly and uses that to respond.

Fine Tuning, on the other hand, is like training that assistant in advance. You feed it your company’s knowledge, teach it how you talk, how you think – and it learns to respond from memory. No fetching needed.

Both are powerful – the real difference is whether you want the model to know the answer or go find the answer.

Q. Which is better for enterprise use: Fine Tuning or retrieval-based models?

A. Honestly, it depends on what you’re building – and where you’re at as a company.

If your product deals with regulated, high-risk content – think legal, healthcare, finance – Fine Tuning gives you more control, consistency, and compliance.

But if you’re handling lots of content that changes often (like product info, policies, or user manuals), RAG is easier to maintain and much faster to launch.

And truth be told, a hybrid approach – mixing both – is what many growing enterprises end up using.

Q. Can I use a RAG model inside a mobile app?

A. Yes, but with a little nuance.

RAG needs to access external content – usually stored on a server. So while the app interface is mobile, the retrieval process typically runs on the backend. It fetches the content, feeds it to the model, and sends back the answer.

If you want everything to run on-device (like in offline mode), Fine Tuning tends to be the better route since the model carries the knowledge internally.

Q. How much does it cost to fine-tune a large language model for business use?

A. Costs can vary – a lot. It really comes down to:

- How much data you’re training on

- What size model you’re working with

- And whether you’re building in-house or with a partner

For lighter use cases, with good prep and a clear scope, you might get started for $10k–$30k. Larger enterprise Fine Tuning efforts – especially those involving multiple iterations, evaluations, and compliance – can climb into six figures.

At Appinventiv, we help founders and CXOs scope this early so you don’t burn the budget before proving ROI.

Q. Is RAG a good fit for niche industries like healthcare or finance?

A. It can be – but with guardrails.

If your app needs to surface fast-changing information (like medical guidelines or tax policy updates), RAG is incredibly useful. But in regulated industries, how you manage data retrieval matters. Access control, audit trails, and response explainability become critical.

In high-stakes domains, we often pair RAG with a fine-tuned core – giving you flexibility for updates and confidence where precision is non-negotiable.