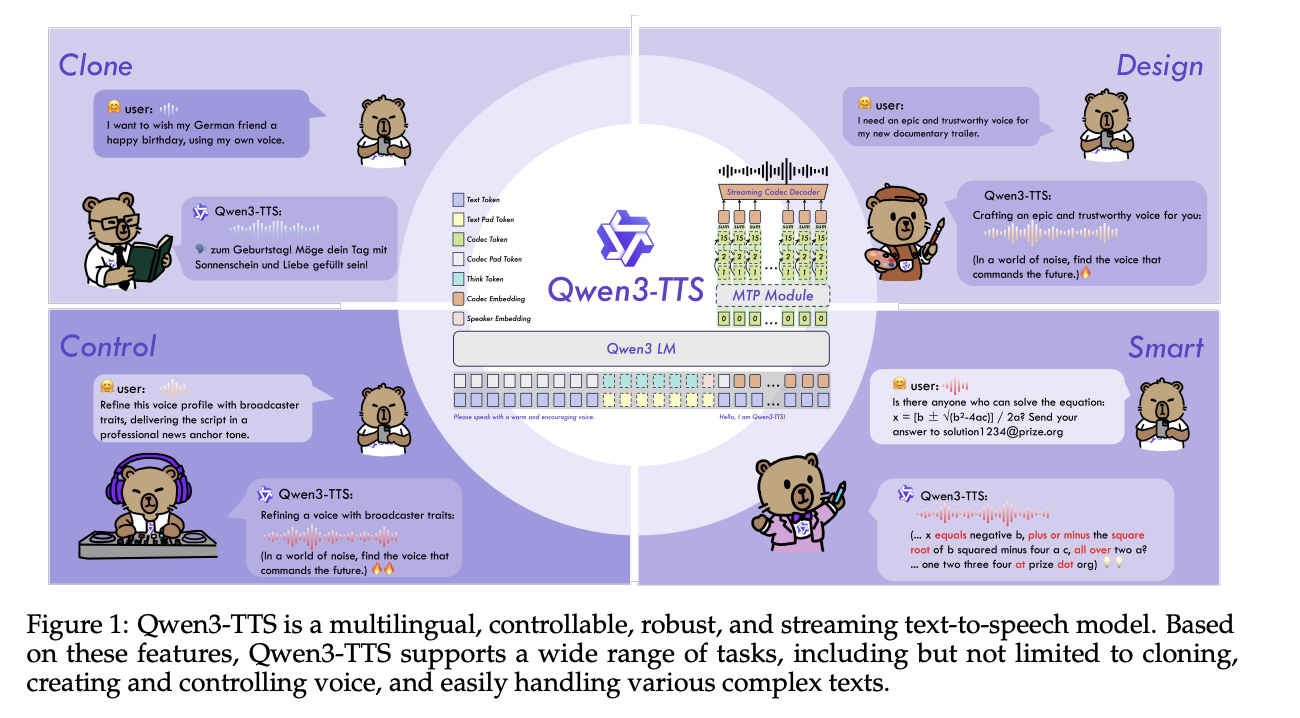

Alibaba Cloud’s Qwen team has open-sourced Qwen3-TTS, a family of multilingual text-to-speech models that target three core tasks in one stack: voice cloning, voice design, and high-quality speech generation.

Model family and capabilities

Qwen3-TTS uses a 12Hz speech tokenizer and 2 language model sizes, 0.6B and 1.7B, packaged for 3 main tasks. The open release exposes 5 models: Qwen3-TTS-12Hz-0.6B-Base and Qwen3-TTS-12Hz-1.7B-Base for voice cloning and generic TTS; Qwen3-TTS-12Hz-0.6B-CustomVoice and Qwen3-TTS-12Hz-1.7B-CustomVoice for promptable preset speakers; and Qwen3-TTS-12Hz-1.7B-VoiceDesign for free-form voice creation from natural language descriptions. The Qwen3-TTS-Tokenizer-12Hz codec is released alongside them.

All models support 10 languages: Chinese, English, Japanese, Korean, German, French, Russian, Portuguese, Spanish, and Italian. The CustomVoice variants ship with 9 curated timbres, such as Vivian, a bright young Chinese female voice; Ryan, a dynamic English male voice; and Ono_Anna, a playful Japanese female voice. Each comes with a short description that encodes timbre and speaking style.

The VoiceDesign model maps text instructions directly to new voices, for example ‘speak in a nervous teenage male voice with rising intonation’. A designed voice can then be carried over to the Base model by first generating a short reference clip and reusing it via create_voice_clone_prompt.
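The two-step flow described above (design a voice once, then reuse it for cloning) can be sketched as follows. This is a minimal illustration, not the library's API: the stand-in classes and the `design_voice` and `speak` methods are hypothetical placeholders so the example runs without model weights. Only the name `create_voice_clone_prompt` comes from the article, and its real signature may differ.

```python
from dataclasses import dataclass


@dataclass
class ClonePrompt:
    """Reusable speaker reference: a short clip plus its transcript."""
    ref_audio: list
    ref_text: str


class FakeVoiceDesignModel:
    """Hypothetical stand-in for the VoiceDesign model."""

    def design_voice(self, text, instruct):
        # A real model would synthesize `text` in the voice that
        # `instruct` describes. Here we return placeholder samples.
        return [0.0] * 16


class FakeBaseModel:
    """Hypothetical stand-in for the Base (cloning) model."""

    def create_voice_clone_prompt(self, ref_audio, ref_text):
        # Name taken from the article; the signature is an assumption.
        return ClonePrompt(ref_audio=ref_audio, ref_text=ref_text)

    def speak(self, text, prompt):
        # A real model would condition generation on `prompt`.
        return {"text": text, "voice_from": prompt.ref_text}


def design_then_clone(design_model, base_model, instruct, ref_text, lines):
    """Design a voice once, then reuse it for every line of text."""
    ref_audio = design_model.design_voice(ref_text, instruct)
    prompt = base_model.create_voice_clone_prompt(ref_audio, ref_text)
    return [base_model.speak(line, prompt) for line in lines]


outputs = design_then_clone(
    FakeVoiceDesignModel(),
    FakeBaseModel(),
    instruct="speak in a nervous teenage male voice with rising intonation",
    ref_text="Um, hi, is this the right room?",
    lines=["First line.", "Second line."],
)
```

The point of the design is that the reference clip is generated once and the resulting prompt is shared across all later calls, so the voice stays consistent from line to line.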

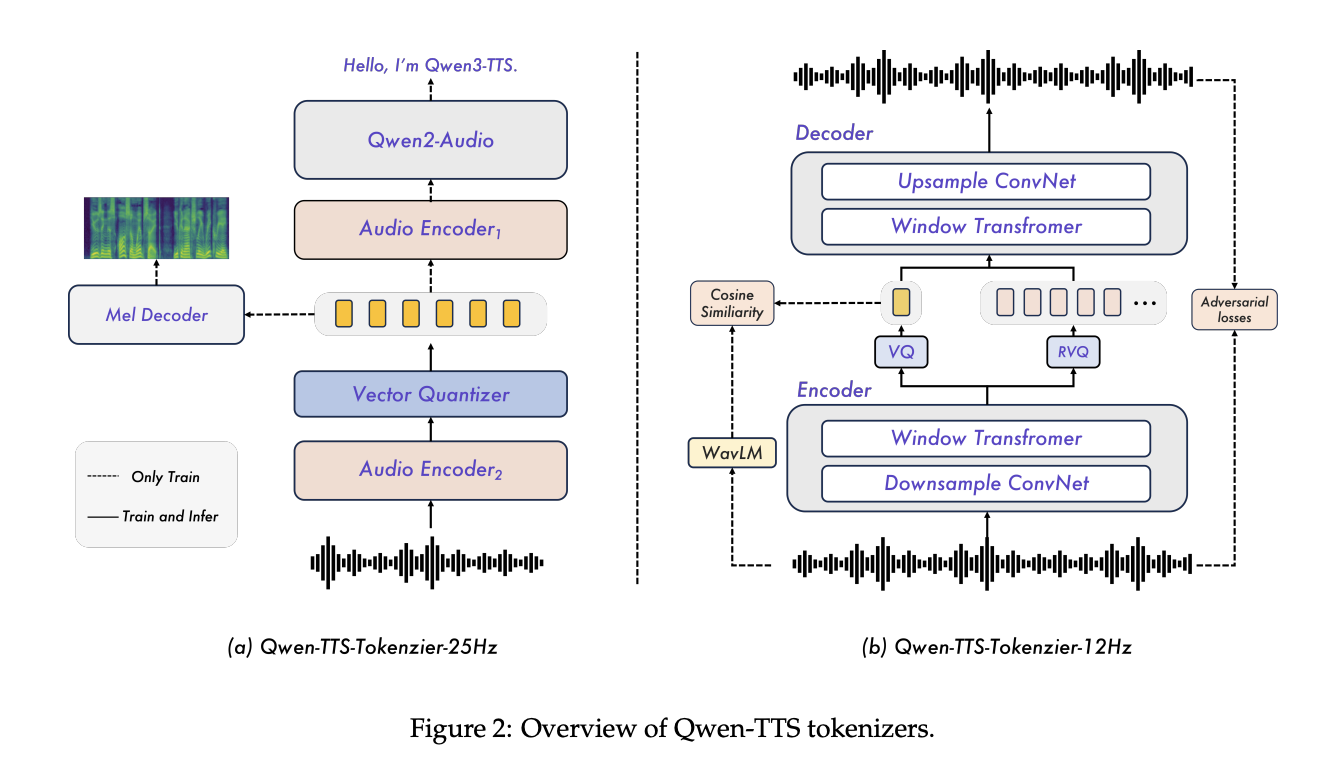

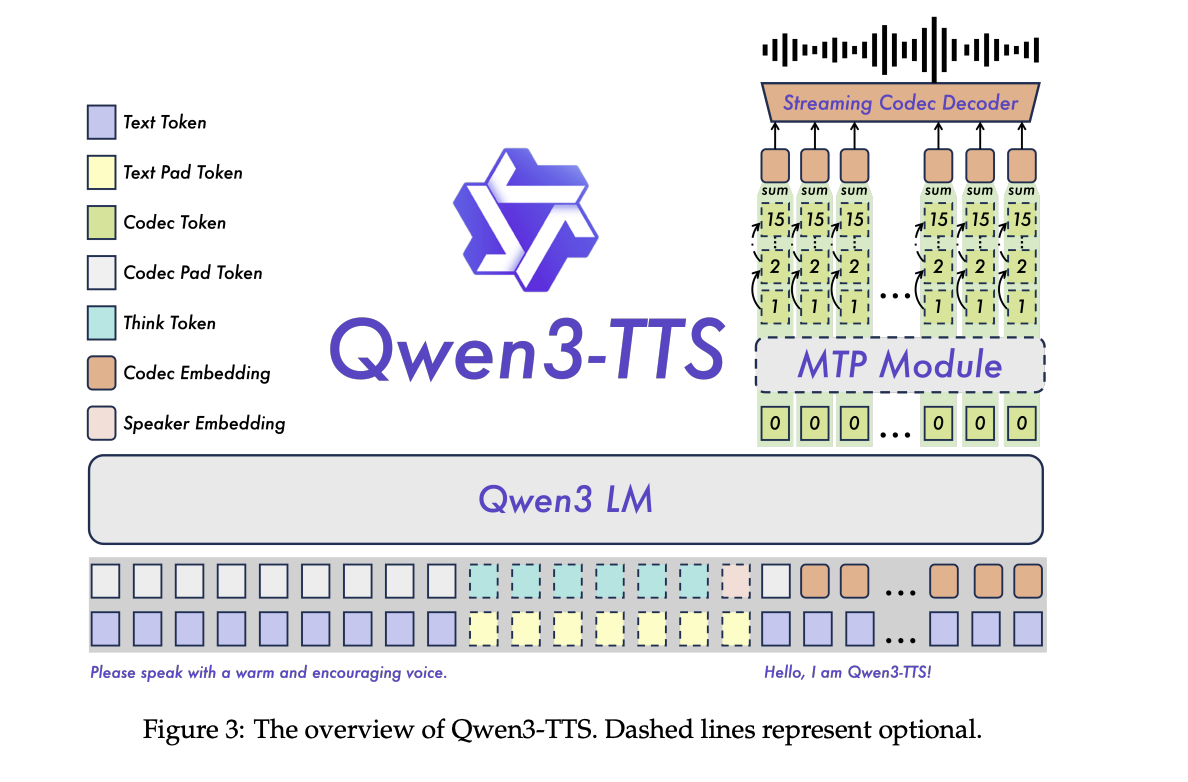

Architecture, tokenizer, and streaming path

Qwen3-TTS is a dual-track language model: one track predicts discrete acoustic tokens from text, while the other handles alignment and control signals. The system is trained on more than 5 million hours of multilingual speech in 3 pre-training stages that move from general mapping, to high-quality data, to long-context support up to 32,768 tokens.

A key component is the Qwen3-TTS-Tokenizer-12Hz codec. It operates at 12.5 frames per second, which is 80 ms per frame, and uses 16 quantizers with a 2048-entry codebook. On LibriSpeech test-clean it reaches PESQ wideband 3.21, STOI 0.96, and UTMOS 4.16, outperforming SpeechTokenizer, XCodec, Mimi, FireredTTS 2, and other recent semantic tokenizers while using a similar or lower frame rate.

The tokenizer is implemented as a pure left-context streaming decoder, so it can emit waveforms as soon as enough tokens are available. With 4 tokens per packet, each streaming packet carries 320 ms of audio. The non-DiT, BigVGAN-free decoder design reduces decode cost and simplifies batching.
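The codec figures above fit together with simple arithmetic, sketched below. The bitrate is a derived number rather than one reported in the article, and it assumes each of the 16 quantizers indexes its own 2048-entry codebook. The `packetize` helper is hypothetical and only illustrates grouping frames into fixed-size streaming packets.

```python
import math

FRAME_RATE_HZ = 12.5      # codec frames per second (from the article)
NUM_QUANTIZERS = 16       # quantizers per frame (from the article)
CODEBOOK_SIZE = 2048      # entries per codebook (from the article)
TOKENS_PER_PACKET = 4     # frames per streaming packet (from the article)

# Duration of one frame and of one streaming packet.
frame_ms = 1000.0 / FRAME_RATE_HZ
packet_ms = frame_ms * TOKENS_PER_PACKET

# Derived bitrate, assuming one 2048-entry codebook per quantizer.
bits_per_code = math.log2(CODEBOOK_SIZE)
bitrate_bps = FRAME_RATE_HZ * NUM_QUANTIZERS * bits_per_code


def packetize(frames, size=TOKENS_PER_PACKET):
    """Yield only complete packets; trailing frames wait for more input."""
    for start in range(0, len(frames) - size + 1, size):
        yield frames[start:start + size]


# Ten frames (800 ms of audio) produce two full packets; two frames remain.
packets = list(packetize(list(range(10))))

print(f"{frame_ms:.0f} ms per frame, {packet_ms:.0f} ms per packet")
print(f"derived bitrate: {bitrate_bps:.0f} bits per second")
```

Because the decoder only needs left context, a packet can be decoded to audio as soon as its 4 frames exist, without waiting for the rest of the utterance.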

On the language model side, the research team reports end-to-end streaming measurements on a single vLLM backend with torch.compile and CUDA Graph optimizations. For Qwen3-TTS-12Hz-0.6B-Base and Qwen3-TTS-12Hz-1.7B-Base at concurrency 1, first-packet latency is around 97 ms and 101 ms, with real-time factors of 0.288 and 0.313 respectively. Even at concurrency 6, first-packet latency stays around 299 ms and 333 ms.
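To read these numbers, it helps to relate the real-time factor to wall-clock time. The sketch below assumes the usual definition, RTF = compute time divided by audio duration, which the article does not spell out. The function names are illustrative, and first-packet latency is a separately measured quantity that this arithmetic does not reproduce.

```python
# Reported real-time factors at concurrency 1 (from the article).
REPORTED_RTF = {
    "Qwen3-TTS-12Hz-0.6B-Base": 0.288,
    "Qwen3-TTS-12Hz-1.7B-Base": 0.313,
}

PACKET_AUDIO_MS = 320.0  # audio carried by one streaming packet


def synthesis_seconds(audio_seconds, rtf):
    """Compute time needed to generate `audio_seconds` of speech."""
    return audio_seconds * rtf


def packet_headroom_ms(rtf, packet_audio_ms=PACKET_AUDIO_MS):
    """Slack per packet: playback time minus steady-state compute time."""
    return packet_audio_ms - packet_audio_ms * rtf


for name, rtf in REPORTED_RTF.items():
    print(
        f"{name}: 10 s of audio in about {synthesis_seconds(10.0, rtf):.2f} s, "
        f"about {packet_headroom_ms(rtf):.0f} ms of headroom per packet"
    )
```

Any RTF below 1 means audio is produced faster than it is played back, so a streaming client should not run dry once the first packet has arrived.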

Alignment and control

Post-training uses a staged alignment pipeline. First, Direct Preference Optimization (DPO) aligns generated speech with human preferences on multilingual data. Then GSPO (Group Sequence Policy Optimization) with rule-based rewards improves stability and prosody. A final speaker fine-tuning stage on the Base model yields target-speaker variants while preserving the core capabilities of the general model.
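For readers unfamiliar with the first stage, the sketch below shows the standard DPO loss for a single preference pair. It is the textbook objective, not necessarily the exact variant used for Qwen3-TTS: the article does not give the loss, the value of beta, or how speech preference pairs are built. All inputs here are made-up sequence log-probabilities.

```python
import math


def dpo_loss(logp_chosen, logp_rejected,
             ref_logp_chosen, ref_logp_rejected, beta=0.1):
    """Standard DPO loss for one (chosen, rejected) pair.

    Each argument is the total log-probability of a full output sequence
    under the trained policy or the frozen reference model.
    """
    # How much more the policy prefers the chosen sample than the
    # reference does, relative to the rejected sample.
    margin = (logp_chosen - ref_logp_chosen) - (logp_rejected - ref_logp_rejected)
    # -log(sigmoid(beta * margin)) written in a numerically simple form.
    return math.log1p(math.exp(-beta * margin))


# Policy identical to the reference: the loss equals log(2).
neutral = dpo_loss(-50.0, -60.0, -50.0, -60.0)

# Policy has shifted probability toward the preferred sample: lower loss.
improved = dpo_loss(-45.0, -65.0, -50.0, -60.0)

print(f"neutral={neutral:.4f} improved={improved:.4f}")
```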

Instruction following is implemented in a ChatML-style format, where text instructions about style, emotion, or tempo are prepended to the input. The same interface powers VoiceDesign, CustomVoice style prompts, and fine-grained edits for cloned speakers.
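As a rough illustration of what "prepended in a ChatML-style format" can look like, the sketch below builds a prompt string with the generic ChatML delimiters. The role names and overall layout are assumptions for illustration only. The article does not show the actual Qwen3-TTS template, and `build_tts_prompt` is a hypothetical helper.

```python
IM_START = "<|im_start|>"  # generic ChatML turn delimiters
IM_END = "<|im_end|>"


def build_tts_prompt(text, instruction=None):
    """Place an optional style instruction ahead of the text to be spoken.

    The "system" and "user" role names are assumed here, not taken
    from the Qwen3-TTS release.
    """
    turns = []
    if instruction:
        turns.append(f"{IM_START}system\n{instruction}{IM_END}")
    turns.append(f"{IM_START}user\n{text}{IM_END}")
    return "\n".join(turns)


prompt = build_tts_prompt(
    "The results are in, and we won.",
    instruction="Speak slowly, in an excited tone.",
)
print(prompt)
```

Keeping the instruction in its own delimited turn is what lets one interface serve voice design, preset-speaker styling, and edits to a cloned voice: only the instruction text changes.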

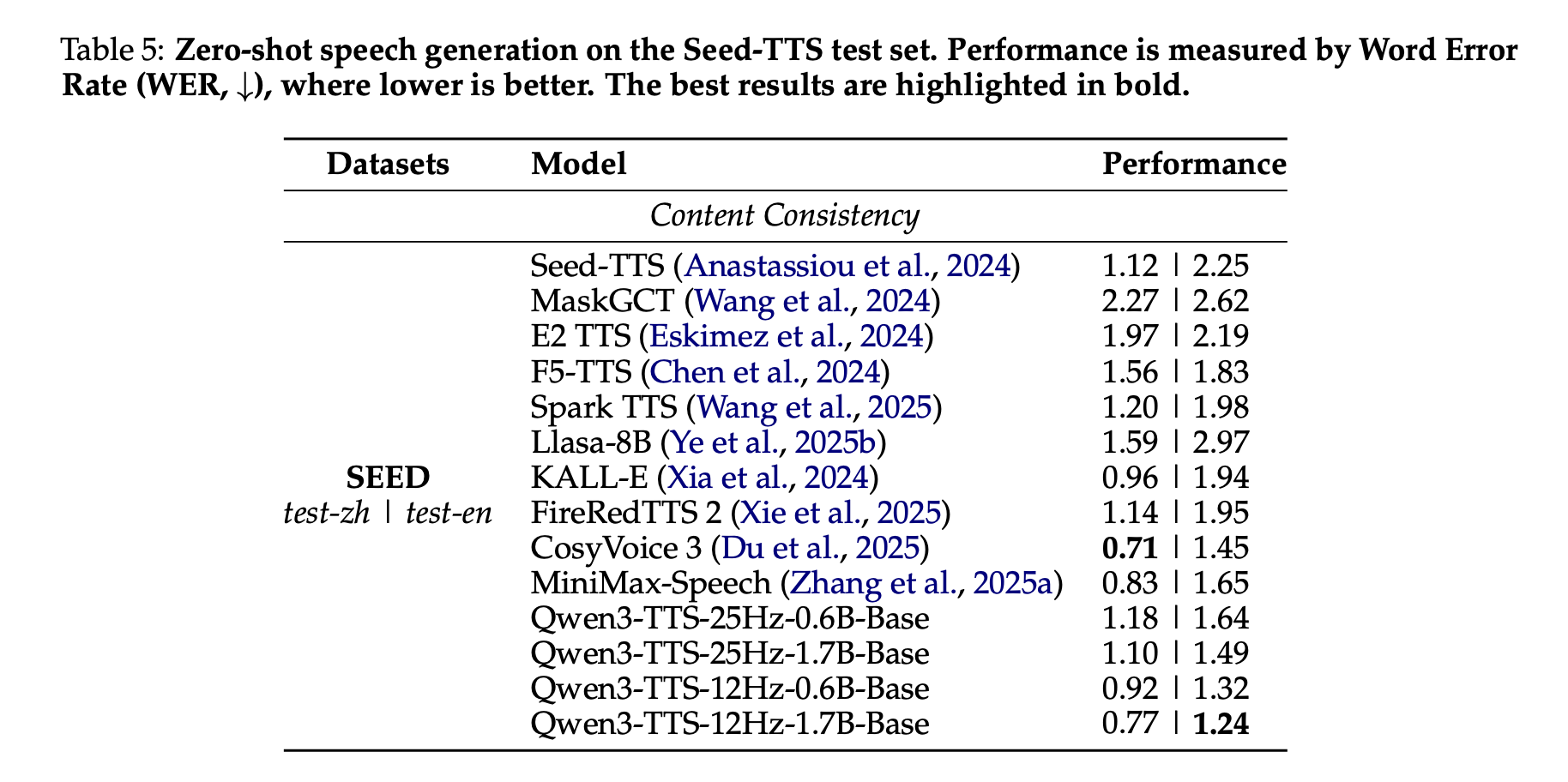

Benchmarks, zero shot cloning, and multilingual speech

On the Seed-TTS test set, Qwen3-TTS is evaluated as a zero-shot voice cloning system. The Qwen3-TTS-12Hz-1.7B-Base model reaches a Word Error Rate of 0.77 on test-zh and 1.24 on test-en. The research team highlights the 1.24 WER on test-en as state of the art among the compared systems, while the Chinese WER is close to, but not lower than, the best CosyVoice 3 score.
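For context on the metric, the sketch below computes a word error rate as word-level edit distance divided by reference length, expressed as a percentage. It assumes the reported figures are percentages. In TTS benchmarks the hypothesis is typically an ASR transcript of the synthesized audio, but the article does not describe the exact evaluation pipeline, so treat this as a generic illustration with made-up sentences.

```python
def word_error_rate(reference, hypothesis):
    """WER in percent: (substitutions + deletions + insertions) / ref words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # Levenshtein distance over words, keeping one row at a time.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        cur = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            cur.append(min(
                prev[j] + 1,         # deletion
                cur[j - 1] + 1,      # insertion
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = cur
    return 100.0 * prev[-1] / len(ref)


# One substituted word out of five reference words.
example = word_error_rate(
    "the quick brown fox jumps",
    "the quick brown box jumps",
)
print(f"WER = {example:.1f}%")
```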

On a multilingual TTS test set covering 10 languages, with MiniMax-Speech and ElevenLabs Multilingual v2 as the comparison systems, Qwen3-TTS achieves the lowest WER in 6 languages (Chinese, English, Italian, French, Korean, and Russian) and remains competitive in the other 4. It also obtains the highest speaker similarity in all 10 languages.

Cross-lingual evaluations show that Qwen3-TTS-12Hz-1.7B-Base reduces mixed error rate for several language pairs. For zh-to-ko, for example, the error drops from 14.4 for CosyVoice 3 to 4.82, a relative reduction of roughly 66.5 percent.
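The relative reduction quoted for zh-to-ko can be checked directly from the two error rates. The helper name below is illustrative.

```python
def relative_reduction(baseline, new):
    """Percentage by which `new` is lower than `baseline`."""
    return (baseline - new) / baseline * 100.0


# zh-to-ko mixed error rate: CosyVoice 3 versus Qwen3-TTS-12Hz-1.7B-Base.
zh_to_ko = relative_reduction(14.4, 4.82)
print(f"{zh_to_ko:.1f}% relative reduction")
```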

On InstructTTSEval, the Qwen3-TTS-12Hz-1.7B-VoiceDesign model sets new state-of-the-art scores among open-source models on Description-Speech Consistency and Response Precision in both Chinese and English, and is competitive with commercial systems like Hume and Gemini on several metrics.

Key Takeaways

- Fully open-source multilingual TTS stack: Qwen3-TTS is an Apache 2.0 licensed suite that covers 3 tasks in one stack (high-quality TTS, 3-second voice cloning, and instruction-based voice design) across 10 languages using the 12Hz tokenizer family.

- Efficient discrete codec and real-time streaming: The Qwen3-TTS-Tokenizer-12Hz uses 16 codebooks at 12.5 frames per second, reaches strong PESQ, STOI, and UTMOS scores, and supports packetized streaming with about 320 ms of audio per packet. In the reported setup, first-packet latency at concurrency 1 is below 120 ms for both the 0.6B and 1.7B models.

- Task-specific model variants: The release offers Base models for cloning and generic TTS, CustomVoice models with 9 predefined speakers and style prompts, and a VoiceDesign model that generates new voices directly from natural language descriptions, which can then be reused by the Base model.

- Strong alignment and multilingual quality: A multi-stage alignment pipeline with DPO, GSPO, and speaker fine-tuning gives Qwen3-TTS low word error rates and high speaker similarity. Among the evaluated systems, it has the lowest WER in 6 of 10 languages and the best speaker similarity in all 10, and it reports state-of-the-art zero-shot English cloning on Seed-TTS.
