Thinking Machines Lab has moved its Tinker training API into general availability and added 3 major capabilities: support for the Kimi K2 Thinking reasoning model, OpenAI compatible sampling, and image input through Qwen3-VL vision language models. For AI engineers, this turns Tinker into a practical way to fine tune frontier models without building distributed training infrastructure.

What Tinker Actually Does

Tinker is a training API that focuses on large language model fine tuning and hides the heavy lifting of distributed training. You write a simple Python loop that runs on a CPU only machine. You define the data or RL environment, the loss, and the training logic. The Tinker service maps that loop onto a cluster of GPUs and executes the exact computation you specify.

The API exposes a small set of primitives, such as forward_backward to compute gradients, optim_step to update weights, sample to generate outputs, and functions for saving and loading state. This keeps the training logic explicit for people who want to implement supervised learning, reinforcement learning, or preference optimization, but do not want to manage GPU failures and scheduling.
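The shape of such a loop can be sketched with a toy stand-in. The class below is not the Tinker client: `ToyTrainingClient` and its method signatures are hypothetical and only borrow the primitive names forward_backward and optim_step from the description above. It fits a single weight with plain gradient descent so that the compute-gradients-then-update rhythm is visible on a CPU.

```python
class ToyTrainingClient:
    """Hypothetical local stand-in; NOT the real Tinker client or its signatures."""

    def __init__(self, weight=0.0):
        self.weight = weight
        self._grad = 0.0

    def forward_backward(self, batch):
        """Return the mean squared error of y = weight * x and accumulate its gradient."""
        n = len(batch)
        loss = sum((self.weight * x - y) ** 2 for x, y in batch) / n
        self._grad += sum(2 * (self.weight * x - y) * x for x, y in batch) / n
        return loss

    def optim_step(self, learning_rate):
        """Apply one plain SGD update, then clear the accumulated gradient."""
        self.weight -= learning_rate * self._grad
        self._grad = 0.0


def train(client, batch, steps=200, learning_rate=0.05):
    """The user-side loop: one gradient computation, then one optimizer step."""
    losses = []
    for _ in range(steps):
        losses.append(client.forward_backward(batch))
        client.optim_step(learning_rate)
    return losses


data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]  # samples of y = 2x
client = ToyTrainingClient()
losses = train(client, data)
```

In a hosted setting the two calls would go to a remote service rather than run locally, but the control flow written by the user would keep this explicit form.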

Tinker uses low rank adaptation, LoRA, rather than full fine tuning for all supported models. LoRA trains small adapter matrices on top of frozen base weights, which reduces memory and makes it practical to run repeated experiments on large mixture of experts models in the same cluster.
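To make the memory argument concrete, here is a minimal pure Python sketch of the standard LoRA formulation, y = W x + (alpha / r) · B (A x), together with a count of trainable parameters for one weight matrix. The dimensions, rank, and alpha are illustrative assumptions, not values Tinker is known to use.

```python
def lora_param_counts(d_in, d_out, rank):
    """Return (full, lora) trainable parameter counts for one weight matrix."""
    full = d_in * d_out
    lora = rank * (d_in + d_out)  # A is rank x d_in, B is d_out x rank
    return full, lora


def matvec(matrix, vector):
    """Multiply a list-of-rows matrix by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def lora_forward(W, A, B, x, alpha, rank):
    """Compute W x + (alpha / rank) * B (A x); W is frozen, only A and B would train."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / rank
    return [b + scale * u for b, u in zip(base, update)]


# Illustrative sizes only (assumed, not taken from any Tinker configuration).
full, lora = lora_param_counts(d_in=4096, d_out=4096, rank=16)
print(full, lora, full // lora)  # 16777216 131072 128
```

With these assumed sizes the adapter holds 128 times fewer trainable parameters than the full matrix, which is the kind of saving that makes repeated experiments on very large models affordable.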

General Availability and Kimi K2 Thinking

The flagship change in the December 2025 update is that Tinker no longer has a waitlist. Anyone can sign up, see the current model lineup and pricing, and run cookbook examples directly.

On the model side, users can now fine tune moonshotai/Kimi-K2-Thinking on Tinker. Kimi K2 Thinking is a reasoning model with about 1 trillion total parameters in a mixture of experts architecture. It is designed for long chains of thought and heavy tool use, and it is currently the largest model in the Tinker catalog.

In the Tinker model lineup, Kimi K2 Thinking appears as a Reasoning MoE model, alongside Qwen3 dense and mixture of experts variants, Llama-3 generation models, and DeepSeek-V3.1. Reasoning models always produce internal chains of thought before the visible answer, while instruction models focus on latency and direct responses.

OpenAI Compatible Sampling While Training

Tinker already had a native sampling interface through its SamplingClient. The typical inference pattern builds a ModelInput from token ids, passes SamplingParams, and calls sample to get a future that resolves to outputs.

The new release adds a second path that mirrors the OpenAI completions interface. A model checkpoint on Tinker can be referenced through a URI like:

    response = openai_client.completions.create(
        model="tinker://0034d8c9-0a88-52a9-b2b7-bce7cb1e6fef:train:0/sampler_weights/000080",
        prompt="The capital of France is",
        max_tokens=20,
        temperature=0.0,
        stop=["\n"],
    )

Vision Input With Qwen3-VL On Tinker

The third major capability is image input. Tinker now exposes 2 Qwen3-VL vision language models, Qwen/Qwen3-VL-30B-A3B-Instruct and Qwen/Qwen3-VL-235B-A22B-Instruct. They are listed in the Tinker model lineup as Vision MoE models and are available for training and sampling through the same API surface.

To send an image into a model, you construct a ModelInput that interleaves an ImageChunk with text chunks. The research blog uses the following minimal example:

    model_input = tinker.ModelInput(chunks=[
        tinker.types.ImageChunk(data=image_data, format="png"),
        tinker.types.EncodedTextChunk(tokens=tokenizer.encode("What is this?")),
    ])

Here image_data is raw bytes and format identifies the encoding, for example png or jpeg. You can use the same representation for supervised learning and for RL fine tuning, which keeps multimodal pipelines consistent at the API level. Vision inputs are fully supported in Tinker’s LoRA training setup.
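One way to obtain the raw bytes and a matching format string is to read the file and inspect its leading signature bytes. The helpers below are a hypothetical convenience, not part of Tinker; they rely only on the well known PNG and JPEG file signatures and the standard library.

```python
from pathlib import Path

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"  # fixed 8-byte PNG header
JPEG_SIGNATURE = b"\xff\xd8\xff"      # JPEG start-of-image marker plus next marker byte


def detect_image_format(image_data: bytes) -> str:
    """Return "png" or "jpeg" based on the leading signature bytes."""
    if image_data.startswith(PNG_SIGNATURE):
        return "png"
    if image_data.startswith(JPEG_SIGNATURE):
        return "jpeg"
    raise ValueError("unrecognized image format")


def load_image(path):
    """Read a file and return (raw bytes, detected format string)."""
    image_data = Path(path).read_bytes()
    return image_data, detect_image_format(image_data)
```

The returned pair lines up with the data and format arguments of ImageChunk in the example above, so a mislabeled file extension is caught before the request is built.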

Qwen3-VL Versus DINOv2 On Image Classification

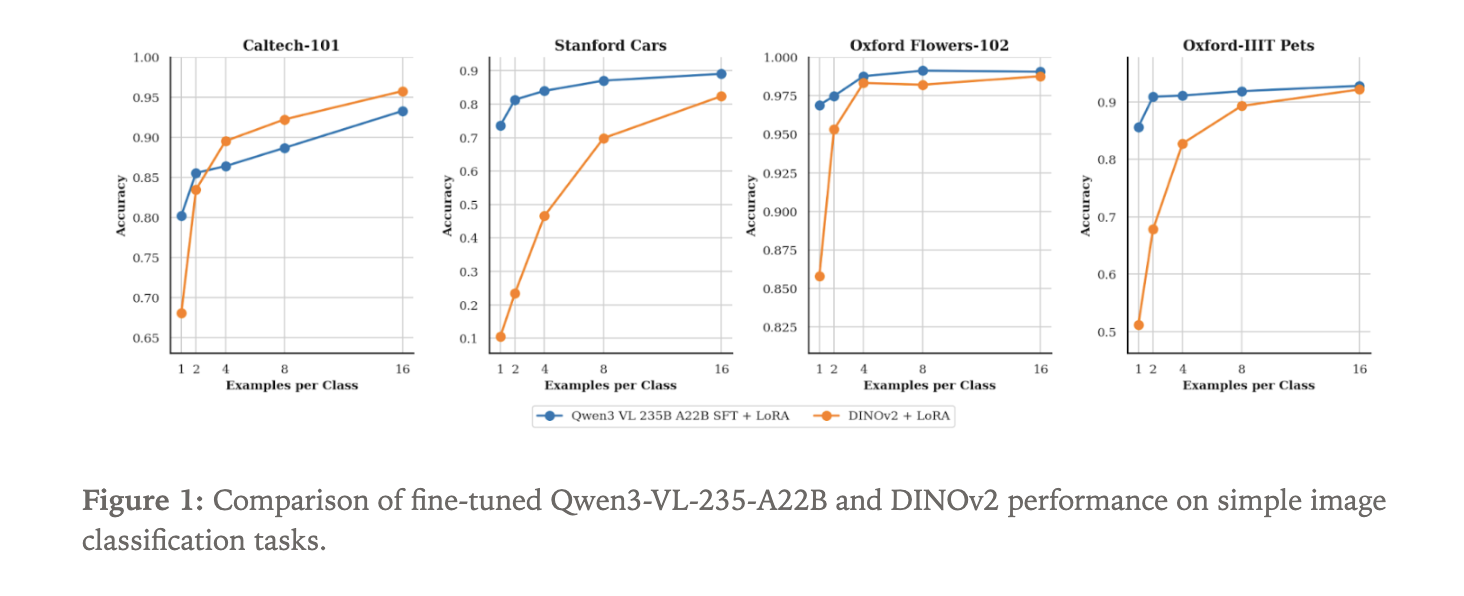

To show what the new vision path can do, the Tinker team fine tuned Qwen3-VL-235B-A22B-Instruct as an image classifier. They used 4 standard datasets:

- Caltech 101

- Stanford Cars

- Oxford Flowers

- Oxford Pets

Because Qwen3-VL is a language model with visual input, classification is framed as text generation. The model receives an image and generates the class name as a text sequence.
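Framing classification as generation means the evaluation has to turn free text back into a label. The sketch below shows one plausible scoring scheme, exact match after whitespace and case normalization. It is an assumption for illustration; the article does not describe how the Tinker team scored outputs, and the class names are sample values.

```python
def normalize(text):
    """Lowercase and collapse whitespace so trivial formatting differences are ignored."""
    return " ".join(text.strip().lower().split())


def parse_label(generated, class_names):
    """Return the class whose normalized name equals the generated text, else None."""
    lookup = {normalize(name): name for name in class_names}
    return lookup.get(normalize(generated))


def accuracy(generations, targets, class_names):
    """Fraction of generations that parse to the target class."""
    correct = sum(parse_label(g, class_names) == t for g, t in zip(generations, targets))
    return correct / len(targets)


class_names = ["Abyssinian", "Bengal", "Great Pyrenees"]
print(parse_label("  great  pyrenees\n", class_names))  # Great Pyrenees
```

Any output that does not match a known class name counts as an error here, which is a stricter rule than a classification head that always picks one of the N labels.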

As a baseline, they fine tuned a DINOv2 base model. DINOv2 is a self supervised vision transformer that encodes images into embeddings and is often used as a backbone for vision tasks. For this experiment, a classification head is attached on top of DINOv2 to predict a distribution over the N labels in each dataset.
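For contrast with the generative framing, a classification head can be sketched as a linear layer followed by a softmax over the N labels. This is a generic illustration in pure Python with made-up weights, not the configuration used in the experiment.

```python
import math


def softmax(logits):
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classification_head(embedding, weights, biases):
    """Map an image embedding to a probability distribution over N labels."""
    logits = [
        sum(w * e for w, e in zip(row, embedding)) + b
        for row, b in zip(weights, biases)
    ]
    return softmax(logits)


# Toy 2-dimensional embedding and 3 classes (illustrative values only).
probs = classification_head(
    embedding=[1.0, 2.0],
    weights=[[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]],
    biases=[0.0, 0.0, 0.0],
)
predicted = probs.index(max(probs))
```

The head has one weight row per label, so its output is always a valid distribution over the dataset's classes.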

Both Qwen3-VL-235B-A22B-Instruct and DINOv2 base are trained using LoRA adapters within Tinker. The focus is data efficiency. The experiment sweeps the number of labeled examples per class, starting from only 1 sample per class and increasing. For each setting, the team measures classification accuracy.
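A sweep over examples per class needs a way to draw a fixed number of labeled samples from each class. The helper below is a minimal sketch of that step under assumed details: the function name, the seeding, and the particular k values in the usage loop are hypothetical, since the article only says the sweep starts at 1 sample per class and increases.

```python
import random
from collections import defaultdict


def sample_k_per_class(examples, k, seed=0):
    """Return up to k (item, label) pairs per label, drawn without replacement."""
    by_label = defaultdict(list)
    for item, label in examples:
        by_label[label].append((item, label))
    rng = random.Random(seed)  # fixed seed keeps the subsets reproducible
    subset = []
    for label in sorted(by_label):
        pool = by_label[label]
        subset.extend(rng.sample(pool, min(k, len(pool))))
    return subset


# Toy dataset: 5 "cat" and 5 "dog" items standing in for labeled images.
examples = [(f"img{i}", "cat" if i % 2 else "dog") for i in range(10)]
for k in (1, 2, 4):  # illustrative sweep values
    subset = sample_k_per_class(examples, k)
    print(k, len(subset))
```

Each subset would then be used to fine tune a model and measure accuracy on a held out test set, giving one point on the data efficiency curve per value of k.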

Key Takeaways

- Tinker is now generally available, so anyone can sign up and fine tune open weight LLMs through a Python training loop while Tinker handles the distributed training backend.

- The platform supports Kimi K2 Thinking, a 1 trillion parameter mixture of experts reasoning model from Moonshot AI, and exposes it as a fine tunable reasoning model in the Tinker lineup.

- Tinker adds an OpenAI compatible inference interface, which lets you sample from in training checkpoints using a tinker://… model URI through standard OpenAI style clients and tooling.

- Vision input is enabled through Qwen3-VL models, Qwen3-VL 30B and Qwen3-VL 235B, so developers can build multimodal training pipelines that combine ImageChunk inputs with text using the same LoRA based API.

- Thinking Machines demonstrates that Qwen3-VL 235B, fine tuned on Tinker, achieves stronger few shot image classification performance than a DINOv2 base baseline on datasets such as Caltech 101, Stanford Cars, Oxford Flowers, and Oxford Pets, highlighting the data efficiency of large vision language models.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.